Swarm Mode is Docker's built-in orchestration system for scaling containers across a cluster of machines. Multiple independent hosts running Docker Engine pool their resources, forming a swarm.

The feature comes bundled with Docker and includes everything you need to deploy apps across nodes. Swarm Mode has a declarative scaling model where you state the number of replicas you require. The swarm manager takes action to match the actual number of replicas to your request, creating and destroying containers as necessary.

Swarm offers more than replica management. Clusters benefit from integrated service discovery, support for rolling updates, and network traffic routing that works with external load balancers.

Here's how you can use Swarm mode to set up simple distributed workloads across a fleet of machines. You should use Swarm if you want to host scalable applications with redundancy using a standard Docker installation, with no other dependencies required.

Creating Your Own Swarm

Make sure you've got Docker installed before you continue. You'll need the full Docker CE package on each machine you want to add to the swarm.

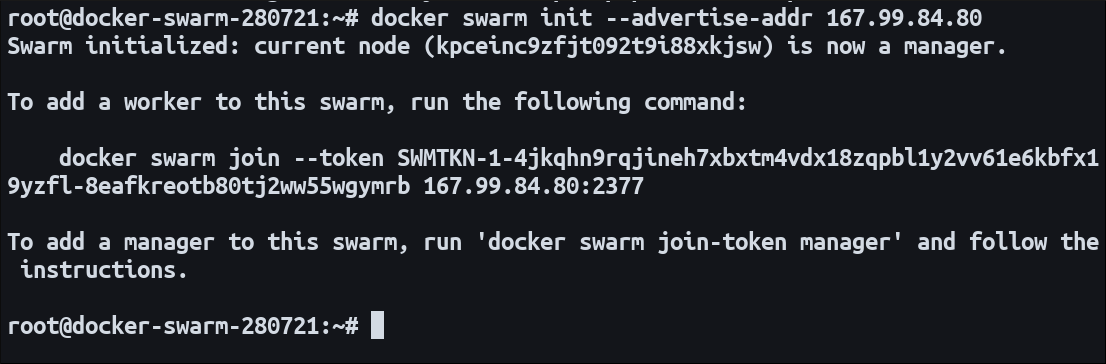

Designate one of your hosts as the swarm manager. This node will orchestrate the cluster by issuing container scheduling requests to the other nodes. Run docker swarm init on the manager to start the cluster setup process:

docker swarm init --advertise-addr 192.168.0.1

Replace the IP address with your manager node's real IP. The command will emit a docker swarm join command which you should run on your secondary nodes. They'll then join the swarm and become eligible to host containers.

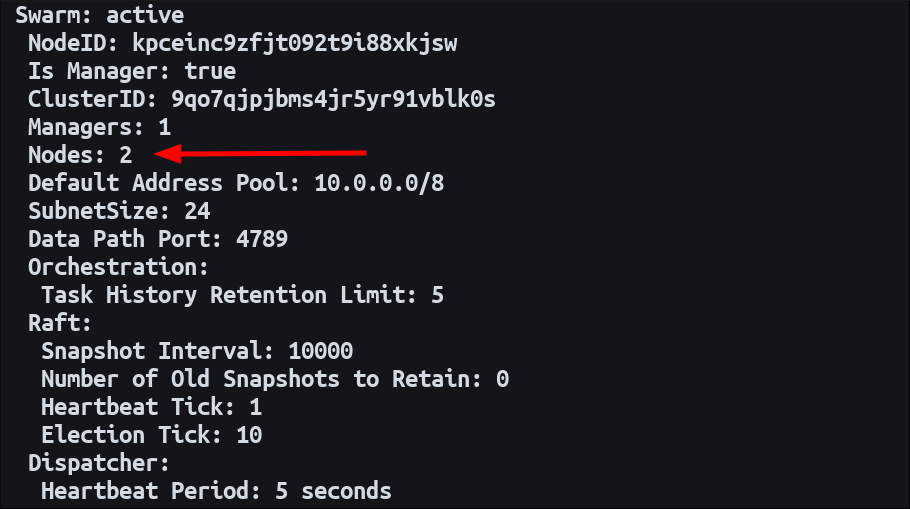
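If the join command has scrolled out of view, the manager can print it again. The sketch below wraps the two commands involved in small shell functions. The function names are illustrative, and the example assumes the manager listens on 2377, the default swarm management port.

```shell
# Run on the manager: print the complete `docker swarm join` command for new workers.
show_worker_join_command() {
  docker swarm join-token worker
}

# Run on a secondary node: join the swarm as a worker.
#   $1  join token printed by the manager
#   $2  manager address as host:port (2377 is the default management port)
join_swarm_as_worker() {
  docker swarm join --token "$1" "$2"
}

# Example (token elided):
#   join_swarm_as_worker "<token>" 192.168.0.1:2377
```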

Once you've added your nodes, run docker info on the manager to inspect the cluster's status. The Swarm section of the command's output should be listed as "active." Check that the "Nodes" count matches the number of nodes you've added.

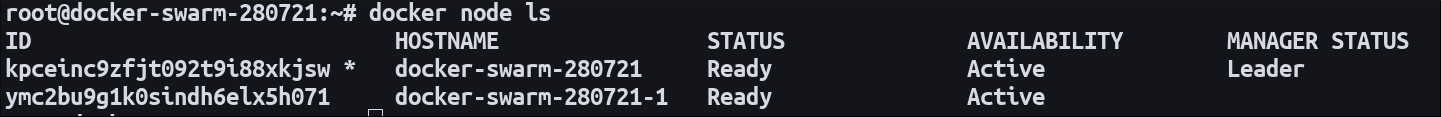

You can get more details about a node by running docker node ls. This shows each node's unique ID, its hostname, and its current status. Nodes that show an availability of "active" with a status of "ready" are healthy and ready to support your workloads. The Manager Status column indicates nodes that are also acting as swarm managers. The "leader" is the node with overall responsibility for the cluster.
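These checks can also be scripted. The following is a minimal sketch that uses the --format option of docker info and docker node ls to pull out the fields described above. The function names are made up for this example.

```shell
# Succeeds only when this Docker Engine reports that it belongs to an active swarm.
swarm_is_active() {
  [ "$(docker info --format '{{.Swarm.LocalNodeState}}')" = "active" ]
}

# Print one line per node: hostname, status, availability, manager status.
# Must be run on a manager node.
list_node_health() {
  docker node ls --format '{{.Hostname}} {{.Status}} {{.Availability}} {{.ManagerStatus}}'
}

# Example:
#   swarm_is_active && list_node_health
```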

Deploying a Container

Once your nodes are ready, you can deploy a container into your swarm. Swarm mode uses the concept of "services" to describe container deployments. Each service configuration references a Docker image and the number of replicas to create from that image.

docker service create --replicas 3 --name apache httpd:latest

This command creates a service using the httpd:latest image for the Apache web server. Three independent replicas will be created, giving you resiliency against container terminations and node outages. Container replicas are called "tasks" in Docker Swarm parlance.

Docker will continually maintain the requested state. If one of the nodes drops offline, the replicas it was hosting will be rescheduled to the others. You'll have three Apache containers running throughout the lifetime of the service.
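To confirm that the live state matches the requested state, you can compare the two numbers that docker service ls reports in its Replicas column (for example "3/3"). The helper names below are hypothetical, and the sketch assumes the name filter matches exactly one service and that the column holds a plain "running/desired" value.

```shell
# Succeeds when a "running/desired" string such as "3/3" shows every replica running.
replicas_converged() {
  running=${1%%/*}   # text before the slash
  desired=${1##*/}   # text after the slash
  [ "$running" -eq "$desired" ]
}

# Look up the replica summary for one service and test whether it has converged.
#   $1  service name
service_converged() {
  replicas_converged "$(docker service ls --filter "name=$1" --format '{{.Replicas}}')"
}

# Example:
#   service_converged apache && echo "all replicas running"
```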

Scaling Services

You can scale services at any time using the docker service scale command:

docker service scale apache=5

Docker will add two new container instances so the number of replicas continues to match the requested count. The extra instances will be scheduled to nodes with enough free capacity to support them.

Services can also be scaled with docker service update:

docker service update apache --replicas=5

When you use this variant, you can roll back the change using a dedicated command:

docker service rollback apache

The service will be reverted to its previous configuration of three replicas. Docker will destroy two container instances, allowing the live replica count to match the previous state again.

Rolling Updates

Swarm mode supports rolling updates where container instances are updated incrementally. You can specify a delay between deploying the revised service to each batch of tasks in the swarm. This gives you time to act on regressions if issues are noted. You can quickly roll back as not all tasks will have received the new service.

Add the --update-delay flag to a docker service update command to activate rolling updates. The delay is specified as a combination of hours h, minutes m and seconds s. By default, the swarm manager will update each container instance individually. You can adjust the number of tasks updated in a single operation with the --update-parallelism flag.

Here's how to scale a service to 10 replicas, updating three containers at a time with a five minute delay between each batch:

docker service update --replicas=10 --update-delay 5m --update-parallelism 3 apache
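As a rough guide to how long such a rollout spends waiting, you can work out the number of batches from the task count and the parallelism, then multiply the gaps between batches by the delay. The function below is a back-of-the-envelope sketch, not something Docker provides. It gives a lower bound because it ignores the time each task needs to start.

```shell
# Estimate the batches and the minimum waiting time for a rolling update.
#   $1  number of tasks to update
#   $2  --update-parallelism value (tasks per batch)
#   $3  --update-delay value in minutes
# Prints "<batches> <minutes>".
estimate_rollout() {
  batches=$(( ($1 + $2 - 1) / $2 ))        # ceiling division
  wait_minutes=$(( (batches - 1) * $3 ))   # one delay between consecutive batches
  echo "$batches $wait_minutes"
}

estimate_rollout 10 3 5   # prints "4 15"
```

Ten tasks updated three at a time need four batches, so there are three five-minute pauses and at least 15 minutes of waiting.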

Managing Services

Many familiar Docker commands also work with services. Prepend regular container management commands with docker service to list services, view their logs, and delete them.

- docker service inspect: Inspect the technical data of a named service.
- docker service logs: View log output associated with a named service.
- docker service ls: List all running services.
- docker service ps: Show the individual container instances encapsulated by a specific service.
- docker service rm: Remove a service with all its replicas. There is no confirmation prompt.

Besides the basic management operations described so far, services come with a rich set of configuration options. These can be applied when creating a service or later with the docker service update command.

Service options include environment variables, health check commands, DNS settings, labels, and restart conditions. Running container instances don't usually get destroyed on docker service update unless you're changing settings that require a recreation to take effect.
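As an illustration, the sketch below applies three of these options to an existing service in one docker service update call. The function name and the APP_ENV=production and team=web values are placeholders invented for this example.

```shell
# Apply an environment variable, a label, and a restart condition to a service.
#   $1  service name
# APP_ENV=production and team=web are placeholder values for illustration.
configure_service() {
  docker service update \
    --env-add APP_ENV=production \
    --label-add team=web \
    --restart-condition on-failure \
    "$1"
}

# Example:
#   configure_service apache
```

Adding an environment variable changes the container configuration, so expect the service's tasks to be replaced when this runs.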

Exposing Network Ports

Container network ports are exposed with the --publish flag for docker service create and docker service update. This lets you specify a target container port and the public port to expose it as.

docker service create --name nginx --replicas 5 --publish published=8080,target=80 nginx:latest

Now you can connect to port 8080 on any node in the swarm to access an instance of the NGINX service. This works even if the node you connect to isn't actually hosting one of the service's tasks. You simply interact with the swarm and it takes care of the network routing. This approach is called the "routing mesh."

An alternative option lets you publish container ports on the individual nodes where tasks are running. Add mode=host to the --publish flag to enable this. The service will only be exposed on the nodes which host it. This is useful in cases where you want to connect to a specific instance of the service. The routing mesh, by contrast, chooses the instance you connect to, irrespective of the node you use to connect.
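A host-mode service might be created as in the sketch below. The function name is hypothetical. It also passes --mode global so that each node runs exactly one task, which avoids two tasks on the same node competing for the same host port.

```shell
# Create a service whose port is published directly on each node that runs a task.
#   $1  service name
#   $2  port to publish on the node
#   $3  target port inside the container
#   $4  image
create_host_published_service() {
  docker service create \
    --name "$1" \
    --mode global \
    --publish "published=$2,target=$3,mode=host" \
    "$4"
}

# Example:
#   create_host_published_service nginx-host 8080 80 nginx:latest
```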

Docker Swarm supports overlay networks too. These are similar to regular Docker networks but span the nodes in the swarm. Joining a service to an existing overlay network lets its containers communicate with any other services on that network.

docker service create --name service1 --network demo-network my-image:latest

docker service create --name service2 --network demo-network my-image:latest

Tasks created by service1 and service2 will be able to reach each other via the overlay network. A default network called ingress provides the standard routing mesh functionality described above.
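The demo-network used in the commands above has to exist before a service can attach to it. A minimal sketch of creating it, run on a manager node, could look like this. The function name is illustrative.

```shell
# Create an overlay network that swarm services can attach to.
#   $1  network name
create_overlay_network() {
  docker network create --driver overlay "$1"
}

# Example:
#   create_overlay_network demo-network
```

Once both services are attached, containers on the network can usually reach each other by service name, such as service1 or service2, through Swarm's built-in service discovery.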

Conclusion

Swarm mode is a container orchestrator that's built right into Docker. As it's included by default, you can use it on any host with Docker Engine installed.

Creating a swarm lets you replicate containers across a fleet of physical machines. Swarm also lets you add multiple manager nodes to improve fault tolerance. If the active leader drops out of the cluster, another manager can take over to maintain operations.
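How many managers you need depends on how many failures you want to survive. Swarm managers coordinate through Raft consensus, which requires a majority of managers to stay available. The helper below is an illustrative calculation rather than a Docker command.

```shell
# Number of manager failures a swarm can tolerate while keeping a majority.
#   $1  number of manager nodes
manager_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

manager_fault_tolerance 3   # prints 1
manager_fault_tolerance 5   # prints 2
```

An odd number of managers is the usual choice, since going from three managers to four does not raise the number of failures the swarm can tolerate. An existing worker can be turned into a manager with docker node promote.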

Docker Swarm mode compares favorably to alternative orchestration platforms such as Kubernetes. It's easier to get started with as it's integrated with Docker and there are fewer concepts to learn. It's often simpler to install and maintain on self-managed hardware, although pre-packaged Kubernetes solutions like MicroK8s have eroded the Swarm convenience factor. Even so, Swarm mode remains a viable orchestrator for self-hosted workloads, particularly if you're looking for a developer-oriented CLI-driven solution that's less demanding on operations teams.