Kubernetes supports several ways of getting traffic into your cluster and on to your workloads. ClusterIPs, NodePorts, Ingresses, and load balancers are four widely used resources that all have a role in routing traffic. Each one lets you expose services with a different set of features and trade-offs.

The Basics

Kubernetes workloads aren't exposed on a stable network address by default. You make containers reachable, whether from inside or outside the cluster, by creating a service. Service resources route traffic to the containers within pods.

A service is an abstract mechanism for exposing pods on a network. Each service is assigned a type, most commonly ClusterIP, NodePort, or LoadBalancer. The type determines where the service can be reached from: only inside the cluster, or from outside it as well.

ClusterIP

ClusterIP is the default Kubernetes service type. Your service will be exposed on a ClusterIP unless you explicitly set another type.

A ClusterIP provides network connectivity within your cluster. It can't normally be accessed from outside. You use these services for internal networking between your workloads.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: my-app
  type: ClusterIP
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
```

This example manifest defines a ClusterIP service. Traffic to port 80 on the ClusterIP will be forwarded to port 80 on your pods (the `targetPort`). Pods with the `app: my-app` label will be added to the service.
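The selector only has something to match once pods carrying that label exist. As a minimal sketch, the Deployment below runs two pods labelled `app: my-app` that listen on port 80. The Deployment name `my-app`, the container name `web`, and the `nginx:1.25` image are illustrative assumptions, not part of the original example.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app            # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-app         # the Deployment manages pods with this label
  template:
    metadata:
      labels:
        app: my-app       # matches the Service's spec.selector
    spec:
      containers:
        - name: web
          image: nginx:1.25   # assumed example image serving HTTP on port 80
          ports:
            - containerPort: 80   # the port the Service's targetPort points at
```

Because the Service selects by label rather than by name, any pod with `app: my-app` is picked up, regardless of which Deployment created it.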

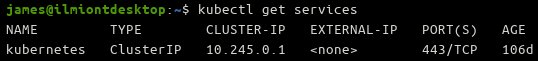

You can see the IP address that's been assigned by running `kubectl get services`. Other workloads in your cluster can use this IP address, or the service's DNS name, to interact with your service.
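In practice, clients usually reach a ClusterIP service through its DNS name rather than the raw IP. The one-off Pod below is a sketch of such a client. It assumes the Service is named `my-service`, lives in the `default` namespace, and that the cluster uses the standard `cluster.local` domain; the pod name `client` and the `curlimages/curl` image are also assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: client                      # hypothetical name
spec:
  restartPolicy: Never              # run the request once, then stop
  containers:
    - name: client
      image: curlimages/curl        # assumed image; pin a version in practice
      # <service>.<namespace>.svc.cluster.local resolves to the ClusterIP
      command: ["curl", "-s", "http://my-service.default.svc.cluster.local:80/"]
```

From a pod in the same namespace, the short name `my-service` also resolves, so `http://my-service/` would work too.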

You can manually set a ClusterIP to a specific IP address using the `spec.clusterIP` field:

```yaml
spec:
  type: ClusterIP
  clusterIP: 10.96.0.100
```

The `clusterIP` value must be a valid IP address within the range configured for your cluster. This is defined by the `--service-cluster-ip-range` setting of the Kubernetes API server; the address above assumes a range such as 10.96.0.0/12, the kubeadm default.

NodePort

A NodePort publicly exposes a service on a fixed port number. It lets you access the service from outside your cluster. You'll need to use the IP address of one of your cluster's nodes and the NodePort number, e.g. `123.123.123.123:30000`.

Creating a NodePort will open that port on every node in your cluster. Kubernetes will automatically route traffic arriving on that port to the service it's linked to.

Here's an example NodePort service manifest:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: my-app
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
```

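NodePort definitions have the same mandatory properties as ClusterIP services. The only difference is the change to `type: NodePort`. The `port` and `targetPort` fields work exactly as before, because NodePorts are backed by a ClusterIP: every NodePort service is also assigned a cluster IP, and traffic arriving on the node port is forwarded through it to the `targetPort` in your pods. If you omit `targetPort`, it defaults to the value of `port`.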

Applying the above manifest will assign your NodePort a random port number from the range available to Kubernetes. This defaults to ports 30000-32767 and is controlled by the API server's `--service-node-port-range` setting. You can manually specify a port by setting the `nodePort` field on an entry in `spec.ports`:

```yaml
spec:
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 32000
      protocol: TCP
```

This will route traffic arriving on port 32000 of any node to port 80 in your pods.

NodePorts are rarely ideal for public services. They use non-standard ports, which are unsuitable for most HTTP traffic. You can use a NodePort to quickly set up a service for development use or to expose a TCP or UDP service on its own port. When serving a production environment to users, you'll want to use an alternative instead.
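As a sketch of the TCP/UDP case, the Service below exposes a UDP workload on a fixed node port. The names `my-udp-service` and `my-udp-app` and the port numbers are hypothetical; the only structural differences from the HTTP example are `protocol: UDP` and the explicit `nodePort`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-udp-service      # hypothetical name
spec:
  type: NodePort
  selector:
    app: my-udp-app         # hypothetical pod label
  ports:
    - name: udp
      port: 5000            # port on the service's cluster IP
      targetPort: 5000      # port the container listens on
      nodePort: 30500       # must fall within the node port range (30000-32767 by default)
      protocol: UDP
```

Clients would then send datagrams to `<node-ip>:30500` on any node.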

Ingress

An Ingress is actually a completely different resource from a Service. You normally place Ingresses in front of your Services to provide HTTP routing configuration. They let you set up external URLs, domain-based virtual hosts, SSL/TLS termination, and load balancing.

Setting up Ingresses requires an Ingress Controller to exist in your cluster. There's a wide selection of controllers available. Most major cloud providers have their own Ingress Controller that integrates with their load-balancing infrastructure. `nginx-ingress` is a popular standalone option that uses the NGINX web server as a reverse proxy to get traffic to your services.

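You create Ingresses using the `Ingress` resource type. Each Ingress can specify the class of controller that should handle it. Older manifests do this with the `kubernetes.io/ingress.class` annotation; since Kubernetes 1.18, the `spec.ingressClassName` field is the preferred replacement. This is useful if you're running multiple Ingress controllers in your cluster.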

```yaml
apiVersion: networking.k8s.io/v1   # v1beta1 was removed in Kubernetes 1.22
kind: Ingress
metadata:
  name: my-ingress
spec:
  ingressClassName: nginx   # replaces the older kubernetes.io/ingress.class annotation
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
    - host: another-example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: second-service
                port:
                  number: 80
```

This manifest defines two Ingress endpoints. The first `host` rule routes `example.com` traffic to port 80 on the `my-service` service. The second rule configures `another-example.com` to map to pods that are part of `second-service`.
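When a class is selected through `spec.ingressClassName`, the name refers to an IngressClass resource that records which controller implements it. The sketch below defines the `nginx` class used above. It assumes the Kubernetes community's ingress-nginx controller, whose controller identifier is `k8s.io/ingress-nginx`; that controller's standard installation typically creates this object for you, so check before applying your own.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
  annotations:
    # Optional: also use this class for Ingresses that don't specify one
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  # Identifies which controller handles Ingresses of this class
  controller: k8s.io/ingress-nginx
```

With several controllers installed, each gets its own IngressClass, and every Ingress picks one by name.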

You can configure SSL by setting the `tls` field in your Ingress's `spec`:

```yaml
spec:
  tls:
    - hosts:
        - example.com
        - another-example.com
      secretName: example-tls   # hypothetical Secret holding the certificate and key
```

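Each `tls` entry also needs a `secretName` pointing to a Secret that holds the certificate and private key. You can create that Secret yourself, or install a tool such as cert-manager. Once configured with an Issuer, cert-manager can acquire SSL certificates for your domains when your Ingress is created and store them in that Secret.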
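As a minimal sketch of the cert-manager route, the Ingress below asks cert-manager to issue a certificate for `example.com`. It assumes cert-manager is installed and that a ClusterIssuer named `letsencrypt-prod` exists; that name and the Secret name `example-com-tls` are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    # Tells cert-manager which ClusterIssuer to request the certificate from
    cert-manager.io/cluster-issuer: letsencrypt-prod   # hypothetical issuer name
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls   # cert-manager writes the issued certificate here
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```

The Ingress controller then serves HTTPS for `example.com` using whatever certificate is stored in that Secret.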

Ingresses should be used when you want to handle traffic from multiple domains and URL paths. You configure your endpoints using declarative statements. The Ingress controller will provision your routes and map them to services.
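Path-based routing works the same way as host-based routing, just one level down. In this sketch, requests to `example.com/api` go to a hypothetical `api-service` on port 8080, and everything else falls through to `my-service`. When several paths match a request, Kubernetes gives precedence to the longest matching path, so `/api` wins over `/` for API calls.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-routing          # hypothetical name
spec:
  ingressClassName: nginx
  rules:
    - host: example.com
      http:
        paths:
          - path: /api        # matches /api and /api/...
            pathType: Prefix
            backend:
              service:
                name: api-service   # hypothetical service
                port:
                  number: 8080
          - path: /           # catch-all for every other path
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```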

Load Balancers

A final service type is LoadBalancer. On public cloud platforms, these services automatically integrate with the provider's load balancers. You'll need to set up your own load balancer implementation, such as MetalLB, if you're self-hosting your cluster.

Load balancers map external IP addresses to services in your cluster. Unlike Ingresses, there's no HTTP-level filtering or routing: traffic to the external IP and port is sent straight to your service. This means they're suitable for any TCP or UDP traffic, not just HTTP.

The implementation of each load balancer is provider-dependent. Some cloud platforms offer more features than others. Be aware that creating a load balancer will often apply additional charges to your bill.

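```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: my-app
  type: LoadBalancer
  loadBalancerIP: 123.123.123.123   # deprecated since Kubernetes 1.24; support varies by provider
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
```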

Load balancer manifests look similar to the other service types. Traffic to the load balancer `port` that you define will be received by the `targetPort` in your pods.

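Your cloud environment will create a new load balancer to handle your traffic. Some providers allow you to request a specific external IP address using the `spec.loadBalancerIP` field. When this isn't supported, the field is ignored and you'll receive an automatically assigned IP instead. The field has been deprecated since Kubernetes 1.24, and many providers now offer their own annotations for this.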
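Because a load balancer passes traffic straight through, it can front non-HTTP services too. The sketch below exposes a hypothetical PostgreSQL workload (`my-database`) on TCP port 5432. Since databases rarely belong on the open internet, it also sets `spec.loadBalancerSourceRanges` to restrict which client CIDRs may connect; `203.0.113.0/24` is a documentation range standing in for your own network, and whether the field is honoured depends on your provider.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-database             # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: my-database            # hypothetical pod label
  loadBalancerSourceRanges:
    - 203.0.113.0/24            # only these client addresses may connect (provider-dependent)
  ports:
    - name: postgres
      port: 5432                # port on the external load balancer
      targetPort: 5432          # port the database container listens on
      protocol: TCP
```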

Conclusion

ClusterIPs, NodePorts, Ingresses, and load balancers control how traffic reaches the services in your cluster: ClusterIPs handle traffic from inside the cluster, while the other three bring in traffic from outside. Each one is designed for a different use case. Some of your services will make the most sense with a NodePort, while others will need an Ingress, usually when you want to provide a URL.

All four options work in tandem with the broader "service" concept. They're the gatekeepers that enable network access to your services. Those services then handle the last leap into your pods. The two layers of abstraction keep routing fully decoupled from your workloads: your pods simply expose ports, which the services make accessible.