
AWS S3 is Amazon's cloud storage service, allowing you to store individual files as objects in a bucket. You can upload files from the command line on your Linux server, or even sync entire directories to S3.

If you just want to share files between EC2 instances, you can mount a single EFS volume on multiple servers at once and skip uploading and downloading altogether. But you shouldn't use it for everything, because it's much pricier than S3, even with Infrequent Access turned on.

Limit S3 Access to an IAM User

Your server probably doesn't need full root access to your AWS account, so before you do any kind of file syncing, you should make a new IAM user for your server to use. With an IAM user, you can limit your server to only managing your S3 buckets.

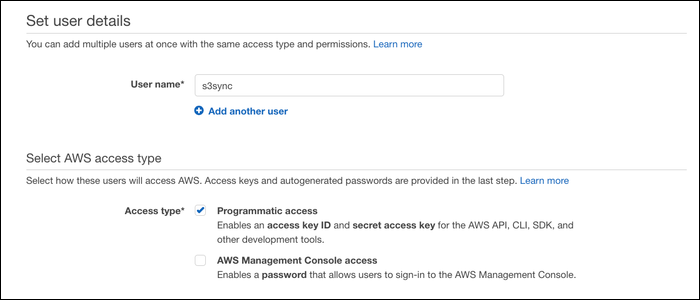

From the IAM Management Console, make a new user, and enable "Programmatic Access."

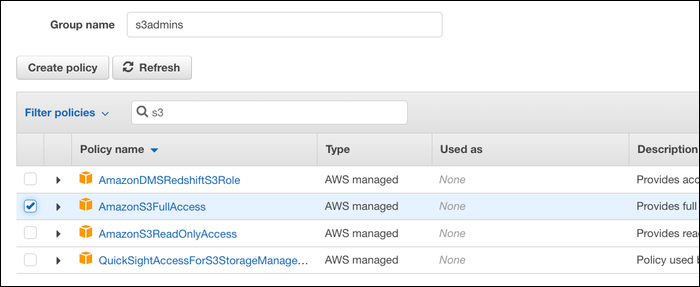

You'll be asked to choose permissions for this user. Make a new group, attach the "AmazonS3FullAccess" policy to it, and add the user to that group.

After that, you'll be given an access key and secret key. Make a note of both: you'll need them to authenticate your server, and the secret key is only shown once.

You can also manually assign more detailed S3 permissions, such as permission to use a specific bucket or only to upload files, but limiting access to just S3 should be fine in most cases.
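If you do want to narrow things down, a policy scoped to a single bucket is a reasonable starting point. The sketch below is a small shell function that prints one. The function name and bucket are placeholders, and the action list is an assumption (list, read, write, and delete objects, where delete is what sync tools need to remove files), so you may need to add actions if a tool reports AccessDenied.

```shell
# bucket_policy: hypothetical helper that prints an IAM policy limited to
# one bucket, as a narrower alternative to AmazonS3FullAccess.
# Usage: bucket_policy my-bucket > policy.json
bucket_policy() {
  bucket="$1"
  # The first statement covers bucket-level actions (the bucket ARN itself);
  # the second covers object-level actions on everything inside it (bucket/*).
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ListTheBucket",
      "Effect": "Allow",
      "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
      "Resource": "arn:aws:s3:::${bucket}"
    },
    {
      "Sid": "ReadWriteObjects",
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}
```

You could paste the output into the JSON editor when creating a policy in the IAM console, or, once the AWS CLI is configured with an account that's allowed to manage IAM, attach it with aws iam put-user-policy --user-name my-server --policy-name my-bucket-only --policy-document file://policy.json (the user and policy names are hypothetical).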

File Syncing With s3cmd

s3cmd is a utility designed to make working with S3 from the command line easier. It's not part of the AWS CLI, so you'll need to install it separately from your distro's package manager. For Debian-based systems like Ubuntu, that would be:

sudo apt-get install s3cmd

Once s3cmd is installed, you'll need to link it to the IAM user you created to manage S3. Run the configuration with:

s3cmd --configure

You'll be asked for the access key and secret key that the IAM Management Console gave you; paste those in here. There are a few more options, such as changing the S3 endpoint or enabling encryption, but you can leave them all at their defaults and answer "y" at the end to save the configuration, which s3cmd writes to ~/.s3cfg.
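If you're setting up several servers, you may prefer to skip the interactive prompts. s3cmd reads its settings from ~/.s3cfg by default, so the sketch below writes a minimal version of that file. It assumes s3cmd's defaults are fine for every option other than the two keys; write_s3cfg is a hypothetical helper name, and it overwrites any existing file at that path.

```shell
# write_s3cfg: hypothetical helper that writes a minimal s3cmd config.
# Only the keys are set; this assumes s3cmd's defaults suit everything else.
# The body runs in a subshell ( ... ) so the umask change doesn't leak out.
write_s3cfg() (
  cfg="$1"
  umask 077                 # create the file readable by the owner only
  cat >"$cfg" <<EOF
[default]
access_key = $2
secret_key = $3
EOF
  chmod 600 "$cfg"          # tighten permissions if the file already existed
)
```

For example, write_s3cfg "$HOME/.s3cfg" "$ACCESS_KEY" "$SECRET_KEY". If you write the file somewhere else, point s3cmd at it with the -c option, as in s3cmd -c /path/to/file ls s3://bucket.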

To upload a file, use:

s3cmd put file s3://bucket

Replace "bucket" with the name of your bucket. To retrieve a file, run:

s3cmd get s3://bucket/remotefile localfile

And, if you want to sync over a whole directory, run:

s3cmd sync directory s3://bucket/

This will copy the entire directory into a folder in S3. The next time you run it, it will only copy the files that have changed since it was last run. It won't delete anything from S3 unless you run it with the --delete-removed option, which removes remote files that no longer exist locally.
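Because --delete-removed deletes objects from S3, it's worth previewing a run first: s3cmd's --dry-run option lists what would be uploaded or deleted without transferring anything. The sketch below wraps both options. s3_mirror and its --for-real argument are hypothetical, and the s3cmd binary is read from $S3CMD (defaulting to s3cmd), so setting S3CMD=echo just prints the command.

```shell
# s3_mirror: hypothetical wrapper around s3cmd sync.
# Without a third argument it only previews (--dry-run); pass --for-real to
# upload changes and delete remote files that no longer exist locally.
s3_mirror() {
  if [ "${3:-}" = "--for-real" ]; then
    "${S3CMD:-s3cmd}" sync --delete-removed "$1" "$2"
  else
    "${S3CMD:-s3cmd}" sync --dry-run --delete-removed "$1" "$2"
  fi
}
```

Run s3_mirror directory s3://bucket/ to see what would change, then s3_mirror directory s3://bucket/ --for-real once the list looks right.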

s3cmd sync won't run automatically, so if you'd like to keep this directory up to date, you'll need to run the command regularly. You can automate this with cron: open your crontab with crontab -e and add this line to the end:

0 0 * * * s3cmd sync directory s3://bucket >/dev/null 2>&1

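This will sync "directory" to "bucket" once a day, at midnight server time. Cron runs jobs from your home directory, so use an absolute path if the directory lives elsewhere. And if crontab -e got you stuck in vim, you can switch editors by running export VISUAL=nano (or whichever editor you prefer) before opening the crontab again.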
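Sending the output to /dev/null means you'll never notice a failed sync. If you'd rather keep a log, and avoid two syncs overlapping when one runs long, a small wrapper can handle both. This is a sketch: the function name, paths, and log location are hypothetical defaults you'd override through the environment, and the lock relies on flock from util-linux (the function skips locking where flock isn't installed).

```shell
# s3_sync_job: hypothetical wrapper for a nightly cron sync.
# The body runs in a subshell ( ... ) so set -eu, the lock descriptor,
# and any exit stay local to this function.
s3_sync_job() (
  set -eu
  # Placeholder defaults; override them via the environment.
  # Use absolute paths: cron starts jobs in your home directory with a minimal PATH.
  SRC="${SRC:-/home/me/directory}"
  DEST="${DEST:-s3://bucket/}"
  LOG="${LOG:-$HOME/s3-sync.log}"
  LOCK="${LOCK:-/tmp/s3-sync.lock}"
  S3CMD="${S3CMD:-/usr/bin/s3cmd}"

  # Hold a lock on descriptor 9 so a slow run can't overlap the next one.
  exec 9>"$LOCK"
  if command -v flock >/dev/null 2>&1; then
    flock -n 9 || exit 0    # previous sync still running: skip this one
  fi

  # Append a timestamp and all sync output to the log instead of discarding it.
  echo "$(date '+%Y-%m-%d %H:%M:%S') starting sync of $SRC" >>"$LOG"
  "$S3CMD" sync "$SRC" "$DEST" >>"$LOG" 2>&1
)
```

Save the function in a file such as ~/bin/s3-sync.sh (a hypothetical path) and use a crontab entry like 0 0 * * * . "$HOME/bin/s3-sync.sh" && s3_sync_job instead of the one-liner.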

s3cmd has a lot of subcommands; you can copy between buckets with cp, move files with mv, and even create and remove buckets from the command line with mb and rb, respectively. Use s3cmd -h for a full list.
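As an illustration of cp and mv, the sketch below keeps a copy of an object in a second bucket and then moves the original under an archive/ prefix in its own bucket. The function, bucket names, and prefix are hypothetical; the s3cmd binary is taken from $S3CMD, so setting S3CMD=echo prints the commands instead of running them.

```shell
# archive_object: hypothetical helper built on s3cmd cp and mv.
# $1 = source bucket, $2 = backup bucket, $3 = object key
# The copy goes to the backup bucket under the same key; the move
# under archive/ only happens if the copy succeeded (&&).
archive_object() {
  "${S3CMD:-s3cmd}" cp "s3://$1/$3" "s3://$2/$3" &&
  "${S3CMD:-s3cmd}" mv "s3://$1/$3" "s3://$1/archive/$3"
}
```

Create the backup bucket first with s3cmd mb s3://logs-backup, then run archive_object logs logs-backup 2024/app.log.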

Another Option: AWS CLI

Beyond s3cmd, there are a few other command line options for syncing files to S3. AWS provides its own tool, the AWS CLI. Installing it with pip requires Python 3 and gives you version 1 of the CLI (AWS also publishes a standalone installer for version 2). To install it from pip3, run:

pip3 install awscli --upgrade --user

This will install the aws command, which you can use to interact with AWS services. With --user, pip places the command in ~/.local/bin, so make sure that directory is on your PATH. You'll need to configure it just as you did s3cmd, which you can do with:

aws configure

You'll be asked to enter the access key and secret key for your IAM user, followed by a default region (use the one your bucket is in) and an output format.
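aws configure stores the keys in ~/.aws/credentials and settings such as the default region in ~/.aws/config, so for scripted setups you can write those files directly. write_aws_profile is a hypothetical helper, the region argument is a placeholder for your bucket's region, and the function overwrites any existing files (including other profiles in them).

```shell
# write_aws_profile: hypothetical helper that writes the default profile
# the same way `aws configure` would. Runs in a subshell so umask stays local.
write_aws_profile() (
  dir="$1"
  umask 077                      # new files and directories: owner only
  mkdir -p "$dir"
  cat >"$dir/credentials" <<EOF
[default]
aws_access_key_id = $2
aws_secret_access_key = $3
EOF
  cat >"$dir/config" <<EOF
[default]
region = $4
EOF
  chmod 600 "$dir/credentials"   # in case the file already existed
)
```

For example, write_aws_profile "$HOME/.aws" "$ACCESS_KEY" "$SECRET_KEY" us-east-1. If you keep the files elsewhere, the CLI can be pointed at them with the AWS_SHARED_CREDENTIALS_FILE and AWS_CONFIG_FILE environment variables.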

The syntax for AWS CLI is similar to s3cmd. To upload a file, use:

aws s3 cp file s3://bucket

To sync a whole folder, use:

aws s3 sync folder s3://bucket

You can copy and even sync between buckets with the same commands. You can use aws help for a full command list, or read the command reference on their website.
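Like s3cmd, aws s3 sync doesn't delete remote files by default. Its --delete option plays the role of s3cmd's --delete-removed, --dryrun previews the changes, and --exclude skips files matching a pattern. In the sketch below, aws_mirror, its --for-real argument, and the *.swp pattern are hypothetical; the aws binary is read from $AWS, so AWS=echo prints the command.

```shell
# aws_mirror: hypothetical wrapper around aws s3 sync.
# Without a third argument it only previews (--dryrun); pass --for-real to
# apply the changes. --delete removes remote files that are gone locally,
# and --exclude skips editor swap files (an example pattern).
aws_mirror() {
  if [ "${3:-}" = "--for-real" ]; then
    "${AWS:-aws}" s3 sync "$1" "$2" --delete --exclude '*.swp'
  else
    "${AWS:-aws}" s3 sync "$1" "$2" --delete --exclude '*.swp' --dryrun
  fi
}
```

The source can also be a bucket, so aws_mirror s3://bucket s3://backup-bucket previews a bucket-to-bucket sync.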

Full Backups: Restic, Duplicity

If you want to do large backups, you may want a dedicated backup tool rather than a simple sync utility. When you sync to S3 with s3cmd or the AWS CLI, your changes overwrite the copies already in the bucket. That's a problem, because the main risk with cloud file storage usually isn't drive failure but accidental deletion or corruption: once a bad change has been synced, there's no revision history to roll back to.

S3 supports object versioning, which partly solves this issue, but you may still want a more capable backup program to manage revisions yourself, especially if you're doing full-drive backups.
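Versioning is off by default and is enabled per bucket. The sketch below turns it on, lists the stored versions under a key, and downloads a specific older version. The function names are hypothetical, and $AWS selects the binary so AWS=echo prints the commands. Keep in mind that old versions are billed as regular storage until you delete them or expire them with a lifecycle rule.

```shell
# Hypothetical helpers around S3 object versioning (AWS CLI s3api commands).
enable_versioning() {   # $1 = bucket
  "${AWS:-aws}" s3api put-bucket-versioning --bucket "$1" \
    --versioning-configuration Status=Enabled
}
list_versions() {       # $1 = bucket, $2 = key or prefix
  "${AWS:-aws}" s3api list-object-versions --bucket "$1" --prefix "$2"
}
restore_version() {     # $1 = bucket, $2 = key, $3 = VersionId, $4 = local file
  "${AWS:-aws}" s3api get-object --bucket "$1" --key "$2" \
    --version-id "$3" "$4"
}
```

Take the VersionId you want from list_versions' output and pass it along, for example restore_version bucket notes.txt VERSION_ID notes.txt.old.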

Duplicity is a simple utility that backs up files as encrypted TAR volumes. The first archive is a complete backup, and every subsequent archive is incremental, storing only the changes made since the previous one.

Incremental archives are very space-efficient, but restoring is slower, because duplicity has to start from the full backup and replay every incremental in the chain to reach the state you want. Restic avoids this by storing data as deduplicated, encrypted blocks and recording a snapshot for each backup. That way, the current state of the files can be restored directly, and every earlier revision is still accessible.
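To make the difference concrete, here is a sketch of backing up and restoring with each tool. It assumes AWS keys are available to both tools (for example via AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY), that the encryption passphrase is in PASSPHRASE for duplicity or RESTIC_PASSWORD for restic, and that your duplicity release accepts boto3+s3:// URLs; the S3 URL syntax has changed between duplicity versions, so check man duplicity for yours. Function names and bucket paths are hypothetical, and $DUPLICITY and $RESTIC select the binaries so they can be set to echo.

```shell
# Hypothetical helpers for duplicity and restic backups to S3.
# Bucket paths look like my-bucket/docs.

# duplicity: the first run is a full backup, later runs are incremental.
# --full-if-older-than 1M starts a new full chain once the last full backup
# is a month old, which caps how many incrementals a restore must replay.
dup_backup() {      # $1 = source dir, $2 = bucket/prefix
  "${DUPLICITY:-duplicity}" --full-if-older-than 1M "$1" "boto3+s3://$2"
}
# Restoring rebuilds the latest state from the full backup plus its incrementals.
dup_restore() {     # $1 = bucket/prefix, $2 = target dir
  "${DUPLICITY:-duplicity}" restore "boto3+s3://$1" "$2"
}

# restic: every backup is a snapshot referencing deduplicated, encrypted blocks.
restic_backup() {   # $1 = source dir, $2 = bucket/prefix
  "${RESTIC:-restic}" -r "s3:s3.amazonaws.com/$2" backup "$1"
}
# Any snapshot can be restored directly; "latest" selects the newest one.
restic_restore() {  # $1 = bucket/prefix, $2 = target dir
  "${RESTIC:-restic}" -r "s3:s3.amazonaws.com/$1" restore latest --target "$2"
}
```

A restic repository has to be created once before the first backup, with restic -r s3:s3.amazonaws.com/my-bucket/restic init, and restic -r s3:s3.amazonaws.com/my-bucket/restic snapshots lists what's available if you need something older than the latest snapshot. Duplicity may refuse to restore into a directory that already exists, so give dup_restore a fresh path.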

Both tools can be configured to work with AWS S3, as well as many other storage providers. Alternatively, if you just want to back up EBS-backed EC2 instances, you can use incremental EBS snapshots, though they cost more than backing up to S3 yourself.
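For comparison, an EBS snapshot can be taken from the command line with the AWS CLI. This assumes the credentials you use have the ec2:CreateSnapshot permission, which an S3-only IAM user does not. snapshot_volume and the volume ID are placeholders, and $AWS selects the binary so AWS=echo prints the command.

```shell
# snapshot_volume: hypothetical helper that snapshots one EBS volume.
# EBS snapshots are incremental, so after the first one only changed
# blocks are stored.
snapshot_volume() {   # $1 = volume ID, e.g. vol-0123456789abcdef0
  "${AWS:-aws}" ec2 create-snapshot --volume-id "$1" \
    --description "nightly backup $(date +%Y-%m-%d)"
}
```

You could schedule it from cron the same way as the s3cmd sync job.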