Setting up database backups is one of the most important jobs of a database administrator. Database-as-a-Service platforms like RDS support automatic backups out of the box, but if you're running your own server, you'll need to set up backups yourself.

What Is The Best Backup Strategy?

The most headache-free backup strategy is to use a fully managed Database-as-a-Service and configure automatic backups from its control panel. This includes services like AWS RDS and DocumentDB, as well as MongoDB Atlas, all of which support automatic backups to S3. However, not everyone uses those services, and if you don't, you'll need to handle backups yourself.

If you'd rather not handle backups yourself but still need to run the database on your own hardware, you can try MongoDB's Cloud Manager, which costs $40 per month per server and supports automatic backups as well as operations monitoring. This is by far the best option for sharded clusters and replica sets, so if you're running anything more than a single database, you'll want to set this up.

However, nothing beats free, and you can of course set up backups yourself with a simple cron job. You have two options: back up the underlying files with a filesystem snapshot, or run mongodump. Both are valid methods, and both can be done on a running database, so the choice is up to you. We'll be going with mongodump as it's much simpler, but if you have a very large database, you may want to use filesystem snapshots instead.

Setting Up mongodump and S3

First, you'll need the AWS CLI installed and configured with an IAM account that can access the target bucket. You'll also need to create a bucket that will house the backups.
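As a rough sketch of what "can access the target bucket" might mean in practice, the snippet below writes a minimal IAM policy that only allows uploads into the bucket. The file name backup-policy.json is hypothetical, and the bucket name is an assumption that matches the BUCKET value used in the script in this article. Your setup may need more permissions, for example if you also want to list or download backups with the same account.

```shell
#!/bin/bash
# Assumed bucket name; replace with your own
BUCKET=backups

# Write a least-privilege policy document (hypothetical file name)
# that only allows uploading objects into the backup bucket
cat > backup-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::${BUCKET}/*"
    }
  ]
}
EOF

# Show the generated policy for review
cat backup-policy.json
```

You could then create the bucket with `aws s3 mb s3://backups` and attach the policy to the IAM user from the console, or with `aws iam put-user-policy` and its `--policy-document file://backup-policy.json` option.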

Then, copy this script onto the server:

#!/bin/bash

# Exit on the first failed command so a failed dump is never uploaded

set -e

export HOME=/home/ubuntu/

HOST=localhost

# DB name

DBNAME=database

# S3 bucket name

BUCKET=backups

# Linux user account

USER=ubuntu

# Current time (year first so file names sort by date, no colons)

TIME=$(/bin/date +%Y-%m-%d-%H%M%S)

# Backup directory

DEST=/home/$USER/tmp

# Tar file of backup directory

TAR=$DEST/../$TIME.tar

# Create backup dir (-p to avoid warning if already exists)

/bin/mkdir -p $DEST

# Log

echo "Backing up $HOST/$DBNAME to s3://$BUCKET/ on $TIME";

# Dump from mongodb host into backup directory

/usr/bin/mongodump -h $HOST -d $DBNAME -o $DEST

# Create tar of backup directory

/bin/tar cvf $TAR -C $DEST .

# Upload tar to s3

/usr/bin/aws s3 cp $TAR s3://$BUCKET/ --storage-class STANDARD_IA

# Remove tar file locally

/bin/rm -f $TAR

# Remove backup directory

/bin/rm -rf $DEST

# All done

echo "Backup available at https://s3.amazonaws.com/$BUCKET/$TIME.tar"

The script sets several variables, including HOME for cron compatibility, along with the database and bucket settings. It then creates a ~/tmp folder to hold the dump and runs mongodump on the target database. Because mongodump saves separate files for each collection, the script bundles the directory into a single tar file, then uploads that file to the given S3 bucket in the Infrequent Access tier, which lowers storage costs for data that is rarely read. Keep in mind that Infrequent Access bills each object for a minimum of 30 days, so it saves money only if you keep backups at least that long.
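To see what the tar step does without touching a real database, here is a small, self-contained sketch. It fakes the folder layout that mongodump produces (one folder per database, with a .bson and a .metadata.json file per collection), archives it with the same `-C` option the script uses, and lists the result. The collection name users is made up for illustration, and the use of mktemp with a cleanup trap is a variation on the script's ~/tmp folder, not what the script itself does.

```shell
#!/bin/bash
set -euo pipefail

# Scratch directory that is removed on exit, even if a command fails
WORKDIR=$(mktemp -d)
trap 'rm -rf "$WORKDIR"' EXIT

# Stand-in for mongodump output: one folder per database,
# with a .bson and a .metadata.json file per collection
mkdir -p "$WORKDIR/dump/database"
touch "$WORKDIR/dump/database/users.bson" \
      "$WORKDIR/dump/database/users.metadata.json"

# -C switches into the dump folder first, so the archive stores
# relative paths instead of the full scratch directory prefix
tar cf "$WORKDIR/backup.tar" -C "$WORKDIR/dump" .

# List the archive contents to confirm what would be uploaded
LISTING=$(tar tf "$WORKDIR/backup.tar")
echo "$LISTING"
```

The listing should contain relative entries for the database folder and both collection files, which is the layout you get back when extracting a backup.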

If you run this script, you should see a new backup tar in the target bucket. If it works, open up your crontab with:

crontab -e

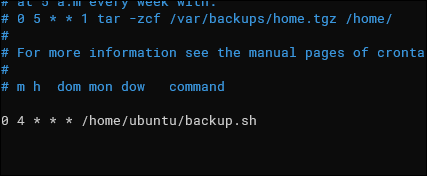

Then add a new line that runs this script.

You'll likely want to set the schedule to * * * * * at first so the script runs every minute, just to make sure it works from cron. Once you've confirmed it does, change it to something more reasonable, like once or twice per day. You can use an online cron scheduler to check that your schedules are in order.
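For illustration, the crontab entries might look something like the following. The script path /home/ubuntu/mongo-backup.sh and the log file path are hypothetical, so substitute wherever you saved the script, and make sure the script is executable (`chmod +x`). The 03:00 schedule is only an example.

```text
# minute hour day-of-month month day-of-week  command

# Test schedule: every minute (uncomment while testing, then remove)
# * * * * * /home/ubuntu/mongo-backup.sh >> /home/ubuntu/mongo-backup.log 2>&1

# Regular schedule: once a day at 03:00
0 3 * * * /home/ubuntu/mongo-backup.sh >> /home/ubuntu/mongo-backup.log 2>&1
```

Redirecting output to a log file is optional, but it keeps the script's echo lines and any errors somewhere you can check later.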

Setting Up An S3 Lifecycle

Since these backups are just dumps of the database, you probably don't want to store more than a week or two's worth of them, as they'll otherwise fill up your S3 bucket quickly.

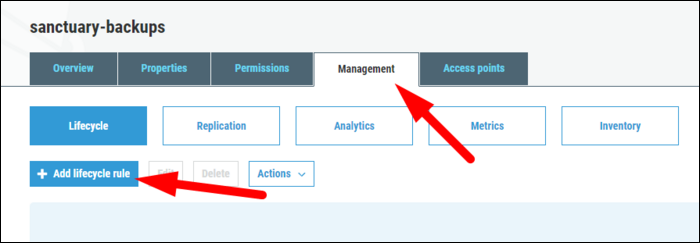

S3 can rotate old objects out of a bucket with lifecycle rules. From the bucket settings, click "Management" and "Add Lifecycle Rule."

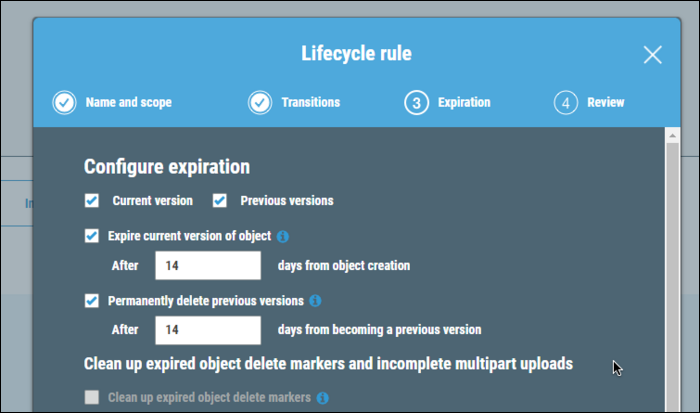

Set it to apply to all objects in the bucket, and give it a name. Skip over Transitions.

Under Expiration, you can configure it to delete objects after a certain number of days in the bucket. You can also delete previous versions of objects, but since the script is timestamping the backups, it's not necessary.

Click create, and the policy will be in effect.
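If you'd rather script this than click through the console, a roughly equivalent rule can be expressed as a JSON document. The sketch below writes one that expires every object after 14 days. The rule ID, the file name lifecycle.json, and the 14-day retention are all assumptions chosen for illustration.

```shell
#!/bin/bash
# Assumed retention period in days; adjust to suit
DAYS=14

# Write a lifecycle configuration (hypothetical file name) with a
# single rule: an empty prefix matches every object in the bucket
cat > lifecycle.json <<EOF
{
  "Rules": [
    {
      "ID": "expire-old-backups",
      "Status": "Enabled",
      "Filter": { "Prefix": "" },
      "Expiration": { "Days": ${DAYS} }
    }
  ]
}
EOF

# Show the generated configuration for review
cat lifecycle.json
```

To apply it, you could run `aws s3api put-bucket-lifecycle-configuration --bucket backups --lifecycle-configuration file://lifecycle.json`, using your own bucket name. Note that this call replaces any lifecycle configuration already on the bucket, and the IAM account running it needs permission to change the bucket's lifecycle settings.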