Docker is a developer-oriented containerization platform that lets you package applications as standalone containers. They'll run anywhere a compatible container runtime is available.

Docker's popularity has made it almost synonymous with containers, yet it's not the perfect technology for all use cases. Using Docker as-is presents challenges in production as its CLI is only equipped to manage individual containers.

Kubernetes is an orchestration platform that manages stacks of containers and scales them across multiple servers. You can deploy across a fleet of physical machines, improving your service's redundancy and resiliency. Here's how you can start your own cluster to scale your "Docker" containers.

Kubernetes Basics

Recognizing some key Kubernetes terms will help you understand the differences compared to Docker. The Kubernetes dictionary describes dozens of resources you can add to your cluster. Only the components which control container scheduling are relevant to this article.

At a high level, a Kubernetes installation is a cluster of "nodes." Nodes are independent physical or virtual machines that host your workloads. A single "master" node is responsible for coordinating ("orchestrating") the cluster's operations by "scheduling" new containers to the most appropriate worker node.

Here are some critical terms:

- Master - The master node operates the cluster. This is the machine you install Kubernetes on first. It runs the control plane and delegates the hosting of containerized apps to worker nodes.

- Control Plane - The control plane is the software component of the master node. It incorporates several services, including an API server, configuration store, and container scheduler.

- Node - A node is a machine that hosts your containers. Each worker runs a Kubernetes component called Kubelet. This stays in contact with the control plane, receiving scheduling instructions which it acts on to provision new containers.

- Pod - A Pod is the smallest compute unit in a Kubernetes cluster, representing a group of one or more containers that are scheduled together. The Pod abstraction lets you interact with multiple running containers in aggregate.

- Replica Set - Replica sets are responsible for scaling Pods to ensure a specified number of replicas are available. If you ask for three replicas of a Pod, the replica set will guarantee the availability constraint is met. Pods are automatically replaced if they fail, letting you automate container replication across servers.

Creating a scalable cluster requires a master node, at least two worker nodes, and a deployment that provisions multiple replicas. Kubernetes will be able to schedule your Pods across all the available nodes, giving you resilience in the event one suffers an outage.
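The replica set behavior described above can be pictured as a loop that compares the desired count with what is actually running. The following is a toy model only, not Kubernetes' real controller code; the `Pod` class, the `reconcile` function, and the `web-N` names are hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Pod:
    name: str
    healthy: bool = True


# Hypothetical name generator so every replacement Pod gets a fresh name.
_ids = count(1)


def reconcile(pods: list[Pod], desired: int) -> list[Pod]:
    """Return a new Pod list that matches the desired replica count."""
    # Drop failed Pods; a real controller would delete them via the API server.
    alive = [p for p in pods if p.healthy]
    # Create replacements until the desired count is reached.
    while len(alive) < desired:
        alive.append(Pod(name=f"web-{next(_ids)}"))
    # Trim surplus Pods if the desired count was lowered.
    return alive[:desired]


pods = reconcile([], desired=3)
pods[1].healthy = False  # simulate a crashed container
pods = reconcile(pods, desired=3)
print([p.name for p in pods])  # ['web-1', 'web-3', 'web-4']
```

The failed Pod is replaced rather than repaired, which mirrors how Kubernetes treats Pods as disposable.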

Creating Your Cluster

Kubernetes is available as a managed offering from most major cloud providers. These provide a one-click way to create your control plane and add a variable number of worker nodes.

You can run a cluster on your own hardware using a self-contained Kubernetes distribution like MicroK8s. You'll need at least two isolated physical or virtual machines if you want to have redundant scheduling support.

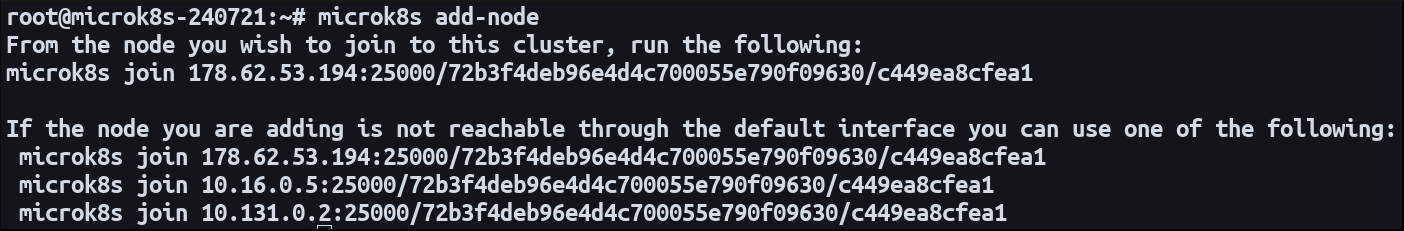

Install MicroK8s on both of the machines. Designate one node as the master and use the add-node command to start the node registration process:

microk8s add-node

This will emit a microk8s join command. Switch to your secondary node and run the command. It will join the first machine's cluster as a new node. Now both machines are ready to host your containerized workloads.

Defining a Horizontally Scaling Deployment

An application that runs across multiple servers is described as "horizontally scaled." It's spreading itself out across several distinct environments. Vertically scaling a system involves adding resources to an existing environment.

The simplest way to define a Kubernetes workload is with a Deployment. This resource type creates Pods from a container image and keeps the requested number of them running. Networking is handled separately by a Service resource. Deployments default to a single Pod instance but can be configured with multiple replicas.

Here's a simple Deployment manifest:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80

Apply the manifest to your cluster using kubectl:

microk8s kubectl apply -f ./manifest.yaml

Kubernetes will create three Pods, each hosting an NGINX web server created from the nginx:latest image. Port 80 is declared as the container port, documenting where NGINX listens for inbound traffic.

Pods will automatically be distributed across your cluster's nodes. A node will be eligible to host a Pod if it can provide sufficient resources.
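To make the eligibility rule concrete, here is a simplified sketch of placement by resource fit. It is a toy model, not the real Kubernetes scheduler, which weighs many more factors; the `schedule` function, the node names, and the CPU figures are all assumptions made for illustration.

```python
def schedule(pods, nodes):
    """Assign each Pod to the eligible node with the most free CPU.

    pods:  {pod_name: cpu_request_in_millicores}
    nodes: {node_name: free_cpu_in_millicores}
    Returns ({pod_name: node_name}, [unschedulable_pod_names]).
    """
    free = dict(nodes)  # copy so the caller's data is left untouched
    placed, pending = {}, []
    for pod, request in pods.items():
        # Filter: keep only nodes with enough spare capacity.
        eligible = [n for n, cpu in free.items() if cpu >= request]
        if not eligible:
            pending.append(pod)  # waits until capacity appears
            continue
        # Score: prefer the emptiest node, which spreads Pods out.
        target = max(eligible, key=lambda n: free[n])
        free[target] -= request
        placed[pod] = target
    return placed, pending


placed, pending = schedule(
    {"nginx-1": 500, "nginx-2": 500, "nginx-3": 500},
    {"node-a": 1000, "node-b": 800},
)
print(placed)   # {'nginx-1': 'node-a', 'nginx-2': 'node-b', 'nginx-3': 'node-a'}
print(pending)  # []
```

A Pod that requests more than any node can offer ends up in the pending list, which is the situation cluster auto-scaling is designed to resolve.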

You can update the number of replicas at any time. Change the replicas field in your manifest and re-apply it to your cluster. Kubernetes will provision new Pods or terminate old ones as required, and the scheduler will assign any new Pods to nodes. If you scale replicas down to 0, you can take your application offline without actually deleting the deployment or its associated resources.
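If you change the replica count often, you could generate the manifest instead of editing it by hand. The sketch below rebuilds the NGINX Deployment from this article as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The `nginx_deployment` function and the script name used afterwards are hypothetical.

```python
import json


def nginx_deployment(replicas: int) -> dict:
    """Build the article's NGINX Deployment with a chosen replica count."""
    labels = {"app": "nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx"},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "nginx",
                        "image": "nginx:latest",
                        "ports": [{"containerPort": 80}],
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    # Emit JSON on stdout so it can be piped straight into kubectl.
    print(json.dumps(nginx_deployment(replicas=5), indent=2))
```

Assuming the script is saved as scale.py, running python scale.py | microk8s kubectl apply -f - would apply the generated manifest, with the trailing dash telling kubectl to read from standard input.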

Dynamically Adding Nodes

Updating the replica count lets you utilize the existing resources within your cluster. Eventually, you might exhaust your combined node capacity altogether, preventing new Pods from being scheduled. Kubernetes offers a cluster auto-scaling facility that can create and destroy nodes, effectively changing the number of Pods that can be scheduled.

Setting up auto-scaling is relatively involved. The exact process is dependent on your cluster's hosting environment. It requires integration with a Cluster Autoscaler capable of hooking into your host to detect changes in demand. Official documentation is available for Google Cloud; solutions are also available from Amazon EKS and Microsoft AKS.

Auto-scaling works by maintaining a constant check for Pods that can't be scheduled due to insufficient cluster capacity. It also assesses whether the successfully scheduled Pods could actually be colocated with a reduced node count. The auto-scaler then utilizes your cloud provider's API to add and remove compute instances, dynamically adjusting your cluster's resources. This may impact your bill as creating a new instance is usually a chargeable operation.
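The decision described above can be sketched as a single function. This is a deliberately simplified model and not the Cluster Autoscaler's actual algorithm: it treats CPU as the only resource, assumes identical nodes, and ignores the fact that individual Pods can't be split across machines. The `autoscale` function and its parameters are hypothetical.

```python
import math


def autoscale(node_count, node_cpu, scheduled_cpu, pending_cpu,
              min_nodes=1, max_nodes=10):
    """Return the node count a simple autoscaler would aim for.

    All CPU figures are millicores. node_cpu is the capacity of one node,
    scheduled_cpu the total requested by running Pods, and pending_cpu the
    total requested by Pods that could not be scheduled.
    """
    if pending_cpu > 0:
        # Scale up: add enough nodes to host the Pods that are waiting.
        target = node_count + math.ceil(pending_cpu / node_cpu)
    else:
        # Scale down: keep only as many nodes as the running Pods need.
        target = min(node_count, math.ceil(scheduled_cpu / node_cpu))
    # Never leave the configured bounds, which also caps the bill.
    return max(min_nodes, min(max_nodes, target))


print(autoscale(2, 1000, scheduled_cpu=1800, pending_cpu=1500))  # 4
print(autoscale(4, 1000, scheduled_cpu=1200, pending_cpu=0))     # 2
```

The upper bound matters in practice because each extra node is typically a billable compute instance.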

Summary

Kubernetes makes it easy to distribute container instances across multiple servers. Register your machines as nodes, or use a managed cloud cluster, then create Deployments that have the replicas field set. You can scale your workload by updating the requested replicas count.

When severe changes in demand are anticipated, use auto-scaling to dynamically expand capacity. This feature lets you create new Nodes on the fly, adding extra resources so you can keep raising your replica count.

When you're scaling Pods, remember that some form of inbound traffic distribution is also required. If you're exposing port 80, Kubernetes needs to be able to map requests to port 80 onto any of the Pods, whichever node they run on. This is the job of a Service, which you create alongside your Deployment. The Service tracks the Pods matching its selector and load balances traffic across the ones that are ready to receive it.
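As a rough illustration, a Service for the NGINX Deployment in this article might look like the one generated below. This is a sketch under assumptions rather than a recommended configuration: the `nginx_service` function is hypothetical, and the LoadBalancer type only yields an external address on clusters with a load balancer integration, so NodePort may be the better fit on a bare MicroK8s install.

```python
import json


def nginx_service(service_type: str = "LoadBalancer") -> dict:
    """Build a Service that routes port 80 to Pods labelled app=nginx."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "nginx"},
        "spec": {
            "type": service_type,
            # Must match the labels set in the Deployment's Pod template.
            "selector": {"app": "nginx"},
            # port is what clients connect to; targetPort is the container port.
            "ports": [{"port": 80, "targetPort": 80}],
        },
    }


if __name__ == "__main__":
    # Emit JSON on stdout so it can be piped into kubectl apply -f -.
    print(json.dumps(nginx_service("NodePort"), indent=2))
```

Because the selector works on labels, the Service keeps routing correctly as the replica count changes.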