Whether you're upgrading to more powerful servers, moving to new regions, or adding new instances, migrating a Linux server can be made easier by implementing the proper strategies and knowing the right commands. We'll discuss how to move your server to a new machine with minimal hassle.

Migration Strategies

The simplest and most effective strategy is a blue-green deployment. You bring the new server up alongside the old one and switch traffic over once it's production-ready. Then, after you've verified there are no issues, you remove the old server. With a load balancer, you can shift traffic incrementally, which further reduces the risk of availability problems.

A blue-green deployment involves copying all the files, packages, and code from the old server to the new one. This can be as simple as manually installing the necessary packages, such as the NGINX web server, and then copying over the configuration from the existing server. You can also take a full disk backup and create the new server from that.

This is also a good time to consider whether you can use containers or auto-scaling. Docker containers are easy to stop, start, and migrate: you copy the underlying data volumes, or keep the data on a shared store like Amazon EFS. Auto-scaling works differently on each provider, but if you're adding copies of your server to meet growing demand, it may be right for your business. Many platforms, such as AWS ECS, also let you auto-scale Docker containers.
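As a rough illustration of the volume-copying approach, the sketch below archives a named Docker volume through a throwaway container and then unpacks it into a volume on the new host. It is a minimal sketch, not a complete tool. The volume name (app-data in the example) and the alpine helper image are assumptions. Stop any containers that use the volume first so the copy is consistent.

```shell
# Minimal sketch: move a named Docker volume between hosts as a tarball.
# The volume name and the "alpine" helper image are assumptions.

# On the old server: write the volume's contents to ./<volume>.tar.gz
backup_volume() {
  local vol=$1
  docker run --rm -v "$vol":/data -v "$PWD":/backup alpine \
    tar -czf "/backup/$vol.tar.gz" -C /data .
}

# On the new server: create the volume and unpack ./<volume>.tar.gz into it
restore_volume() {
  local vol=$1
  docker volume create "$vol" >/dev/null
  docker run --rm -v "$vol":/data -v "$PWD":/backup alpine \
    tar -xzf "/backup/$vol.tar.gz" -C /data
}

# Example (hypothetical volume name):
#   backup_volume app-data            # on the old server
#   scp app-data.tar.gz username@remote_host:~
#   restore_volume app-data           # on the new server, run from ~
```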

Setting up containers or auto-scaling involves much of the same work as migrating a server by hand, such as automating the installation of packages and your own code. If you expect to migrate again in the future, consider now whether you'd be better off switching to containers or setting up auto-scaling.

If you're interested in containers, you can read our guide to getting started with Docker to learn more. For auto-scaling, read our guide to using auto-scaling on AWS or Google Cloud Platform.

Installing Packages

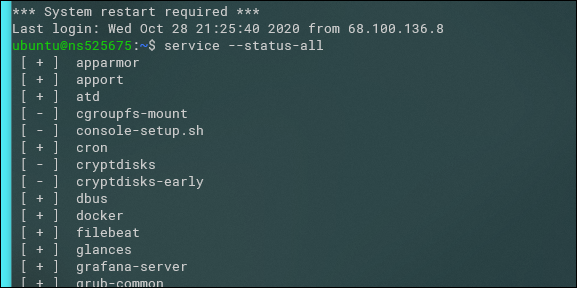

If you're not quite sure what's installed on the old server, a good place to start is a list of all its services. This shows most of the major software you'll need to install:

service --status-all

Listing services is more useful than listing packages because the package list can be very long and includes every minor dependency. My Ubuntu test server had over 72000 packages installed, so the full list isn't very helpful. The dependencies will be installed automatically anyway when you install the major services the new server needs.
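To narrow that output down to what's actually running, you can filter it. This small sketch assumes the usual "[ + ]" / "[ - ]" / "[ ? ]" output format of service --status-all. On systemd-based systems, systemctl list-units --type=service --state=running gives a similar list directly. The services.txt file name is just an example.

```shell
# Keep only the services that `service --status-all` marks as running.
# Each input line looks like " [ + ]  nginx": field 2 is +, - or ?,
# and field 4 is the service name.
running_services() {
  awk '$2 == "+" { print $4 }'
}

# Example (on the old server):
#   service --status-all 2>/dev/null | running_services > services.txt
```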

If you want, you can list all installed packages with the following command:

sudo apt list --installed

To search the package list for a specific package, you can use:

sudo apt -qq list program_name --installed

Either way, you'll want to make a list of the packages you need to install, and install them on the new server.
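On Debian or Ubuntu, one way to build that list is apt-mark showmanual, which prints only the packages that were explicitly installed rather than the ones pulled in as dependencies. The sketch below assumes you've saved that output on both servers (the file names are hypothetical) and copied both files to one machine. It then prints what the new server is still missing. Package names can differ between distribution releases, so review the list before installing from it.

```shell
# On each server, first run:  apt-mark showmanual > packages-<name>.txt
# Print packages listed in $1 (old server) but missing from $2 (new server).
missing_packages() {
  local new_sorted
  new_sorted=$(mktemp)
  # comm needs both inputs sorted the same way, so force the C locale
  LC_ALL=C sort -u "$2" > "$new_sorted"
  LC_ALL=C sort -u "$1" | LC_ALL=C comm -23 - "$new_sorted"
  rm -f "$new_sorted"
}

# Then, on the new server:
#   missing_packages packages-old.txt packages-new.txt | xargs -r sudo apt-get install -y
```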

Transferring The Server's Disk With rsync

You could archive the disk with tar, but tar is better suited to specific files or directories than to an entire disk. It also needs local space to write the archive. If you're moving a lot of data, you may not have enough space to make a backup locally (perhaps that's even the reason for the upgrade!).

In this case, you'll want to use the rsync command to upload the data directly to the target server. rsync connects over SSH and synchronizes the two directories. Here, we want to push a local directory to the remote server. Note the trailing slashes: they tell rsync to copy the contents of /etc/nginx into /etc/nginx on the target, rather than creating /etc/nginx/nginx:

rsync -azAP /etc/nginx/ username@remote_host:/etc/nginx/

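That's the whole command. You'll see progress output as it transfers (-P), with the data compressed in transit (-z). When it's done, the files will be in the target directory on the new server. The -a flag preserves permissions, timestamps, and symlinks, and -A preserves ACLs. The remote user needs write access to the target directory, which for /etc/nginx usually means root. You may have to run this once for each directory you want to copy; you can use this online rsync command generator to build the command for each run.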
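If you have several directories to copy, a small loop saves some typing. This is a sketch rather than a polished tool. It assumes the remote user can run rsync through sudo without a password prompt, which is what --rsync-path="sudo rsync" relies on, and which is needed to write under /etc. The directory list in the example is hypothetical. The loop always adds exactly one trailing slash to the source, so each directory's contents land in the same path on the new server.

```shell
# Push each directory to the same path on the remote host.
# Assumes passwordless sudo for rsync on the remote side (hypothetical setup).
sync_dirs() {
  local host=$1 dir
  shift
  for dir in "$@"; do
    dir=${dir%/}   # strip any trailing slash; exactly one is added below
    # The trailing slash on the source copies the directory's contents,
    # so /etc/nginx/ lands in /etc/nginx/ rather than /etc/nginx/nginx/.
    rsync -azAP --rsync-path="sudo rsync" "$dir/" "$host:$dir/" || return 1
  done
}

# Example:
#   sync_dirs username@remote_host /etc/nginx /var/www
```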

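If you want, you can try copying the entire root filesystem to the new server, excluding some system directories. This needs root on both ends. Be careful: it also overwrites machine-specific files on the target, such as /etc/fstab and the network configuration, which can leave the new server unbootable or unreachable: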

sudo rsync -azAXHP / --exclude={"/dev/*","/proc/*","/sys/*","/tmp/*","/run/*","/mnt/*","/media/*","/lost+found"} username@remote_host:/

If you're only backing up a few directories, you can instead use a simple tar command to create a single-file archive:

tar -czvf nginxconfig.tar.gz /etc/nginx

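This outputs one file that you can transfer to the target server with scp or over FTP. tar strips the leading slash when it creates the archive and stores paths like etc/nginx/nginx.conf. To put the files back in /etc/nginx, extract the archive relative to the root directory, as root:

sudo tar -xzvf nginxconfig.tar.gz -C /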
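The same round trip can be wrapped in two small functions. This sketch uses tar's -C option to store paths relative to / (for example, etc/nginx/nginx.conf) and to extract them relative to / again. That way, the files end up back in /etc/nginx rather than in a nested copy. The optional root argument is an addition for illustration, so you can try the functions in a scratch directory first.

```shell
# Archive a directory with member paths relative to ROOT (default /).
pack_dir() {
  local archive=$1 dir=$2 root=${3:-/}
  tar -czf "$archive" -C "$root" "${dir#/}"
}

# Extract an archive relative to ROOT (default /), restoring the original paths.
unpack_archive() {
  local archive=$1 root=${2:-/}
  tar -xzf "$archive" -C "$root"
}

# Example:
#   pack_dir nginxconfig.tar.gz /etc/nginx                  # old server
#   scp nginxconfig.tar.gz username@remote_host:/tmp/
#   sudo tar -xzf /tmp/nginxconfig.tar.gz -C /              # new server
```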

Transferring a Database

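If you need to transfer a database, you'll first need to dump the source database to a file. For MySQL, that would be the command below. Using -p on its own prompts for the password, which keeps it out of your shell history. The --single-transaction flag takes a consistent snapshot of InnoDB tables without locking them:

mysqldump -u [user] -p -h [host] --single-transaction [database_name] > [filename].sql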

For MongoDB, that would be the following. By default, mongodump writes to a dump/ directory in the current working directory:

mongodump --host=mongodb.example.net --port=27017

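Then you'll need to restore the database on the target server. For MySQL, first create an empty database with the same name, since a dump of a single database doesn't include a CREATE DATABASE statement. Then run: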

mysql -u [user] -p [database_name] < [filename].sql

For MongoDB, that would be:

mongorestore <options> <connection-string> <directory or file to restore>

For other databases, check their documentation for the equivalent dump and restore tools (for example, pg_dump and pg_restore for PostgreSQL).
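Before restoring a MySQL dump, it's worth checking that the file isn't truncated, for example by a dropped SSH session. By default, mysqldump ends its output with a "-- Dump completed" comment line, so this sketch only restores the file if that footer is present. It assumes the dump was made without --skip-comments. The restore_dump helper name is hypothetical, and mysql will prompt for the password as in the restore command above.

```shell
# Succeed only if the dump file ends with mysqldump's completion comment.
dump_complete() {
  tail -n 1 "$1" | grep -q '^-- Dump completed'
}

# Restore a dump into an existing database, refusing incomplete files.
restore_dump() {
  local file=$1 db=$2 user=$3
  if ! dump_complete "$file"; then
    echo "refusing to restore $file: dump looks incomplete" >&2
    return 1
  fi
  mysql -u "$user" -p "$db" < "$file"
}

# Example (hypothetical names):
#   restore_dump backup.sql appdb appuser
```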

Switching IPs To The New System

Before proceeding, verify that everything on the new server works as intended. Once it does, you'll want to switch traffic over to the new server.
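One simple check is to poll a health endpoint on the new server until it answers successfully. This is a minimal sketch. The /health URL, the number of attempts, and the delay are assumptions to adapt to your application. It only confirms that the server returns a non-error HTTP status, not that every feature works.

```shell
# Poll URL until curl succeeds, up to TRIES attempts, DELAY seconds apart.
wait_healthy() {
  local url=$1 tries=${2:-30} delay=${3:-2} i=1
  while [ "$i" -le "$tries" ]; do
    # -f: treat HTTP 4xx/5xx as failure; -sS: no progress meter, but show errors
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    if [ "$i" -lt "$tries" ]; then sleep "$delay"; fi
    i=$((i + 1))
  done
  echo "no healthy response from $url after $tries attempts" >&2
  return 1
}

# Example (hypothetical URL):
#   wait_healthy http://new-server.example.com/health && echo "ready to switch"
```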

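The simplest way to do this is to change your DNS records. Once the change propagates, clients and services will be sent to the new server. You don't control the pace of that switch, though. Resolvers keep using the old address until their cached record expires, which can take as long as the record's TTL, so it helps to lower the TTL well ahead of the migration. If you have a load balancer, it's better to shift traffic to the new instance gradually.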
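After changing the record, you can check what your resolver returns with dig. This sketch assumes dig is installed (it ships in the dnsutils or bind-utils package on most distributions), and the host name and address in the example are hypothetical. Keep in mind that the result reflects your own resolver's cache. Running dig +noall +answer www.example.com A also shows the TTL in seconds in the second column.

```shell
# Succeed if HOST's A records (as seen by your resolver) include IP.
resolves_to() {
  local host=$1 ip=$2
  # +short prints one answer per line; -x matches whole lines, -F literally
  dig +short "$host" A | grep -qxF "$ip"
}

# Example (hypothetical name and address):
#   resolves_to www.example.com 203.0.113.10 && echo "DNS points at the new server"
```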

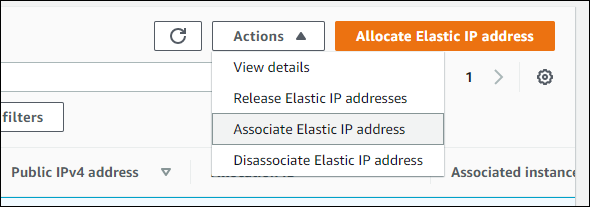

If you're on AWS, or a similar provider with Elastic IP addresses, you can move the address to the new server, which doesn't require a DNS update. In the EC2 console, open the Elastic IPs page, select the address, and choose Actions > Associate Elastic IP address.

From there, you can associate the address with the new instance, which swaps traffic over to it almost immediately.
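If you'd rather script the swap, the AWS CLI has an equivalent command, aws ec2 associate-address. This sketch assumes the CLI is installed and configured with credentials that can manage Elastic IPs. The allocation and instance IDs in the example are placeholders.

```shell
# Point an Elastic IP (by allocation ID) at a different EC2 instance.
move_elastic_ip() {
  local allocation_id=$1 instance_id=$2
  aws ec2 associate-address \
    --allocation-id "$allocation_id" \
    --instance-id "$instance_id" \
    --allow-reassociation
}

# Example (placeholder IDs):
#   move_elastic_ip eipalloc-0123456789abcdef0 i-0123456789abcdef0
```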