
Sorting a log file by a specific column is useful for finding information quickly. Logs are usually stored as plain text, so you can use command-line text manipulation tools to process them and view them in a more readable form.

Extracting Columns with cut and awk

The cut and awk utilities are two different ways to extract a column of information from text files. The examples below assume your log files are space-delimited, for example:

column column column

This presents an issue if the data within a column contains spaces, such as dates ("Wed Jun 12"). Although cut sees a date like this as three separate columns, you can still extract all three at once, provided the structure of your log file is consistent.

cut is very simple to use:

cat system.log | cut -d ' ' -f 1-6

The cat command reads the contents of system.log and pipes it to cut. The -d flag specifies the delimiter, in this case a single space. (The default is the tab character, \t.) The -f flag specifies which fields to output; this command prints the first six columns of system.log. To print only the third column, you'd use -f 3 instead.
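To try this without a real log, here is a minimal sketch that writes a few made-up syslog-style lines to a temporary file (the contents are hypothetical) and runs the same cut commands on it. It also shows a pitfall worth knowing: with -d ' ', every single space is a delimiter, so a date padded with two spaces ("Jun  2") produces an empty field and shifts every later column by one.

```shell
#!/usr/bin/env bash
# Hypothetical sample log: a three-word date, time, host, program, message.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
printf '%s\n' \
  'Wed Jun 12 10:01:02 web1 sshd: accepted key' \
  'Wed Jun 12 10:05:44 web2 cron: job started' \
  'Sun Jun  2 09:00:00 web1 sshd: session closed' > "$log"

# First six space-separated fields: for the first two lines that's
# the date, time, host and program name.
first_six=$(cut -d ' ' -f 1-6 "$log")
echo "$first_six"

# Only the third field (the day of the month). On the last line the two
# spaces in "Jun  2" create an empty third field, so that line prints blank.
days=$(cut -d ' ' -f 3 "$log")
echo "$days"
```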

awk is more powerful but not as concise. cut is handy for simply extracting columns, such as pulling a list of IP addresses out of your Apache logs, while awk can rearrange entire lines, which is useful for sorting a whole document by a specific column. awk is a full programming language, but printing columns takes only a simple command:

cat system.log | awk '{print $1, $2}'

awk runs your command once for each line of input. By default, it splits each line on runs of whitespace and stores each column in the variables $1, $2, $3, and so on. The command print $1 prints the first column, but there's no built-in shorthand for printing a range of columns; you have to write a loop.
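As a sketch, the loop below prints columns 3 through 5 by iterating over field numbers (the sample lines are made up). Because awk's default splitting treats a run of spaces as a single separator, the padded date "Jun  2" does not shift the columns the way it does with cut -d ' '.

```shell
#!/usr/bin/env bash
# Made-up sample lines; the last one pads the day with two spaces.
sample='Wed Jun 12 10:01:02 web1 sshd: accepted key
Wed Jun 12 10:05:44 web2 cron: job started
Sun Jun  2 09:00:00 web1 sshd: session closed'

# Print the first two columns, joined by awk's output separator (a space).
cols=$(printf '%s\n' "$sample" | awk '{print $1, $2}')
echo "$cols"

# Print a range of columns (3 through 5) by looping over field numbers;
# OFS goes between fields and ORS (a newline) ends each line.
range=$(printf '%s\n' "$sample" | awk '{
  for (i = 3; i <= 5; i++)
    printf "%s%s", $i, (i < 5 ? OFS : ORS)
}')
echo "$range"
```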

One benefit of awk is that the command can reference the whole line at once. The contents of the line are stored in variable $0, which you can use to print the whole line. So, you could, for example, print the third column before printing the rest of the line:

awk '{print $3 " " $0}'

The " " prints a space between $3 and $0. This command prints the third column twice, but you can work around that by setting $3 to an empty string:

awk '{printf "%s", $3; $3=""; print " " $0}'

Unlike print, printf doesn't append a newline. Similarly, you can exclude specific columns from the output by setting them to empty strings before printing $0:

awk '{$1=$2=$3=""; print $0}'
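Here's a small sketch of both tricks on a placeholder line (a b c d), printed inside brackets so the spacing is visible. It also shows a side effect: when you assign to a field, awk rebuilds $0 using the output separator, so each blanked column still leaves its space behind.

```shell
#!/usr/bin/env bash
line='a b c d'   # placeholder four-column line

# Move column 3 to the front: print it without a newline, blank it,
# then print a space and the rebuilt line.
moved=$(echo "$line" | awk '{printf "%s", $3; $3=""; print " " $0}')
echo "[$moved]"    # [c a b  d]

# Blank columns 1-3 and print what's left; their separators remain.
dropped=$(echo "$line" | awk '{$1=$2=$3=""; print $0}')
echo "[$dropped]"  # [   d]
```

If the leftover spaces get in the way, piping the output through tr -s ' ' squeezes each run of spaces into one.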

You can do a lot more with awk, including regex matching, but the out-of-the-box column extraction works well for this use case.

Sorting Columns with sort and uniq

The sort command can be used to order a list of data based on a specific column. The syntax is:

sort -k 1

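where the -k flag sets the column to sort by. Strictly, -k 1 means "from column 1 to the end of the line"; to sort on a single column only, give it an end position as well, as in -k 2,2. You pipe input into this command, and it prints the lines in sorted order. By default, sort compares text character by character (following your locale's collation rules), but it supports other orderings through flags, such as -n for numeric sort, -h for human-readable sizes (1M sorts after 1K), -M for month abbreviations, and -V for version numbers (file-1.2.3 sorts after file-1.2.1).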
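A minimal sketch of the difference between a text sort and a numeric sort, using a made-up "name size" list. LC_ALL=C is set only so the comparison is byte-wise and doesn't depend on your locale; the -h line assumes GNU coreutils sort.

```shell
#!/usr/bin/env bash
export LC_ALL=C   # byte-wise comparison, independent of locale

# Hypothetical "name size" pairs.
data='beta 100
alpha 20
gamma 3'

# Text comparison on column 2: "100" < "20" < "3", character by character.
text_order=$(printf '%s\n' "$data" | sort -k 2)
echo "$text_order"

# -k 2,2n limits the key to column 2 and compares it as a number.
num_order=$(printf '%s\n' "$data" | sort -k 2,2n)
echo "$num_order"

# -h understands size suffixes such as K, M and G (GNU sort).
sizes=$(printf '%s\n' 2G 512K 1M | sort -h)
echo "$sizes"
```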

The uniq command filters out duplicate lines, leaving only unique ones. It only compares adjacent lines (for performance reasons), so to remove duplicates throughout a file, you'll always need to run it after sort. The syntax is simply:

sort -k 1 | uniq

If you'd like to list only the duplicated lines (one copy of each), use the -d flag.
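The sketch below, on a made-up word list in which no duplicate sits next to its twin, shows why uniq needs sort first and what -d returns.

```shell
#!/usr/bin/env bash
export LC_ALL=C
# Made-up list: b, a and c repeat, but never on adjacent lines.
words='b
a
b
c
a
b'

# uniq on unsorted input removes nothing here: no two equal lines are adjacent.
unsorted=$(printf '%s\n' "$words" | uniq)
echo "$unsorted"

# Sorting first groups equal lines, so uniq can collapse every duplicate.
deduped=$(printf '%s\n' "$words" | sort | uniq)
echo "$deduped"

# -d prints one copy of each line that appears more than once.
dupes=$(printf '%s\n' "$words" | sort | uniq -d)
echo "$dupes"
```

For plain deduplication, sort -u gives the same result as sort | uniq in a single command.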

uniq can also count the number of duplicates with the -c flag, which makes it useful for tracking frequency. For example, to get a list of the top IP addresses hitting your Apache server, you could run the following command on your access.log:

cut -d ' ' -f 1 access.log | sort | uniq -c | sort -nr | head

This chain of commands cuts out the IP address column, sorts it to group duplicates together, removes the duplicates while counting each occurrence, sorts on the count column in descending numerical order, and finally keeps the top ten lines, leaving you with a list that looks like:

21 192.168.1.1
12 10.0.0.1
5 1.1.1.1
2 8.0.0.8
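To see the pipeline end to end, here is a sketch that runs it against a few made-up entries in Apache's Common Log Format (the IPs, timestamps and paths are invented). Note that uniq -c right-aligns the counts, so real output has some leading spaces.

```shell
#!/usr/bin/env bash
export LC_ALL=C
log=$(mktemp)
trap 'rm -f "$log"' EXIT
# Made-up Common Log Format entries; the client IP is the first field.
cat > "$log" <<'EOF'
10.0.0.1 - - [12/Jun/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 512
192.168.1.1 - - [12/Jun/2024:10:00:01 +0000] "GET /a HTTP/1.1" 404 128
10.0.0.1 - - [12/Jun/2024:10:00:02 +0000] "GET /b HTTP/1.1" 200 256
192.168.1.1 - - [12/Jun/2024:10:00:03 +0000] "GET / HTTP/1.1" 200 512
192.168.1.1 - - [12/Jun/2024:10:00:04 +0000] "GET /c HTTP/1.1" 200 64
1.1.1.1 - - [12/Jun/2024:10:00:05 +0000] "GET / HTTP/1.1" 200 512
EOF

# Extract IPs, group them, count each group, sort by count
# (highest first), and keep at most the top ten.
top=$(cut -d ' ' -f 1 "$log" | sort | uniq -c | sort -nr | head)
echo "$top"
```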

You can apply these same techniques to your log files, in addition to other utilities like awk and sed, to pull useful information out. These chained commands are long, but you don't have to type them in every time, as you can always store them in a bash script or alias them through your ~/.bashrc.
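For example, a shell function in ~/.bashrc can wrap the top-IP pipeline. A function is used here rather than an alias because it can take arguments; the name top_ips and its defaults are hypothetical.

```shell
# Hypothetical helper for ~/.bashrc: top_ips [file] [count]
# Prints the most frequent values of field 1 (the client IP) in a log.
top_ips() {
  local file=${1:-access.log}   # log file to read (default is an assumption)
  local count=${2:-10}          # how many lines to show
  cut -d ' ' -f 1 "$file" | sort | uniq -c | sort -nr | head -n "$count"
}
```

After reloading your shell with source ~/.bashrc, running top_ips followed by the path to your access log and a number (say, 5) would show the five busiest addresses; the log's location varies by distribution.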

Filtering Data with grep and awk

grep is a very simple command; you give it a search term and pass it input, and it will spit out every line containing that search term. For example, if you wanted to search your Apache access log for 404 errors, you could do:

cat access.log | grep "404"

which would spit out a list of log entries matching the given text.

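However, grep matches the text anywhere on the line rather than in a specific column, so this command will also return false positives wherever "404" appears elsewhere, such as in a URL or a response size. If you want to search only the HTTP status code column (the ninth whitespace-separated field in Apache's common and combined log formats), you'll need to use awk: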

cat access.log | awk '{if ($9 == "404") print $0;}'

With awk, you can also do negative searches on a specific column. For example, you can list all log entries that didn't return status code 200 (OK):

cat access.log | awk '{if ($9 != "200") print $0;}'

On top of that, you have access to all the programming features awk provides.
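The sketch below contrasts the two approaches on three made-up Common Log Format entries, one of which has "404" in its URL rather than its status. It uses awk's shorthand: a condition with no action, such as $9 == "404", prints matching lines, just like the if ... print $0 form.

```shell
#!/usr/bin/env bash
log=$(mktemp)
trap 'rm -f "$log"' EXIT
# Made-up entries; the status code is field 9.
cat > "$log" <<'EOF'
10.0.0.1 - - [12/Jun/2024:10:00:00 +0000] "GET /missing HTTP/1.1" 404 128
10.0.0.2 - - [12/Jun/2024:10:00:01 +0000] "GET /404.html HTTP/1.1" 200 512
10.0.0.3 - - [12/Jun/2024:10:00:02 +0000] "POST /login HTTP/1.1" 302 0
EOF

# grep -c counts lines containing "404" anywhere, including inside the URL.
grep_hits=$(grep -c '404' "$log")
echo "grep: $grep_hits"

# awk compares only field 9, so just the real 404 response matches.
awk_matches=$(awk '$9 == "404"' "$log")
echo "$awk_matches"

# Negated test on the same column: the status of every request that wasn't a 200.
not_ok=$(awk '$9 != "200" {print $9}' "$log")
echo "$not_ok"
```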

GUI Options for Web Logs

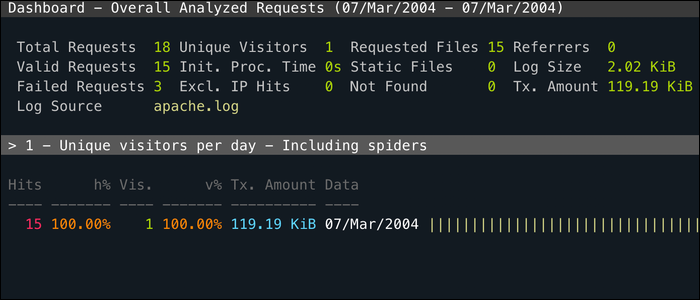

GoAccess is a CLI utility that analyzes your web server's access log in real time and breaks it down by each useful field. It runs entirely in your terminal, so you can use it over SSH, but it also offers a much more intuitive web interface.

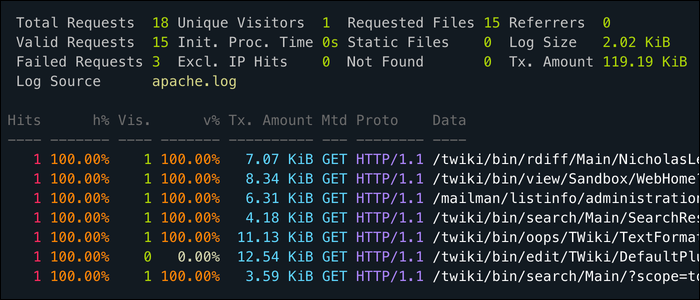

apachetop is another utility, specifically for Apache, that can filter and sort by columns in your access log. It runs in real time directly on your access.log.