
Docker is a containerization platform that simplifies the packaging and execution of applications. Containers run as isolated processes with their own filesystem but share their host's kernel. Docker has risen to prominence as a way of implementing reproducible development environments and distributed deployment architectures.

Node.js is the leading JavaScript runtime for backend development. Successfully launching a Node.js web service requires you to have an environment with the runtime installed, your application code available, and a mechanism that handles automatic restarts in case of a crash.

In this guide we'll use Docker to containerize a simple Node.js app created with the popular Express web framework. Docker is a good way to deploy Node-based systems as it produces a consistent environment that includes everything you need to run your service. The Docker daemon has integrated support for restarting failed containers when their foreground process crashes, solving one of the challenges of Node.js deployments.

Creating Your Node Project

We'll skip the details of implementing your application. Create a directory for your project and add some server code inside it. Here's a basic app.js that listens on port 8080 and responds to every request with a hardcoded response:

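```javascript
const express = require("express");

const app = express();

// app.use() with no path matches every request (any method, any path).
// The bare "*" path used in older examples is rejected by Express 5's router.
app.use((req, res) => res.send("<p>It works!</p>"));

app.listen(8080, () => console.log("Listening on 8080"));
```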

Add Express to your project using npm:

```shell
npm init
npm install --save express
```

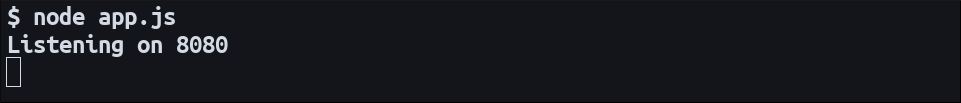

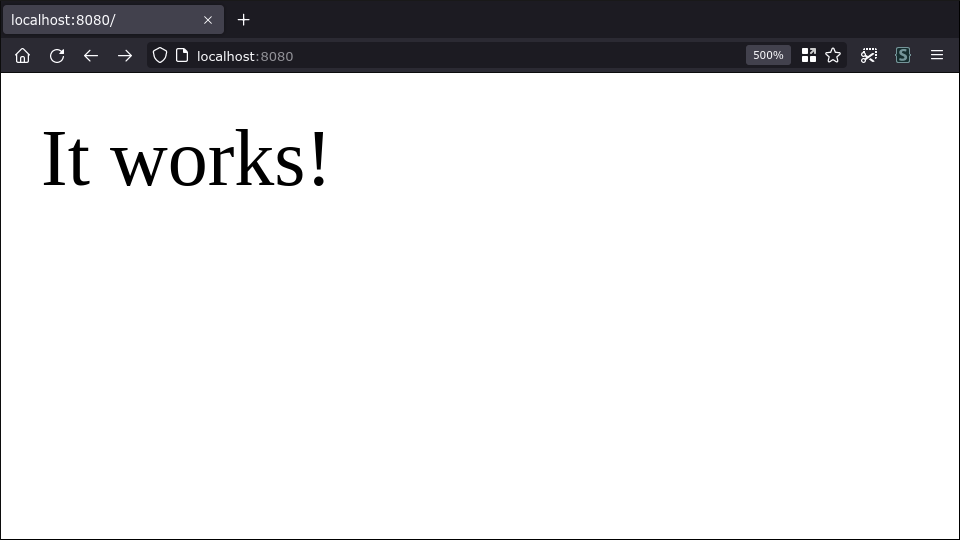

Start your app to test that it works:

```shell
node app.js
```

You should be able to visit localhost:8080 in your browser to see the sample response.

Writing a Dockerfile

Now it's time to start Dockerizing your project. First you need an image for your application. Images encapsulate your code and dependencies as a single package that you use to start container instances. The instructions in your Dockerfile define the state of your containers' initial filesystem.

Here's a Dockerfile that works for the sample application:

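```dockerfile
# Node 16 is end-of-life; use a maintained LTS base image
FROM node:22

WORKDIR /app

# Copy the npm manifests first so the dependency layer can be cached
COPY package.json .
COPY package-lock.json .
RUN npm ci

# Application code changes most often, so it is copied last
COPY app.js .

CMD ["app.js"]
```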

This Dockerfile selects the official Node.js Docker image as its base via the FROM statement. Your image inherits everything in the base, then adds content through the instructions that follow.

The working directory is set to /app by the WORKDIR line. The following COPY statements will deposit files into the /app directory inside the container image.

Installing Dependencies

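The next stage copies in npm's package.json and package-lock.json files and runs npm ci. This installs your project's npm dependencies (Express in this case) within the image's filesystem, using the exact versions recorded in the lockfile. npm ci fails if the lockfile is missing or out of sync with package.json, which keeps builds reproducible.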

Don't use COPY node_modules/ . to copy the existing node_modules folder in your project directory - this would prevent you from reusing the Dockerfile in other build environments. Dockerfiles should let you create consistent builds with just the content of your source control repository. If a file or folder's in your .gitignore, it shouldn't be referenced in a Dockerfile COPY instruction.

Copying Application Code

After npm ci has run, your app's code is copied into the image. The placement of this COPY instruction after the RUN, separating it from the previous copies, is deliberate. Each instruction creates a new layer in your image; Docker's build process caches each layer to accelerate subsequent builds. Once the content of one layer changes, the cache of all following layers will be invalidated.

This is why application code should be copied in after npm ci has been executed. The code will usually change much more frequently than the content of your npm lockfile. Image rebuilds that only involve code changes will effectively skip the RUN npm ci stage (and all earlier stages), drastically accelerating the process when you've got a lot of dependencies.
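To make the invalidation rule concrete, here's a toy model in which each step's cache key hashes the previous step's key, the instruction text, and, for COPY steps, the copied file's contents. It mirrors the documented behavior (Docker compares instruction strings, and checksums the files for COPY and ADD) but is a simplification, not Docker's actual cache implementation; buildKeys, firstRebuiltStep, and the file contents are invented for illustration.

```javascript
// Toy model of Docker's build cache (NOT the real algorithm): a step's key
// depends on its parent's key, its instruction, and any copied file contents,
// so a change in one step changes the keys of every step after it.
const crypto = require("node:crypto");

const sha = (text) => crypto.createHash("sha256").update(text).digest("hex");

function buildKeys(steps, files) {
  const keys = [];
  let parent = "";
  for (const step of steps) {
    // For COPY steps, fold a hash of the source file's contents into the key.
    const content = step.copy ? sha(files[step.copy] ?? "") : "";
    parent = sha(parent + "\n" + step.instruction + "\n" + content);
    keys.push(parent);
  }
  return keys;
}

// Index of the first step that must re-run, or -1 if every step is cached.
function firstRebuiltStep(oldKeys, newKeys) {
  return newKeys.findIndex((key, i) => key !== oldKeys[i]);
}

const steps = [
  { instruction: "FROM node:<tag>" },
  { instruction: "WORKDIR /app" },
  { instruction: "COPY package.json .", copy: "package.json" },
  { instruction: "COPY package-lock.json .", copy: "package-lock.json" },
  { instruction: "RUN npm ci" },
  { instruction: "COPY app.js .", copy: "app.js" },
  { instruction: 'CMD ["app.js"]' },
];

const before = { "package.json": "{v1}", "package-lock.json": "{lock v1}", "app.js": "v1" };
const codeChange = { ...before, "app.js": "v2" };
const depChange = { ...before, "package-lock.json": "{lock v2}" };

const base = buildKeys(steps, before);
console.log(firstRebuiltStep(base, buildKeys(steps, codeChange))); // 5: COPY app.js onward
console.log(firstRebuiltStep(base, buildKeys(steps, depChange))); // 3: lockfile COPY, so npm ci re-runs
```

A code-only change invalidates the cache from step 5, leaving the expensive RUN npm ci result reusable, while a lockfile change forces everything from step 3 onward to re-run.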

Setting the Image's Command

The final Dockerfile stage uses the CMD instruction to run your app automatically when the container starts. This works because of how the Node.js base image is configured: its entrypoint is a docker-entrypoint.sh script, and your CMD is passed to it as arguments. When the first argument isn't an executable command, as with app.js, the script runs it with node, so node app.js becomes the foreground process of containers started from your new image.
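Under the hood, Docker builds the container's command line by appending the exec-form CMD array to the ENTRYPOINT array. In the official node images, ENTRYPOINT is a docker-entrypoint.sh script that prepends node when the first argument starts with "-" or isn't a command it can find. The sketch below models that in simplified form; SYSTEM_COMMANDS is a hypothetical stand-in for the script's command -v lookup, not the real list.

```javascript
// Exec-form ENTRYPOINT and CMD arrays are concatenated into one argv.
function containerArgv(entrypoint, cmd) {
  return [...entrypoint, ...cmd];
}

// Hypothetical stand-in for `command -v` inside the image.
const SYSTEM_COMMANDS = new Set(["node", "npm", "sh", "bash"]);
const isSystemCommand = (name) => SYSTEM_COMMANDS.has(name);

// Simplified model of docker-entrypoint.sh: run the arguments with node when
// the first one is an option ("-...") or not a known command (e.g. app.js).
function nodeEntrypoint(args) {
  const first = args[0] ?? "";
  if (first.startsWith("-") || !isSystemCommand(first)) {
    return ["node", ...args];
  }
  return args;
}

const argv = containerArgv(["docker-entrypoint.sh"], ["app.js"]);
console.log(argv); // [ 'docker-entrypoint.sh', 'app.js' ]
console.log(nodeEntrypoint(argv.slice(1))); // [ 'node', 'app.js' ]
console.log(nodeEntrypoint(["npm", "test"])); // [ 'npm', 'test' ]
```

The last line shows why overriding the command with something like npm test still works: a recognized command is run as-is rather than being handed to node.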

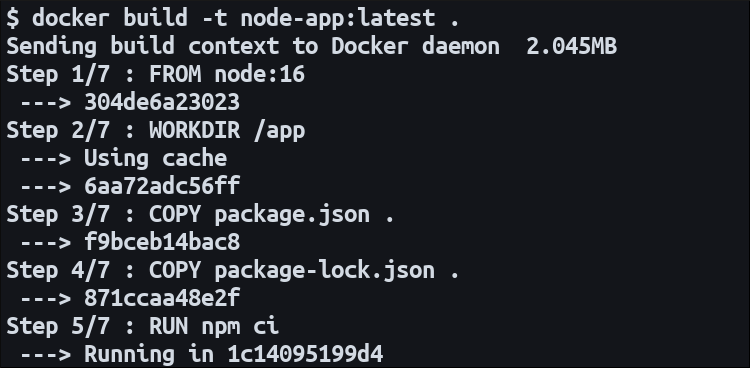

Building Your Image

Next you need to build your image:

```shell
docker build -t node-app:latest .
```

Docker will take the Dockerfile in your working directory, run the instructions within it, and tag the resulting image as node-app:latest. The final . (period) specifies your working directory as the image build context. This determines the paths that can be referenced by the COPY instructions in your Dockerfile.

Build Optimization

One way to improve build performance is to add a .dockerignore file to the root of your project. Give the file the following content:

```
node_modules/
```

This file defines paths in your working directory that will not be included in the build context. You won't be able to reference them inside your Dockerfile. In the case of node_modules, this directory's content is irrelevant to the build as we're installing the dependencies anew via the RUN npm ci instruction. Specifically excluding the node_modules already present in your working directory saves having to copy all those files into Docker's temporary build context location. This increases efficiency and reduces the time spent preparing the build.

Starting a Container

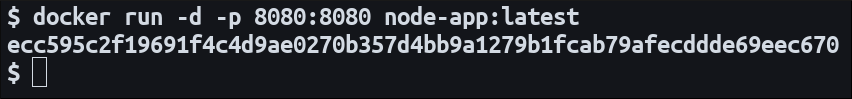

At this point you're ready to run your application using Docker:

```shell
docker run -d \
  -p 8080:8080 \
  --name my-app \
  --restart on-failure \
  node-app:latest
```

The docker run command is used to start a new container instance from a specified image. A few extra flags are added to properly configure the container for the intended use case:

- -d: Detaches your shell from the container's foreground process, effectively running it as a background server.
- -p: Binds port 8080 on your host to port 8080 inside the container (the port our Express sample app listens on). This means traffic to localhost:8080 will be passed through to the corresponding container port. You can change the host port by modifying the first part of the bind definition, such as 8100:8080 to access your container on localhost:8100.
- --name: Assigns the container a friendly name which you can use to reference it in other Docker CLI commands.
- --restart: Selects the restart policy to apply to the container. The on-failure setting means Docker will automatically restart the container if it exits with a non-zero (failure) exit code, such as when your application crashes.
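The on-failure policy reacts to the container's exit status, which is the exit status of its foreground process. Node exits with code 1 when an exception goes uncaught, so a crash in the Express app is exactly the case this policy handles, while a clean exit with code 0 is not restarted. The sketch below, built around a hypothetical exitCodeOf helper, confirms this locally by running short scripts in child Node processes.

```javascript
// Runs a snippet of JavaScript in a separate Node process and returns its exit
// code: the same kind of status Docker inspects for --restart on-failure.
const { spawnSync } = require("node:child_process");

function exitCodeOf(source) {
  const result = spawnSync(process.execPath, ["-e", source], { stdio: "ignore" });
  return result.status;
}

const crashed = exitCodeOf('throw new Error("simulated crash")');
const clean = exitCodeOf('console.log("shutting down cleanly")');

console.log(`crash exit code: ${crashed}`); // 1: on-failure would restart
console.log(`clean exit code: ${clean}`); // 0: on-failure would not restart
```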

The image built in the previous step is referenced as the final argument to the docker run command. The container ID will be emitted to your terminal window; you should be able to access your Node.js app by visiting localhost:8080 again. This time the server's running inside the Docker container, instead of using the node process installed on your host.

Summary

Docker helps you deploy Node.js web services by containerizing the entire application environment. You can start a container from your image with a single docker run command on any host with Docker installed. This removes the complexity of maintaining Node.js versions, installing npm modules, and monitoring for situations where your application process needs to be restarted.

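When you've made code changes and want to launch your update, rebuild your Docker image, then stop and remove your old container with docker stop <container-name> followed by docker rm <container-name> (docker rm refuses to remove a container that's still running). You can then start a replacement instance that uses the revised image.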

You might want a slightly different routine in production. Although you can use a regular Docker installation with docker run, this tends to be unwieldy for all but the simplest applications. It's more common to use a tool like Docker Compose or Kubernetes to define container configuration in a file that can be versioned inside your repository.

These mechanisms do away with the need to repeat your docker run flags each time you start a new container. They also facilitate container replication to scale your service and provide redundancy. If you're deploying to a remote host, you'll also need to push your image to a Docker registry so it can be "pulled" from your production machine.

Another production-specific consideration is how you'll route traffic to your containers. Port bindings can suffice to begin with, but eventually you'll reach a situation where you want multiple containers on one host, each expecting traffic on the same public port (such as 80). In this case you can deploy a reverse proxy to route traffic to individual container ports based on request characteristics such as domain name and headers.