Data is one of the most valuable commodities in the world, and it's not hard to see why. From marketing to genomics, the analysis of large data sets leads to predictive models, which steer businesses toward favorable outcomes. The more data you use, the better those models become and the better the outcomes they can produce. Of course, this means that moving data from one place to another is a crucial skill for any engineer, but it's not always as easy as it sounds.

For example, if you use AWS S3 bucket storage, then moving data to another S3 bucket is a single CLI command, `aws s3 cp s3://SourceBucket/ s3://DestinationBucket/ --recursive`. Moving those same files to a different cloud provider, like Microsoft Azure or Google Cloud Platform, requires an entirely different tool.

By the end of this tutorial, you'll be able to sync files from an AWS S3 bucket to an Azure blob storage container using rclone, an open-source data synchronization tool that works with most cloud providers and local file systems.

Prerequisites

To follow along, you'll need the following:

- An AWS S3 bucket

- An Azure blob storage container

- AWS access keys and Azure storage account access keys

- A computer running any modern operating system (the screenshots are from Windows 10 with WSL)

- Some files to copy

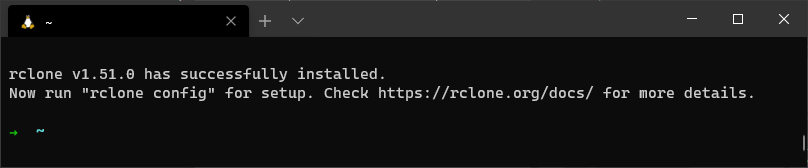

How to Set Up rclone

Installing rclone is different for each operating system, but once it's installed, the instructions are the same: run `rclone config`.

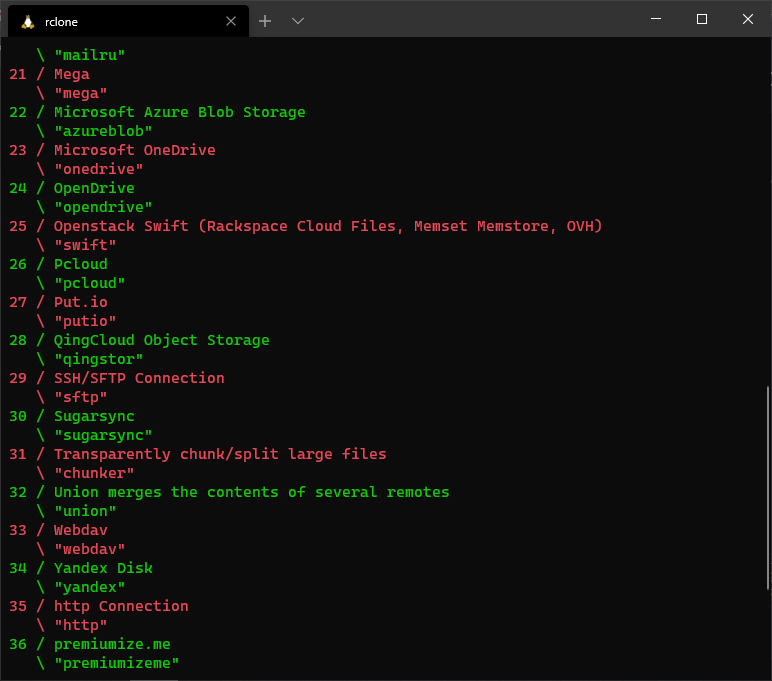

Running the config command will prompt you to link the accounts of your cloud providers to rclone. The rclone term for this is a remote. When you run the config command, enter `n` to create a new remote. You'll need one for both AWS and Azure, but there are several other providers to choose from as well.

Azure

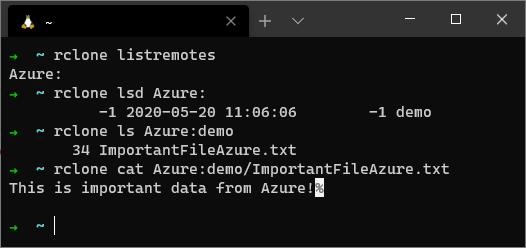

After choosing Azure blob storage, you'll need:

- A name for the remote. (In this demo, it's "Azure.")

- The storage account's name

- One of the storage account access keys

You'll be prompted for a Shared Access Signature URL, and while it's possible to set up the remote that way, this demo just uses an access key. Press Enter through the remaining prompts to accept the default values, and you should be able to start using your remote.
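If you'd rather script this step than answer the prompts, rclone also provides a `rclone config create` subcommand. The following is a minimal sketch, assuming the remote name from this demo ("Azure"). The function name `create_azure_remote` and its arguments are made up for illustration, while `azureblob`, `account`, and `key` are the backend type and option names that correspond to the values listed above.

```shell
# Sketch: create the "Azure" remote without the interactive prompts.
# create_azure_remote is a hypothetical helper name for this example.
create_azure_remote() {
    # $1 = storage account name
    # $2 = one of the storage account access keys
    rclone config create Azure azureblob account "$1" key "$2"
}
```

You could call it as `create_azure_remote "$AZURE_ACCOUNT" "$AZURE_KEY"`, where both variable names are placeholders. Reading the key from an environment variable rather than typing it inline keeps it out of your shell history.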

To list the remotes configured on your system, enter `rclone listremotes`. You can also list any blob storage containers by running `rclone lsd <remote_name>:`. Make sure to include a `:` at the end of the remote name when running these commands because that is how rclone determines whether you mean a remote or a local path. You can run `rclone --help` at any time to get the list of available commands.

Using the `ls` and `cat` commands with an rclone remote.

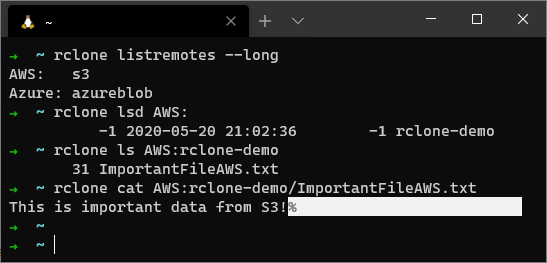
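The commands above can be grouped into a small helper for looking around a remote. This is only a sketch: `inspect_remote` and `show_file` are hypothetical names, and the container and file names you pass in are your own. The `lsd`, `ls`, and `cat` subcommands are the rclone commands discussed in this section.

```shell
# Sketch: look around a configured remote.
# inspect_remote and show_file are hypothetical helper names.
inspect_remote() {
    remote="$1"     # remote name without the colon, for example Azure
    container="$2"  # container (or bucket) name on that remote

    rclone lsd "${remote}:"             # list the containers on the remote
    rclone ls "${remote}:${container}"  # list the files inside one container
}

show_file() {
    # $1 = remote name, $2 = container name, $3 = path of a file in it
    rclone cat "$1:$2/$3"               # print the file's contents to stdout
}
```

For example, `inspect_remote Azure my-container` lists the containers on the Azure remote and then the files in `my-container` (a placeholder name).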

AWS S3

Setting up the remote for an S3 bucket is very similar to setting up the Azure blob storage container, with a few small differences. Because there are other cloud storage providers that are considered S3 compatible by rclone, you may also get a few extra prompts when running `rclone config`. You'll need:

- A name for the remote. (In this demo, it's "AWS.")

- An AWS access key and corresponding secret access key

- The AWS region that the bucket is in

The rest of the prompts configure options for creating buckets or performing other operations, but for copying files, you can skip them by hitting Enter.

If the user that the access keys belong to has access to the bucket, you can work with it using the same commands you used for the Azure remote.
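As with Azure, the same remote can be created in one step with `rclone config create`. The sketch below assumes the remote name from this demo ("AWS"). `create_aws_remote` is a hypothetical helper name, and `provider`, `access_key_id`, `secret_access_key`, and `region` are the s3 backend options that match the three items listed above.

```shell
# Sketch: create the "AWS" remote without the interactive prompts.
# create_aws_remote is a hypothetical helper name for this example.
create_aws_remote() {
    # $1 = AWS access key ID
    # $2 = AWS secret access key
    # $3 = region the bucket is in, for example us-east-1
    rclone config create AWS s3 provider AWS \
        access_key_id "$1" secret_access_key "$2" region "$3"
}
```

Setting `provider AWS` answers the extra prompt that rclone shows for S3-compatible services. A call might look like `create_aws_remote "$AWS_ACCESS_KEY_ID" "$AWS_SECRET_ACCESS_KEY" us-east-1`, with the region replaced by your bucket's own.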

You can confirm the type of remote by adding the `--long` flag to the `rclone listremotes` command.
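Before transferring anything, it can help to check that both remotes actually exist. Here is one possible way to do that in a script, relying on `rclone listremotes` printing one remote per line with a trailing colon. The function name `require_remotes` is an assumption made for this example.

```shell
# Sketch: fail early if an expected remote has not been configured.
# require_remotes is a hypothetical helper name.
require_remotes() {
    # listremotes prints one configured remote per line, each ending in a colon
    configured="$(rclone listremotes)"
    for name in "$@"; do
        # -x matches the whole line, -F treats the name as a literal string
        if ! printf '%s\n' "$configured" | grep -qxF "${name}:"; then
            echo "missing remote: ${name}" >&2
            return 1
        fi
    done
}
```

With the remotes from this demo, `require_remotes AWS Azure` should succeed silently, and it returns a non-zero status as soon as it finds a name that isn't configured.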

Running rclone

Now that the remotes have been configured, you can transfer files, create new buckets, or manipulate the files in any way you need to using a standard set of commands. Instead of relying on knowing how to work with the AWS S3 CLI or Azure PowerShell, you can communicate between both storage buckets with rclone.

Some common useful commands to get you started are:

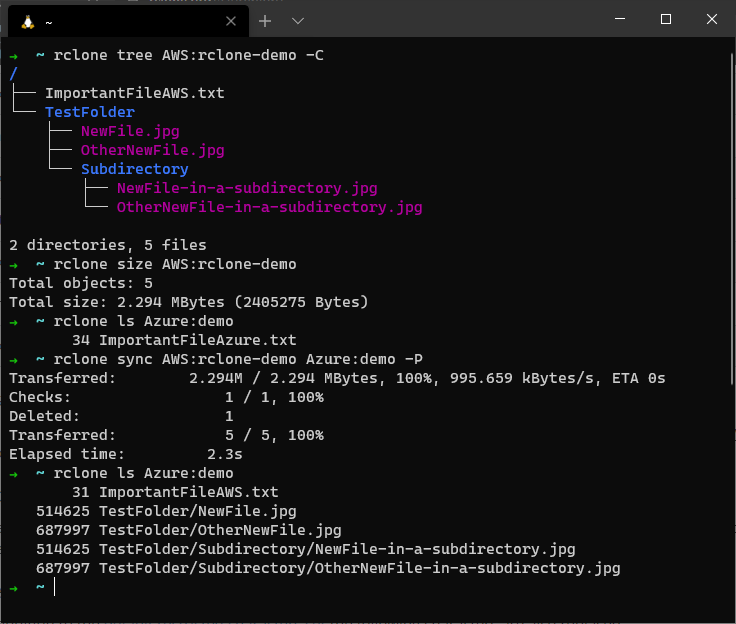

- `rclone tree <Remote>:<BucketName> -C`

- `rclone size <Remote>:<BucketName>`

- `rclone sync <Source> <Target> -P`

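In the following example, the AWS S3 bucket is synced to the Azure remote. Because sync makes the target match the source, the existing file in Azure is deleted and then the data from S3 is copied over. If you need to keep the files that are already in the target folder, use the `rclone copy` command instead (`rclone copyto` behaves the same way when the source is a directory).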
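Since sync can delete files on the target, previewing it first is a reasonable habit. The sketch below wraps the commands from this section in three small functions. The function names are hypothetical, and the bucket and container names in the usage note are placeholders. `--dry-run` and `-P` are standard rclone flags.

```shell
# Sketch: preview, sync, or copy between two remotes.
# $1 is the source and $2 the target, both written as <Remote>:<BucketName>.
preview_sync() {
    # --dry-run reports what would be copied or deleted without changing anything
    rclone sync "$1" "$2" --dry-run
}

run_sync() {
    # Makes the target match the source; files only on the target are deleted
    rclone sync "$1" "$2" -P
}

copy_only() {
    # Copies new and changed files but never deletes anything on the target
    rclone copy "$1" "$2" -P
}
```

For instance, you might run `preview_sync AWS:my-bucket Azure:my-container`, review the output, and then run `run_sync` or `copy_only` with the same two arguments depending on whether the target's existing files should survive.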

Summary

By now, you should be comfortable installing rclone and configuring remotes, as well as using those remotes to copy data between different clouds. rclone is an extremely flexible tool and isn't just limited to AWS and Azure, so if you use another cloud provider, try setting up remotes for them as well.