
Minio is a self-hosted object storage system that's compatible with the Amazon S3 API. In this guide, we'll use Minio to set up shared caching for GitLab Runner as an alternative to a cloud-hosted object storage solution.

What's The Problem?

GitLab CI pipelines support a `cache` to accelerate future runs. Caches are stored locally on each runner instance by default. You can use an object storage provider instead to enable "shared" cache mode.

Shared caching ensures multiple jobs can access the cache simultaneously. Concurrent access isn't supported when you're using local caching with the Docker executor, which can reduce pipeline performance due to missed cache hits.

Although cache restoration is intended to be on a "best effort" basis, meaning your jobs shouldn't rely on the cache containing content from an earlier job, in practice many people do use the `cache` field to pass data between their jobs. This doesn't currently work when you have multiple jobs that run in parallel and try to restore the same cache.

Self-hosting a Minio installation alongside your GitLab instance lets you benefit from more reliable cache restoration that still works predictably with parallel jobs. Here's how to install Minio and configure GitLab Runner to use it for caching.

Installing Minio

Minio Server can be downloaded as a standalone binary or as `.deb` and `.rpm` packages. We're focusing on the Debian package in this guide. If you use the binary directly, you'll need to manually add the Minio service script to your `init` implementation to make Minio start with your machine.

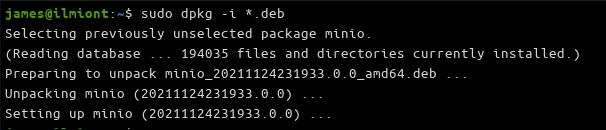

Download and install Minio's `.deb` package using the instructions on the Minio website to get the latest version. This will add the Minio server to your system and register its service definition.

Minio's service definition expects the server to run as the minio-user user. Create this user account now:

sudo useradd -r minio-user -s /sbin/nologin

Next, create a Minio data directory. All the files that are uploaded to your object store will be saved to this location. We're using /mnt/minio for the purposes of this tutorial. Use chown to give your minio-user account ownership of the directory, then chmod to set its permissions:

sudo mkdir -p /mnt/minio

sudo chown -R minio-user:minio-user /mnt/minio

sudo chmod -R 0775 /mnt/minio
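Wrong ownership or permissions on the data directory are a common reason for Minio failing to start. The Python sketch below (Python 3.9+) checks that a path is owned by minio-user and, optionally, that it has the 0775 mode set above. The helper names are hypothetical, not part of Minio, and the check only inspects the top-level path rather than everything chmod -R touched.

```python
import os
import pwd
import stat
from typing import Optional


def owner_name(uid: int) -> str:
    """Map a numeric UID to a user name, falling back to the number itself."""
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:
        return str(uid)


def check_owner_and_mode(path: str, expected_user: str,
                         expected_mode: Optional[int] = None) -> list[str]:
    """Return a list of problems with `path`; an empty list means it looks right."""
    if not os.path.exists(path):
        return [f"{path} does not exist"]
    st = os.stat(path)
    problems = []
    owner = owner_name(st.st_uid)
    if owner != expected_user:
        problems.append(f"{path} is owned by {owner}, expected {expected_user}")
    mode = stat.S_IMODE(st.st_mode)  # permission bits only, e.g. 0o775
    if expected_mode is not None and mode != expected_mode:
        problems.append(f"{path} has mode {oct(mode)}, expected {oct(expected_mode)}")
    return problems


if __name__ == "__main__":
    # Paths from this guide; missing paths are reported rather than raising.
    print(check_owner_and_mode("/mnt/minio", "minio-user", 0o775))
    print(check_owner_and_mode("/etc/default/minio", "minio-user"))
```

The same helper can be pointed at the /etc/default/minio config file once you've changed its ownership later in the guide.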

Creating a Minio Config File

The Minio service loads its configuration values from the /etc/default/minio file. Create this file now and add the following content:

MINIO_ROOT_USER="minio"

MINIO_ROOT_PASSWORD="P@$$w0rd"

MINIO_OPTS="--address :9600 --console-address :9601"

MINIO_VOLUMES="/mnt/minio"

The first two lines define credentials for Minio's initial root user. Change the password to a secure value.
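If you'd rather not invent a password by hand, you can generate one. This Python sketch uses the standard secrets module. Its alphabet deliberately leaves out $, quotes and backslashes; that's an assumption made to avoid surprises if the file is ever read by a shell (where "$$" inside double quotes expands to a process ID), not a Minio rule. The 8-character floor reflects Minio's minimum secret length as documented at the time of writing, so treat it as an assumption too.

```python
import secrets
import string

# Letters, digits, '-' and '_' only: leaving out '$', quotes and backslashes
# avoids surprises if the value is ever read by a shell (an assumption, not a Minio rule).
ALPHABET = string.ascii_letters + string.digits + "-_"
MIN_LENGTH = 8  # assumed Minio minimum secret length


def generate_secret(length: int = 32) -> str:
    """Return a cryptographically random password of `length` characters."""
    if length < MIN_LENGTH:
        raise ValueError(f"secrets shorter than {MIN_LENGTH} characters are too short for Minio")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(generate_secret())
```

The same generator works for the Secret Key of the GitLab Runner user you'll create in the Minio Console.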

The MINIO_OPTS line provides Minio server settings. We're explicitly setting the API listening port to 9600 (Minio's default is 9000) and exposing Minio's web console on 9601. The MINIO_VOLUMES directive defines where Minio will store your data; it's set to the directory created earlier.
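To catch typos before starting the service, the Python sketch below parses the simple KEY="value" format used above and pulls the API and console ports out of MINIO_OPTS. These are hypothetical helpers, not something Minio ships: they perform no variable expansion, only understand the space-separated `--address :PORT` form, and the 3- and 8-character credential minimums are assumptions based on Minio's documented requirements.

```python
import shlex


def parse_env_file(text: str) -> dict[str, str]:
    """Parse simple KEY="value" lines; no shell-style expansion is performed."""
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a KEY=value line
        value = value.strip()
        # Strip one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        env[key.strip()] = value
    return env


def listen_ports(minio_opts: str) -> dict[str, int]:
    """Extract ports from '--address :PORT' and '--console-address :PORT' flags."""
    args = shlex.split(minio_opts)
    ports: dict[str, int] = {}
    for flag, name in (("--address", "api"), ("--console-address", "console")):
        if flag in args and args.index(flag) + 1 < len(args):
            address = args[args.index(flag) + 1]
            ports[name] = int(address.rsplit(":", 1)[-1])
    return ports


def validate(env: dict[str, str]) -> list[str]:
    """Report missing settings and credentials below the assumed minimum lengths."""
    problems = []
    if len(env.get("MINIO_ROOT_USER", "")) < 3:
        problems.append("MINIO_ROOT_USER should be at least 3 characters")
    if len(env.get("MINIO_ROOT_PASSWORD", "")) < 8:
        problems.append("MINIO_ROOT_PASSWORD should be at least 8 characters")
    if not env.get("MINIO_VOLUMES"):
        problems.append("MINIO_VOLUMES is not set")
    return problems
```

To check your real file, read /etc/default/minio as text, pass it to `parse_env_file`, then call `listen_ports(env["MINIO_OPTS"])` and `validate(env)`.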

Next, change the ownership of your config file to minio-user. Minio might fail to start if the ownership is incorrect:

sudo chown minio-user:minio-user /etc/default/minio

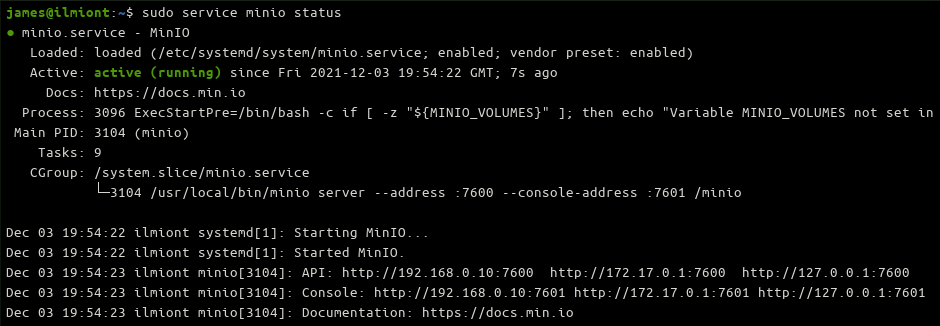

Start the Minio service, then access the web console in your browser at localhost:9601. Use your root user credentials to log in.

sudo service minio start
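Besides opening the console in a browser, you can confirm the server is up from a script. This Python sketch requests Minio's unauthenticated liveness endpoint, /minio/health/live, on the API port (9600 in this guide, not the console port). The function name and defaults are hypothetical, and proxies are bypassed on the assumption that Minio is reachable directly on your network.

```python
import urllib.request


def minio_is_live(host: str = "localhost", port: int = 9600, timeout: float = 3.0) -> bool:
    """Return True if Minio's liveness endpoint responds with HTTP 200."""
    url = f"http://{host}:{port}/minio/health/live"
    # An empty ProxyHandler stops urllib from routing local requests via a proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # URLError, HTTPError, refused connections and timeouts all derive from OSError.
        return False
```

Calling `minio_is_live()` on the Minio host should return True once the service has started.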

Configuring Minio for GitLab

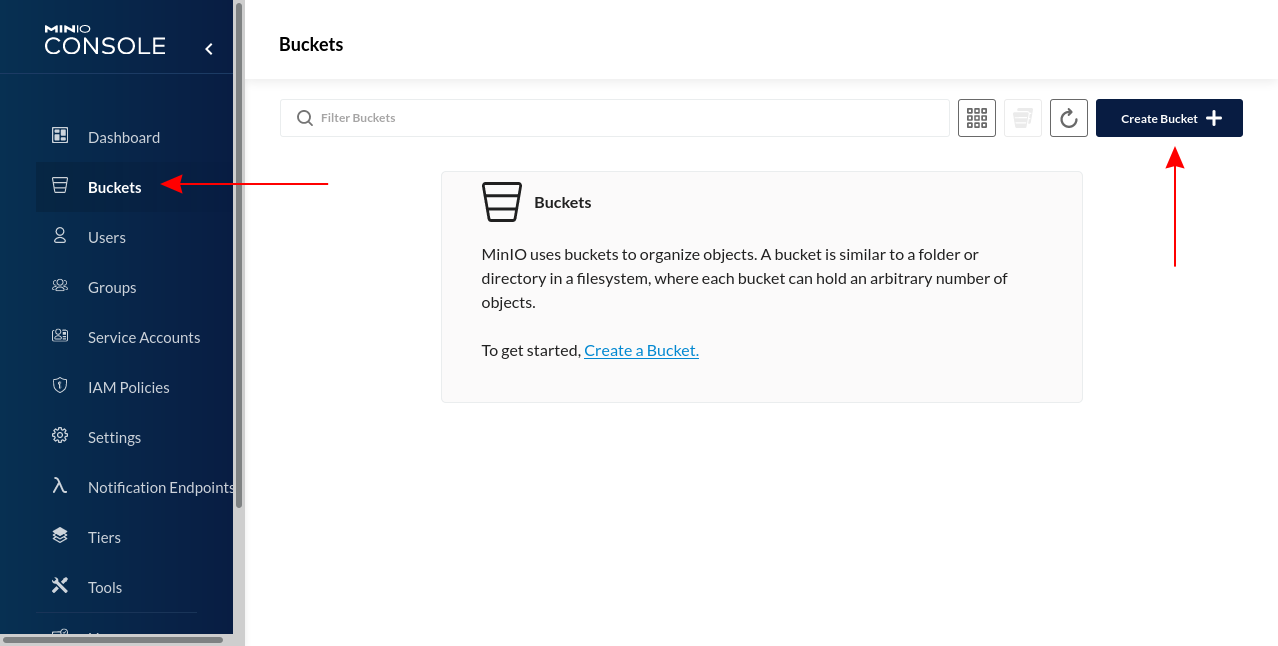

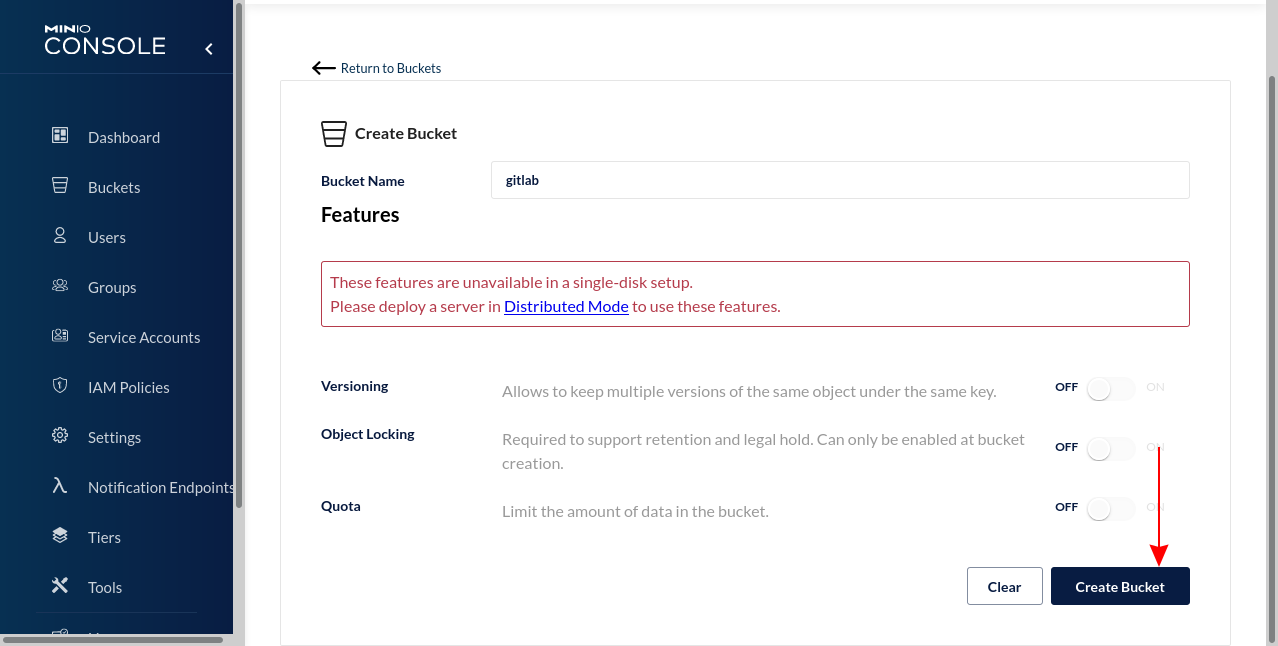

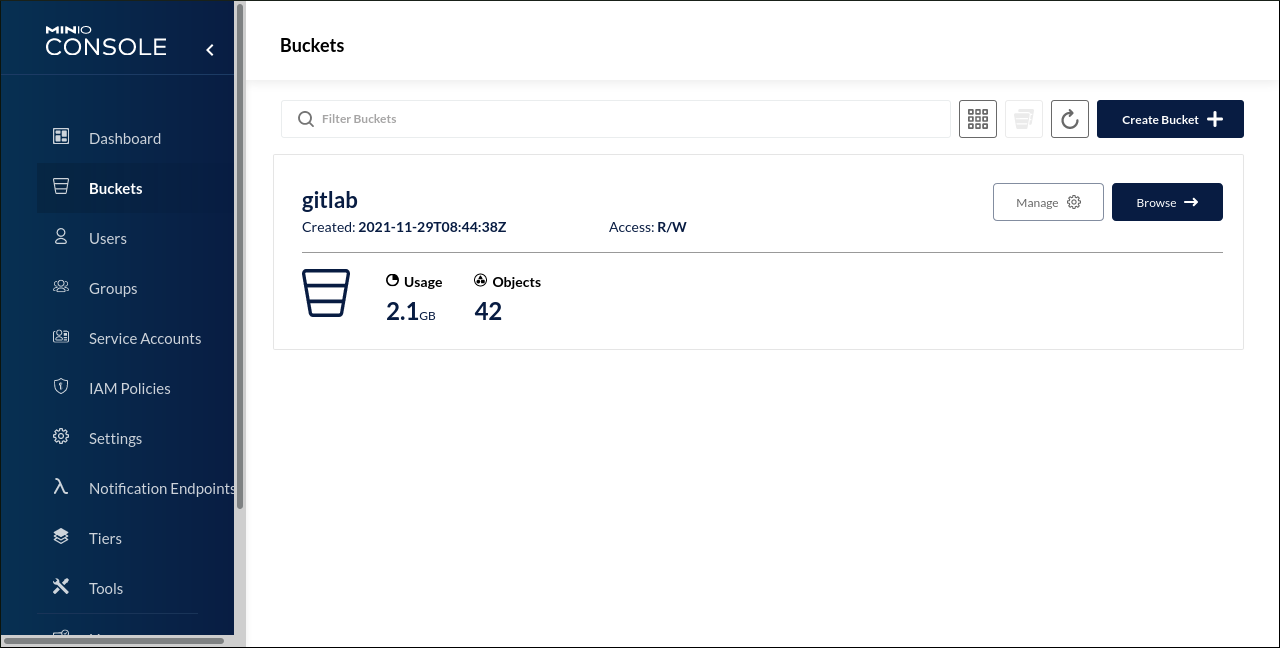

The next step is creating a bucket for GitLab to upload pipeline caches to. Click the "Buckets" link in the Minio Console sidebar, then the blue "Create Bucket" button in the top-right.

Give your bucket a name and click the "Create Bucket" button in the bottom-right. None of the optional features offered on this screen will be available in this simple Minio installation, which uses a single local disk. They're not needed anyway, as the bucket's content will be managed by GitLab Runner.
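Bucket names have to follow S3-style naming rules, and Minio applies similar ones. The Python sketch below is a simplified check based on Amazon S3's general-purpose bucket naming rules: 3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit; no consecutive dots; and not formatted as an IP address. It's a hypothetical helper and not exhaustive, so Minio's own validation remains authoritative.

```python
import ipaddress
import re

# First and last characters alphanumeric, 1 to 61 allowed characters between them.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Simplified S3-style bucket name check (not an exhaustive rule set)."""
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:
        return False  # consecutive dots are not allowed
    try:
        ipaddress.IPv4Address(name)
        return False  # names formatted like IP addresses are rejected
    except ValueError:
        return True
```

A plain lowercase name such as gitlab, as used in this guide, passes the check.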

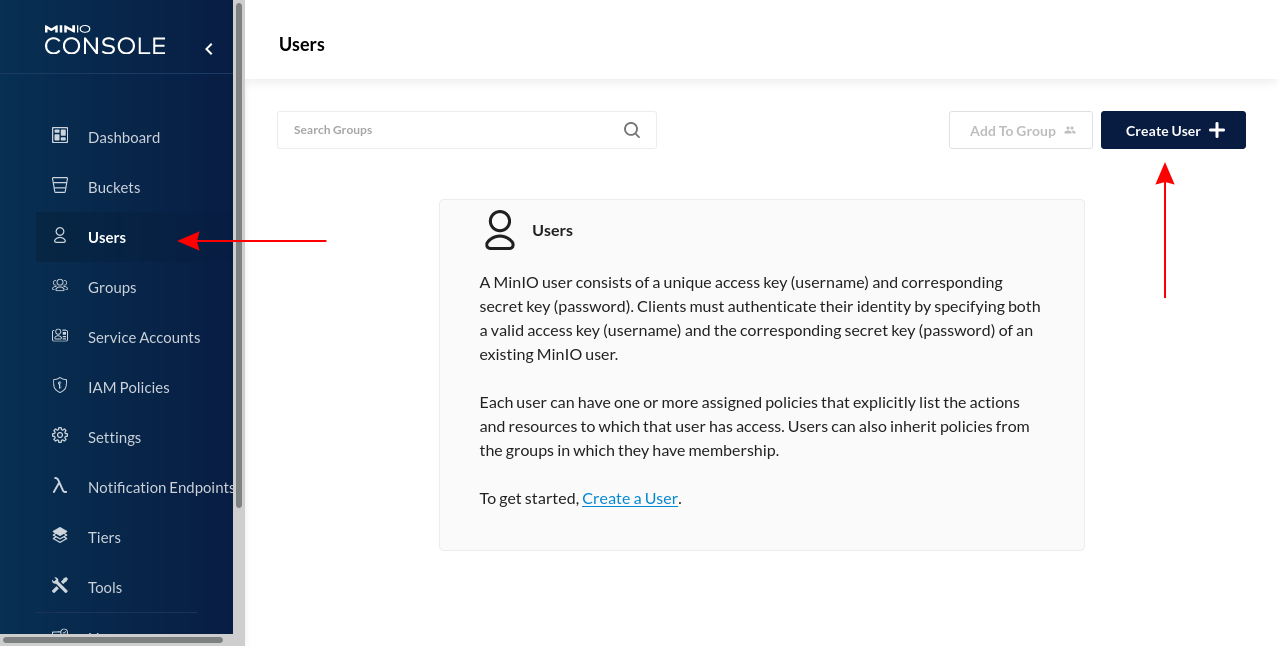

Once you've created your bucket, head to the Minio Console's "Users" page. Click "Create User" to add a user account for GitLab Runner. While this isn't strictly required, it's best practice because it means you don't need to supply GitLab with your root user credentials.

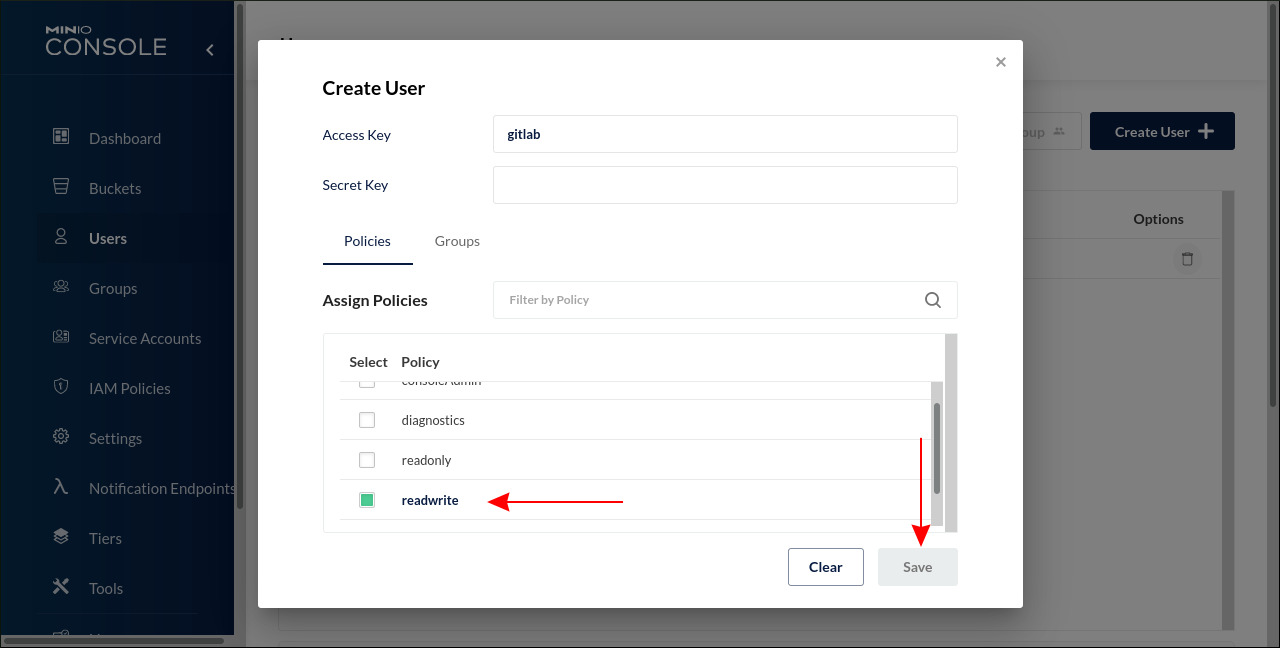

Type a username, such as gitlab, into the "Access Key" field. Supply a secure password in the "Secret Key" field. Assign the new user the readwrite policy from the list, then click the "Save" button. GitLab Runner requires read-write access so it can upload and retrieve your cached data.

Configuring GitLab Runner

Now you can integrate Minio into GitLab Runner. Open your GitLab Runner config file; this is usually found at /etc/gitlab-runner/config.toml when GitLab Runner is installed from the official packages and runs as root.

Find the [runners.cache] section within your runner's [[runners]] entry and add the following lines:

[runners.cache]

Type = "s3"

Shared = true

[runners.cache.s3]

AccessKey = "gitlab"

SecretKey = "P@$$w0rd"

BucketName = "gitlab"

Insecure = true

ServerAddress = "192.168.0.1:9600"

Here's the effect of these values:

- Type - Informs GitLab Runner that an S3-compatible storage engine is to be used.
- Shared - Lets all runners using this configuration share the same cache entries, so concurrent jobs on different runners can restore each other's caches.
- AccessKey and SecretKey - These should match the credentials for the Minio user account you created in the Minio Console.
- BucketName - Change this to the name of the bucket you created in the Minio Console.
- Insecure - Enables GitLab Runner to access Minio over plain HTTP. If you'll be using your Minio server for additional purposes, you should follow the Minio docs to set up HTTPS for your installation.
- ServerAddress - Add your Minio server's IP address and API port here. This informs GitLab Runner of the S3 server's connection details.

Restart the GitLab Runner service to ensure your changes take effect:

sudo gitlab-runner restart

Run Your CI Jobs

Your CI jobs should now pull the caches defined in your .gitlab-ci.yml from your Minio server and push updated caches back to it. This enables the use of shared caches that parallel jobs can access painlessly.

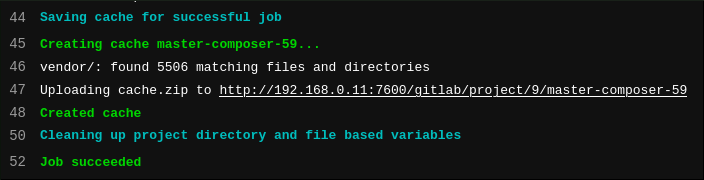

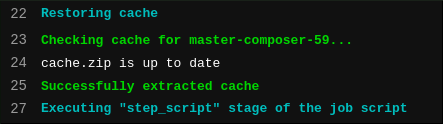

When everything's working, you'll see an "Uploading cache.zip" line in your CI job logs, confirming that GitLab Runner is using your object storage server. Subsequent jobs that reference the cache should include "cache.zip is up to date" and "Successfully extracted cache" near the top of the log.
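If you want to check for these messages automatically, for example across many job logs, a case-insensitive substring search is enough. This Python sketch looks for the three phrases mentioned above. The function name is hypothetical, and the exact log wording may vary between GitLab Runner versions, so treat the markers as assumptions to adjust against your own logs.

```python
# Phrases taken from the job log messages described above (wording may vary by version).
CACHE_MARKERS = {
    "uploaded": "uploading cache.zip",
    "up_to_date": "cache.zip is up to date",
    "extracted": "successfully extracted cache",
}


def cache_activity(log_text: str) -> dict[str, bool]:
    """Report which cache-related phrases appear in a CI job log."""
    lowered = log_text.lower()
    return {name: marker in lowered for name, marker in CACHE_MARKERS.items()}
```

A job that restored the cache would report `up_to_date` and `extracted` as True; a job that saved it would report `uploaded` as True.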

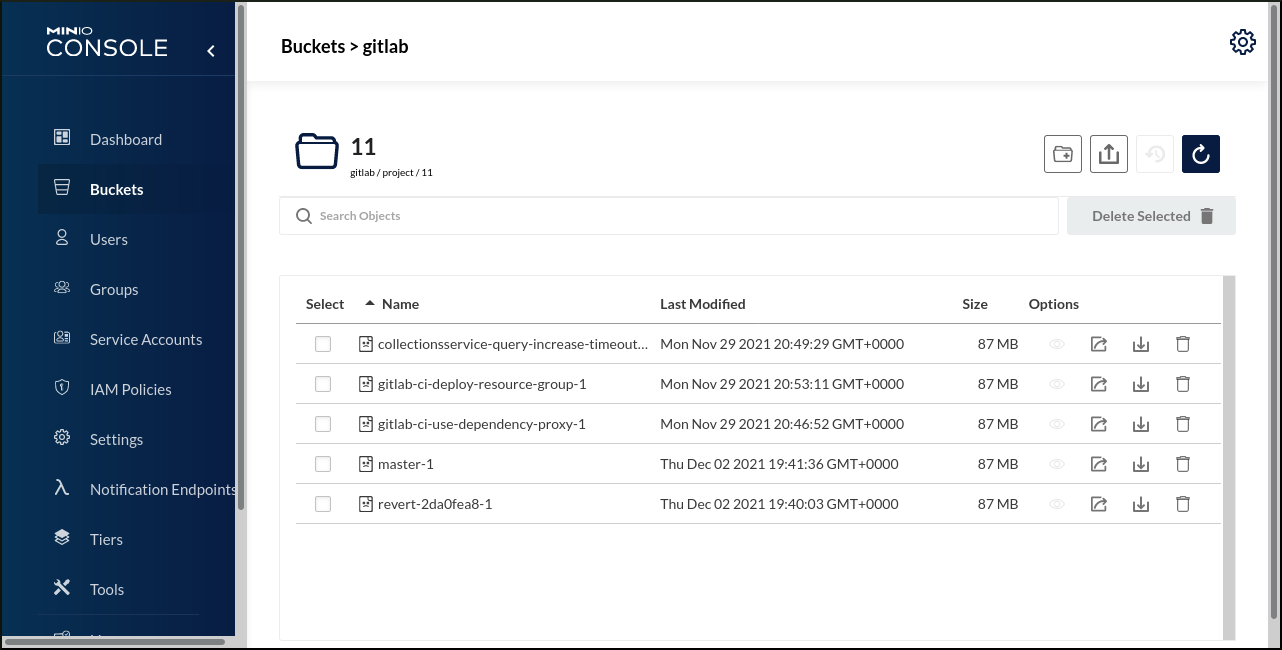

You can browse currently stored caches by inspecting your bucket in the Minio Console. This also gives you a convenient way to check disk utilization and delete old caches to free up space. GitLab creates a directory for each of your project IDs. Within that folder you'll find ZIP archives containing your caches, each one named by branch and the cache name given in your .gitlab-ci.yml.
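If you'd rather script this housekeeping than click through the Console, the Python sketch below totals cache sizes per project and lists caches older than a cutoff. It works on a plain list of (key, size, last-modified) records, which you'd obtain from an S3 client's object listing or Minio's own tooling (not shown). The CacheObject type is hypothetical, and so is the assumed key layout, in which the path segment after "project" is the project ID.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from typing import NamedTuple, Optional


class CacheObject(NamedTuple):
    key: str                 # object path in the bucket (layout assumed, see project_id)
    size: int                # size in bytes
    last_modified: datetime  # timezone-aware timestamp


def project_id(key: str) -> str:
    """Return the segment after 'project' in a key, or 'unknown' (assumed layout)."""
    parts = key.split("/")
    if "project" in parts:
        index = parts.index("project")
        if index + 1 < len(parts):
            return parts[index + 1]
    return "unknown"


def usage_by_project(objects: list[CacheObject]) -> dict[str, int]:
    """Sum cache sizes in bytes per project ID."""
    totals: dict[str, int] = defaultdict(int)
    for obj in objects:
        totals[project_id(obj.key)] += obj.size
    return dict(totals)


def stale_caches(objects: list[CacheObject], max_age_days: int = 30,
                 now: Optional[datetime] = None) -> list[str]:
    """List keys of caches not modified within the last `max_age_days` days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [obj.key for obj in objects if obj.last_modified < cutoff]
```

The keys returned by `stale_caches` are candidates for deletion; GitLab Runner should simply rebuild a cache the next time a job needs it.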

Conclusion

A basic Minio installation is easy to configure as a shared cache for GitLab Runner. This ensures the cache is used reliably when multiple jobs run concurrently. It's a worthwhile addition to your self-hosted GitLab installation.

While shared caching will improve performance in many cases, beware that this isn't universally true. It adds overhead to the pipeline process, as GitLab Runner needs to compress your job's cache and upload it to Minio before recording a successful result. When a later job restores the cache, the ZIP needs to be pulled from Minio and decompressed before your script can begin. It's worth monitoring your own pipelines after switching to object storage to check you're getting the results you expected.
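One lightweight way to monitor this is to compare the median duration of the same job before and after the switch. This Python sketch uses the standard statistics module; the duration lists are whatever you collect yourself (for example, from your pipeline history), and the function name is hypothetical.

```python
from statistics import median


def median_change_percent(before: list[float], after: list[float]) -> float:
    """Percentage change in median job duration; negative means jobs got faster."""
    if not before or not after:
        raise ValueError("both duration lists need at least one value")
    baseline = median(before)
    return (median(after) - baseline) / baseline * 100
```

For example, `median_change_percent([100, 120, 110], [90, 100, 95])` compares medians of 110 and 95 seconds and returns roughly -13.6, meaning the job got about 14% faster.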