Rising demand for HPC (high-performance computing) and AI-powered cloud applications has created a need for very powerful datacenter GPUs. NVIDIA has long dominated this field, but AMD's latest MI100 GPU presents some serious competition.

A Card For The HPC Market

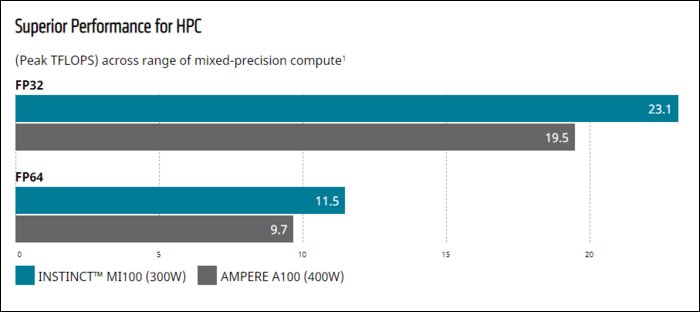

The card is fast, seriously fast. NVIDIA's high-end A100 GPU peaks at 9.7 TFLOPS in FP64 (double-precision) workloads. The new AMD Instinct MI100 leaps past that at 11.5 TFLOPS.
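As a quick sanity check on these figures, the Python snippet here computes how much higher the MI100's peak FP64 number is. It compares theoretical peaks only; sustained throughput in real applications also depends on memory bandwidth, software maturity and how well a workload maps to each card. The helper name is just an illustration.

```python
# Peak FP64 throughput figures quoted in the article, in TFLOPS (theoretical peaks).
A100_FP64_TFLOPS = 9.7
MI100_FP64_TFLOPS = 11.5


def relative_speedup(new: float, old: float) -> float:
    """Return how much faster `new` is than `old`, as a fraction (0.19 means 19%)."""
    return new / old - 1.0


gain = relative_speedup(MI100_FP64_TFLOPS, A100_FP64_TFLOPS)
print(f"MI100 peak FP64 advantage: {gain:.1%}")
```

This prints `MI100 peak FP64 advantage: 18.6%`, so on paper the MI100 is a bit under 20% ahead in double precision.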

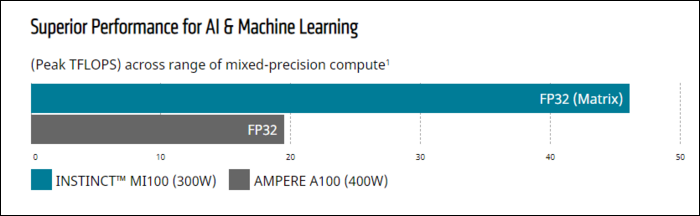

Of course, NVIDIA's cards offer other speedups for AI-specific workloads, such as the TensorFloat-32 (TF32) number format and fine-grained structured sparsity. For AI and machine learning workloads NVIDIA still leads, since its cards are built specifically for tensor-based operations.

But for general-purpose high-performance computing, the MI100 takes the crown for raw compute power. Plus, it's nearly half the price and much more efficient per watt.

The new CDNA architecture also improves mixed-precision performance: AMD's "Matrix Core" technology delivers nearly 7x the FP16 performance of its previous generation of cards.

AMD CPUs and Instinct GPUs are slated to power two of the US Department of Energy's exascale supercomputers. "Frontier" is planned to be built next year with custom EPYC CPUs and Instinct GPUs, and will deliver more than 1.5 exaflops of peak computing power. "El Capitan" is planned for 2023 on next-generation hardware, and will deliver more than 2 exaflops of double-precision performance.

Can ROCm Live Up to CUDA?

Of course, all of this power is useless if the software doesn't support it. It's no secret that NVIDIA has managed to make machine learning a bit of a walled garden.

NVIDIA's compute platform is called CUDA (Compute Unified Device Architecture). It's proprietary and only works with NVIDIA cards. Because those cards have historically been the fastest, many applications are built for CUDA first and foremost, and some support nothing else.

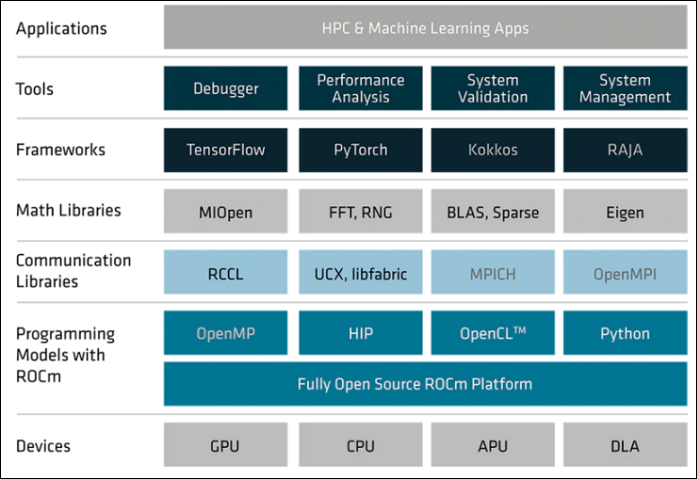

There are cross-platform programming models, most notably OpenCL, which AMD supports very well with its ROCm platform. Both NVIDIA and AMD cards support OpenCL, but NVIDIA has historically invested far less in its OpenCL implementation than in CUDA, so the same workload often runs slower through OpenCL on an NVIDIA card. As a result, not all applications bother to support OpenCL.
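To make "cross-platform" concrete, this minimal sketch uses the third-party pyopencl package (an assumption; the article doesn't mention it) to list every OpenCL platform and device on a machine. With working drivers installed, GPUs from different vendors show up side by side through the same API. The helper name `list_opencl_devices` is hypothetical.

```python
from __future__ import annotations


def list_opencl_devices() -> list[tuple[str, str]]:
    """Return (platform name, device name) pairs for every visible OpenCL device.

    Returns an empty list if pyopencl is missing or OpenCL cannot be queried.
    """
    try:
        import pyopencl as cl  # third-party package, assumed to be installed
    except ImportError:
        return []

    pairs = []
    try:
        # Each vendor's driver registers its own OpenCL platform.
        for platform in cl.get_platforms():
            for device in platform.get_devices():
                pairs.append((platform.name, device.name))
    except cl.Error:
        # Raised e.g. when no OpenCL driver (ICD) is installed on this machine.
        return []
    return pairs


if __name__ == "__main__":
    for platform_name, device_name in list_opencl_devices():
        print(f"{platform_name}: {device_name}")
```

Which platforms appear depends entirely on the drivers installed, so an empty result usually means a missing runtime rather than missing hardware.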

Ultimately, you'll need to do your own research to see whether the application you intend to run works on AMD cards, and be prepared for some tinkering and bug fixing. NVIDIA GPUs, on the other hand, are mostly plug and play, so even if AMD's hardware is faster, NVIDIA's closed-source software can keep AMD at a disadvantage.

However, the situation is improving: AMD is committed to open-sourcing its software and building an open ecosystem. TensorFlow and PyTorch, two very popular ML frameworks, both support the ROCm ecosystem.
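As a starting point for that research, this minimal sketch reports whether an installed PyTorch build targets ROCm or CUDA. It assumes a reasonably recent PyTorch, where `torch.version.hip` holds a version string on ROCm builds and is `None` otherwise; the function name is hypothetical. It degrades gracefully if PyTorch isn't installed.

```python
def describe_pytorch_gpu_backend() -> str:
    """Summarize which GPU backend (if any) the installed PyTorch build targets."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds report a HIP version here; CUDA and CPU-only builds leave it as None.
    hip_version = getattr(torch.version, "hip", None)
    cuda_version = getattr(torch.version, "cuda", None)

    if hip_version:
        backend = f"ROCm/HIP {hip_version}"
    elif cuda_version:
        backend = f"CUDA {cuda_version}"
    else:
        backend = "CPU-only build"

    # ROCm builds reuse the torch.cuda API for AMD GPUs, so this check covers both vendors.
    gpu_available = torch.cuda.is_available()
    return f"{backend}; GPU available: {gpu_available}"


if __name__ == "__main__":
    print(describe_pytorch_gpu_backend())
```

Because ROCm builds of PyTorch keep the `torch.cuda` namespace, much existing "CUDA" PyTorch code can run on AMD GPUs without source changes, although custom CUDA extensions are another story.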

Hopefully the raw specs of AMD's latest offerings can push the industry toward a more competitive environment. After all, they're already being put to use in supercomputers.