The Stable Diffusion model can generate images with artificial intelligence right on your computer, and there's now a modified version that is perfect for creating repeating patterns.

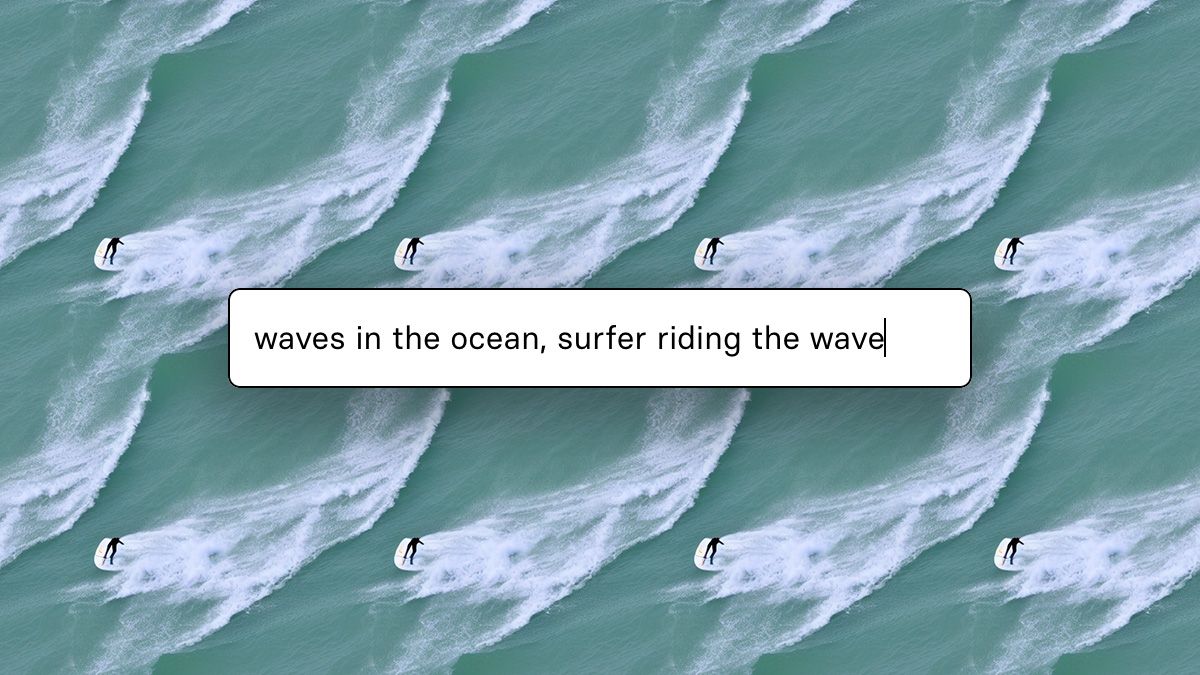

"Tileable Stable Diffusion," developed by Thomas Moore, is a modified version of Stable Diffusion, the same model that can generate incredible AI art and lifelike images. The main difference is that this fork (software that has split off from another codebase) is designed to generate images that repeat seamlessly when tiled.

Perhaps the best use for this is creating textures for objects in video games, especially since the grid size can be customized to match the requirements of a specific project or game engine. Patterns can also come in handy for device wallpapers, website backgrounds (especially if you're going for a '90s aesthetic), fill patterns in Photoshop, or even physical wallpaper for a real room.

According to Moore, the modified model "uses a circular convolution, so the model sees the image as if all the parallel edges were connected. Like a tube, but in both directions to form a torus." As a result, each pattern has no discernible edge when it is repeated as a tile, which is exactly how game textures are supposed to work.
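To make the "torus" idea a little more concrete, here is a minimal sketch in plain Python of a 2D convolution with circular (wrap-around) padding. It is a simplified illustration written under our own assumptions, not code from Moore's fork, and the function names `circular_conv2d` and `roll` are hypothetical. Indices that run past one edge wrap around to the opposite edge, so the top-left output pixel is computed partly from bottom-right input pixels, and shifting the input around the torus shifts the output by exactly the same amount. There is no special border where a seam could form.

```python
def circular_conv2d(image, kernel):
    """Hypothetical helper: 2D cross-correlation (what most neural-network
    "convolution" layers compute) using wrap-around indexing at the borders."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2  # center the kernel on each output pixel
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    # The modulo makes row -1 mean the last row and column w
                    # mean column 0: the image behaves like a torus.
                    acc += kernel[i][j] * image[(y + i - oy) % h][(x + j - ox) % w]
            out[y][x] = acc
    return out


def roll(image, dy, dx):
    """Hypothetical helper: shift an image around the torus by (dy, dx)."""
    h, w = len(image), len(image[0])
    return [[image[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    # A single bright pixel in the bottom-right corner...
    img = [[0.0] * 4 for _ in range(4)]
    img[3][3] = 1.0
    box = [[1.0] * 3 for _ in range(3)]
    # ...still contributes to the top-left output pixel, because the edges wrap.
    print(circular_conv2d(img, box)[0][0])
```

With ordinary zero padding, that corner pixel would never reach the opposite corner, so the left and right (or top and bottom) edges of the output could disagree and show a visible seam when tiled. Deep-learning frameworks provide this behavior built in; PyTorch's `nn.Conv2d`, for example, accepts `padding_mode="circular"`.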

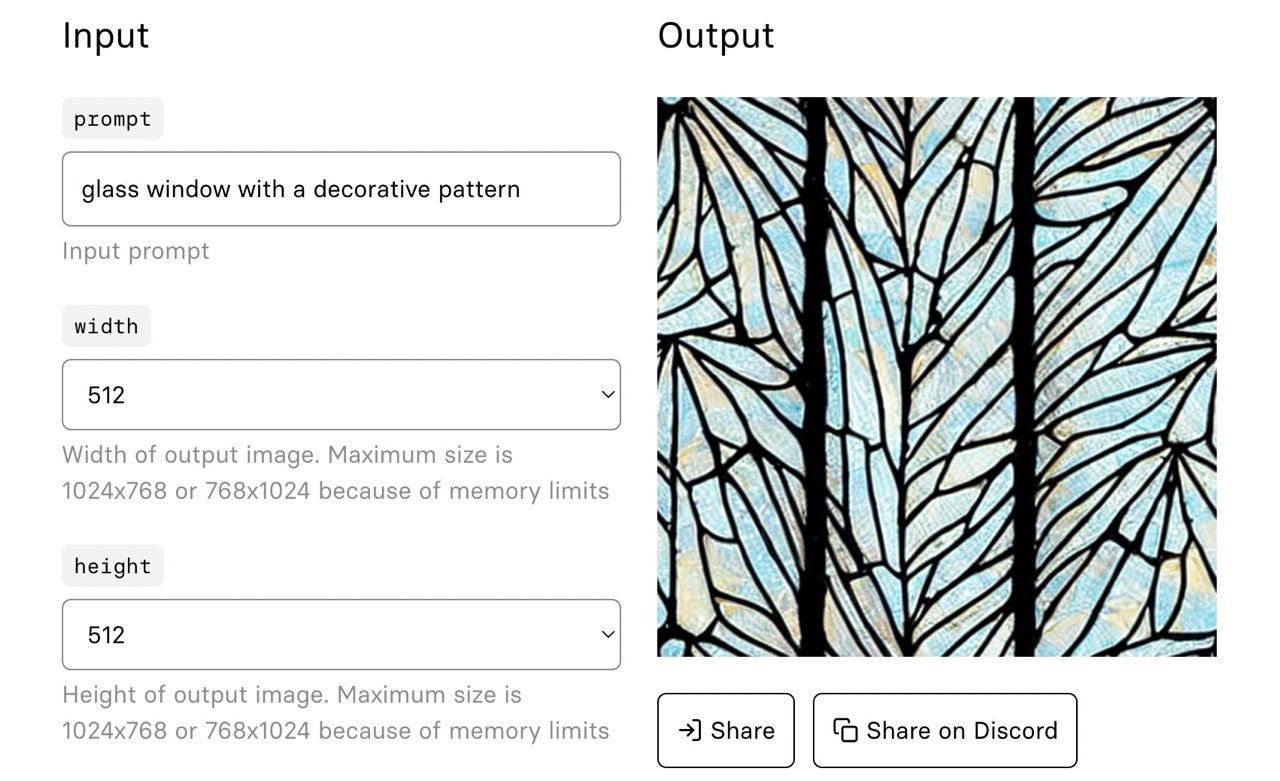

You can try out the model in your web browser, where images are generated on Nvidia T4 graphics cards in remote servers, or you can run it locally on your PC with the code from the GitHub repository.

Source: @ReplicateHQ