Key Takeaways

The wget command is meant primarily for downloading webpages and websites and, compared to cURL, supports fewer protocols. cURL handles remote file transfers of all kinds rather than only websites, and it supports additional features such as uploads and the automatic decompression of compressed downloads.

If you ask a bunch of Linux users what they download files with, some will say wget and others will say cURL. What's the difference, and is one better than the other?

The History of wget and cURL

Government researchers were starting to connect different networks together as far back as the 1960s, giving rise to inter-connected networks. But the birth of the internet as we know it came about on Jan. 1st, 1983 when the TCP/IP protocol was implemented. This was the missing link. It allowed disparate computers and networks to communicate using a common standard.

In 1991, CERN released their World Wide Web software which they had been using internally for a few years. The interest in this visual overlay for the internet was immediate and widespread. By the close of 1994, there were 10,000 web servers and 10 million users.

These two milestones---the internet and the web---represent very different faces of connectivity. But they share a lot of the same functionality, too.

Connectivity means just that. You're connecting to some remote device, such as a server. And you're connecting to it because there is something on it that you need or want. But how do you retrieve that remotely-hosted resource to your local computer, from the Linux command line?

In 1996, two utilities were born that allow you to download remotely-hosted resources. They are wget, which was released in January, and cURL which was released in December. They both operate on the Linux command line. They both connect to remote servers, and they both retrieve stuff for you.

But this isn't just the usual case of Linux providing two or more tools to do the same job. These utilities have different purposes and different specialisms. The trouble is, they're similar enough to cause confusion about which one to use, and when.

Consider two surgeons. You probably don't want an eye surgeon performing your heart bypass surgery, nor do you want the heart surgeon doing your cataract operation. Yes, they're both highly-skilled medical professionals, but that doesn't mean they're drop-in replacements for one another.

The same is true for wget and cURL.

wget and cURL Compared

The "w" in the wget command is an indicator of its intended purpose. Its primary purpose is to download webpages, or even entire websites. Its man page describes it as a utility to download files from the Web using the HTTP, HTTPS, and FTP protocols.

By contrast, cURL works with 26 protocols, including SCP, SFTP, and SMBS as well as HTTPS. Its man page says it is a tool for transferring data to or from a server. It isn't tailored to work with websites, specifically. It is meant for interacting with remote servers, using any of the many internet protocols it supports.
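
The exact protocol list depends on how your copy of cURL was built, so the count on your machine may differ from ours. As a rough sketch, you can pull the "Protocols:" line out of the curl --version output and split it into one protocol per line. The function name curl_protocols is made up for this illustration.

```shell
# curl_protocols is a hypothetical helper name, not part of cURL.
# It prints one supported protocol per line, taken from the
# "Protocols:" line of `curl --version`.
curl_protocols() {
    curl --version | sed -n 's/^Protocols: //p' | tr ' ' '\n' | sed '/^$/d'
}

# Usage (requires curl to be installed):
#   curl_protocols            # list the protocols
#   curl_protocols | wc -l    # count them
```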

So, wget is predominantly website-centric, while cURL is something that operates at a deeper level, down at the plain-vanilla internet level.

wget is able to retrieve webpages, and it can recursively navigate entire directory structures on webservers to download entire websites. It's also able to adjust the links in the retrieved pages so that they correctly point to the webpages on your local computer, and not to their counterparts on the remote webserver.

cURL lets you interact with the remote server. It can upload files as well as retrieve them.
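
As a minimal sketch of an upload, the -T (upload file) option sends a local file to a remote URL. The wrapper name upload_file, the server address, and the credentials shown here are all placeholders, so substitute details for a server you are allowed to write to.

```shell
# upload_file is a hypothetical wrapper name used only for this sketch.
# -T (--upload-file) sends a local file to the given URL;
# -u supplies the user name and password as user:password.
upload_file() {
    local_file=$1
    remote_url=$2
    credentials=$3
    curl -T "$local_file" -u "$credentials" "$remote_url"
}

# Usage, with a placeholder server and credentials:
#   upload_file report.pdf ftp://ftp.example.com/incoming/ alice:secret
```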

cURL works with SOCKS4 and SOCKS5 proxies, and HTTPS to the proxy. It supports the automatic decompression of compressed files in the GZIP, BROTLI, and ZSTD formats. cURL also lets you run multiple transfers in parallel.
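
Here is a rough sketch of how those features look on the command line. The function names, host names, and URLs are placeholders we've made up. Which compression formats --compressed actually requests depends on how your cURL was built.

```shell
# All function names, host names, and URLs here are placeholders.

# Ask the server for a compressed response and decompress it automatically.
# Arguments: URL, output file name.
fetch_compressed() {
    curl --compressed -o "$2" "$1"
}

# Download two URLs at the same time, keeping their remote file names.
# --parallel (-Z) needs cURL 7.66.0 or newer.
fetch_pair_in_parallel() {
    curl --parallel -O "$1" -O "$2"
}

# Route a download through a SOCKS5 proxy.
# Arguments: proxy host:port, URL, output file name.
fetch_via_socks5() {
    curl --socks5 "$1" -o "$3" "$2"
}

# Usage:
#   fetch_compressed https://example.com/data.json data.json
#   fetch_pair_in_parallel https://example.com/a.iso https://example.com/b.iso
#   fetch_via_socks5 localhost:1080 https://example.com/file.txt file.txt
```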

The overlap between them is that wget and cURL both let you retrieve webpages, and use FTP servers.

It's only a rough metric, but you can get some appreciation of the relative feature sets of the two tools by looking at the length of their man pages. On our test machine, the man page for wget is 1433 lines long. The man page for cURL is a whopping 5296 lines.
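
If you'd like to repeat the comparison on your own machine, piping a man page through wc -l is one simple way to count its lines. The helper name man_page_lines is hypothetical, and your numbers will likely differ from ours depending on the version of each tool and the width man formats the page to.

```shell
# man_page_lines is a hypothetical helper name for this sketch.
# It prints the number of lines in the formatted man page for a command.
man_page_lines() {
    man "$1" | wc -l
}

# Usage:
#   man_page_lines wget
#   man_page_lines curl
```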

A Quick Peek at wget

Because wget is a part of the GNU project, you should find it preinstalled on most Linux distributions. Using it is simple, especially for its most common uses: downloading webpages or files.

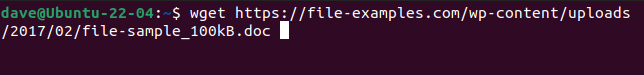

Just use the wget command with the URL to the webpage or remote file.

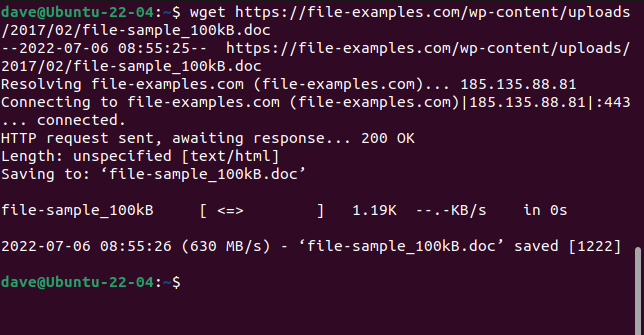

wget https://file-examples.com/wp-content/uploads/2017/02/file-sample_100kB.doc

The file is retrieved and saved on your computer with its original name.

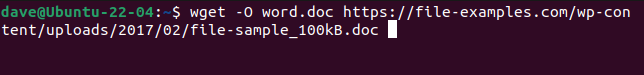

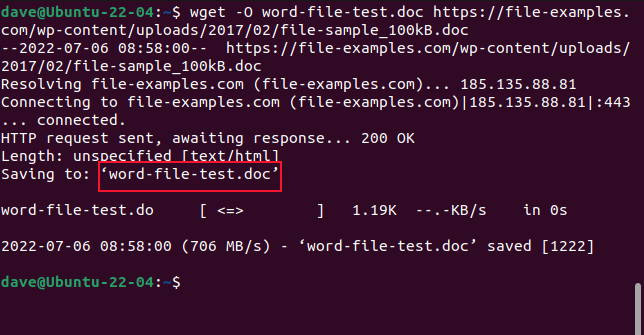

To have the file saved with a new name, use the -O (output document) option.

wget -O word-file-test.doc https://file-examples.com/wp-content/uploads/2017/02/file-sample_100kB.doc

The retrieved file is saved with our chosen name.

Don't use the -O option when you're retrieving websites. If you do, all the retrieved files are appended into one.

To retrieve an entire website, use the -m (mirror) option and the URL of the website's home page. You'll also want to use --page-requisites to make sure all the supporting files needed to render the webpages properly are downloaded too. The --convert-links option adjusts links in the retrieved files so they point to the copies on your local computer instead of to locations on the remote website.
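
Put together, those three options look something like this. The wrapper name mirror_site is ours, and example.com is only a placeholder, so swap in the home page of a site you have permission to copy.

```shell
# mirror_site is a hypothetical wrapper name used only for this sketch.
# -m                 mirror the site (recursive download with timestamping)
# --page-requisites  also fetch the images, CSS, and scripts pages need
# --convert-links    rewrite links to point at the local copies
mirror_site() {
    wget -m --page-requisites --convert-links "$1"
}

# Usage, with a placeholder URL:
#   mirror_site https://example.com/
```

By default, wget places the mirrored files in a directory named after the site's host name, inside the current directory.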

A Quick Peek at cURL

cURL is an independent open-source project. On our test machines, it was preinstalled on Manjaro 21 and Fedora 36 but had to be installed on Ubuntu 21.04.

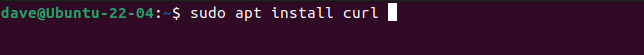

This is the command to install cURL on Ubuntu.

sudo apt install curl

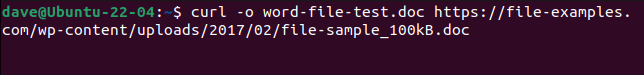

To download the same file as we did with wget, and to save it with the same name, we need to use this command. Note that the -o (output) option is lowercase with cURL.

curl -o word-file-test.doc https://file-examples.com/wp-content/uploads/2017/02/file-sample_100kB.doc

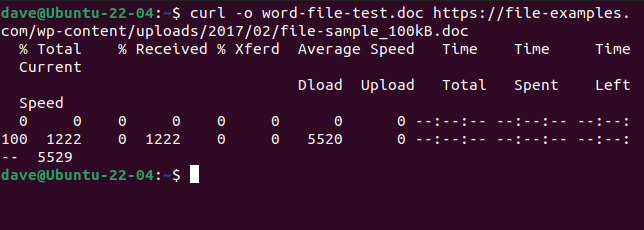

The file is downloaded for us. A text-based progress meter is displayed during the download.
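
If you'd rather keep the file's original name, as wget does by default, cURL's uppercase -O (remote name) option is the one to reach for. This sketch also adds --progress-bar, which swaps the default progress meter for a simple bar. The wrapper name fetch_keep_name is hypothetical.

```shell
# fetch_keep_name is a hypothetical wrapper name for this sketch.
# -O (uppercase) saves the file under its remote name;
# --progress-bar shows a simple bar instead of the default progress meter.
fetch_keep_name() {
    curl --progress-bar -O "$1"
}

# Usage:
#   fetch_keep_name https://file-examples.com/wp-content/uploads/2017/02/file-sample_100kB.doc
```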

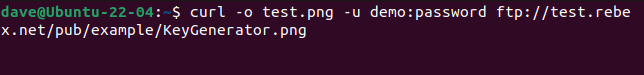

To connect to an FTP server and download a file, use the -u (user) option and provide a user name and password pair, like this:

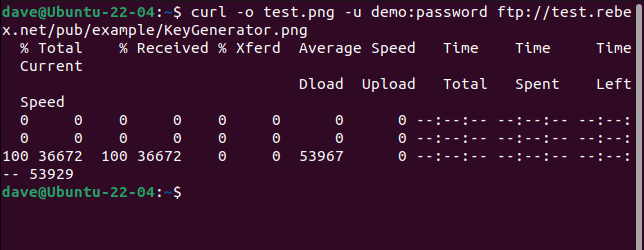

curl -o test.png -u demo:password ftp://test.rebex.net/pub/example/KeyGenerator.png

This downloads and renames a file from a test FTP server.

There Is No Best

It's impossible to answer "Which one should I use" without asking "What are you trying to do?"

Once you understand what wget and cURL do, you'll realize they're not in competition. They don't satisfy the same requirement and they're not trying to provide the same functionality.

Downloading webpages and websites is where wget's superiority lies. If that's what you're doing, use wget. For anything else---uploading, for example, or using any of the multitudes of other protocols---use cURL.