OpenAI's DALL-E 2 has come as a shock to those who thought that artificial intelligence would never (or at least not quickly) start to infiltrate the realm of creativity. But is DALL-E 2 here to take artists' jobs?

How Does DALL-E 2 Work?

DALL-E 2 is so impressive it almost seems like magic, but the broad details of how it creates such stunning, realistic images aren't that hard to understand.

There are two main components to DALL-E 2. The first is how it understands language. While the original DALL-E was built on a version of GPT-3, OpenAI's large language model, DALL-E 2 relies on another OpenAI model known as CLIP (Contrastive Language-Image Pre-training).

CLIP allows a computer to connect natural language with what appears in images. By training the neural net on hundreds of millions of images and their natural language descriptions from (mainly) the internet, it learns the relationships between concepts.
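To make the idea of "learning the relationships between concepts" a little more concrete, here is a minimal Python sketch of how a CLIP-style system can match a picture to a description. It assumes every image and every caption has already been turned into a short list of numbers (an "embedding"). The three-number embeddings, the file names, and the `best_caption` helper are all invented for illustration; a real model learns much longer embeddings with neural networks, and this is not OpenAI's code.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (max 1.0)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, made up by hand for this example.
# A trained model would produce these from raw text and pixels.
TEXT_EMBEDDINGS = {
    "a photo of a hotdog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.0, 0.2, 0.9],
    "a marble statue": [0.1, 0.9, 0.1],
}

IMAGE_EMBEDDINGS = {
    "hotdog.png": [0.8, 0.2, 0.1],
    "cat.png": [0.1, 0.1, 0.8],
}


def best_caption(image_name):
    """Pick the caption whose embedding is most similar to the image's."""
    image_vec = IMAGE_EMBEDDINGS[image_name]
    return max(
        TEXT_EMBEDDINGS,
        key=lambda caption: cosine_similarity(TEXT_EMBEDDINGS[caption], image_vec),
    )


if __name__ == "__main__":
    for name in IMAGE_EMBEDDINGS:
        print(name, "->", best_caption(name))
```

The point of the sketch is only that related images and descriptions end up close together in the same numeric space, which is what lets a description steer image generation later on.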

In a sense, DALL-E is the reverse of a common machine learning practice, where you provide an image and the AI attempts to describe what it sees.

Think of that infamous "Not a Hotdog" app from the TV show Silicon Valley. The difference here is that instead of asking the AI whether the picture is a hotdog or not, you're describing the hotdog and it's generating an entirely original hotdog image based on everything it's learned about them.

The second major part of DALL-E 2 is how it generates images. It uses a method known as "diffusion." Specifically, the model's understanding of a natural language description is turned into an image using a model based on OpenAI's GLIDE. The process starts with an image consisting of randomly generated noise and gradually strips away that noise until what remains matches the description. It's somewhat reminiscent of a sculptor starting with a block of marble and chipping away until only a statue remains.
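The "start with noise, then gradually remove it" loop can be sketched in a few lines of Python. This is a toy under heavy assumptions, not how GLIDE or DALL-E 2 is implemented: the "image" is just four numbers, and the hypothetical `denoise_step` function cheats by already knowing the finished picture, whereas a real diffusion model uses a trained neural network to guess which part of the image is noise. The names `generate`, `steps`, and `strength` are likewise invented for this example.

```python
import random


def denoise_step(image, target, strength):
    """Remove a fraction of the remaining noise from every pixel.

    Assumption: this toy knows the target. A real diffusion model
    has to predict the noise with a trained neural network instead.
    """
    return [pixel + strength * (goal - pixel) for pixel, goal in zip(image, target)]


def generate(target, steps=50, strength=0.2, seed=0):
    """Start from pure random noise and denoise it step by step."""
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in target]  # the "block of marble"
    for _ in range(steps):
        image = denoise_step(image, target, strength)
    return image


def mean_abs_error(a, b):
    """Average per-pixel distance between two images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, 0.5]  # a tiny four-pixel "picture"
    noise_only = generate(target, steps=0)
    finished = generate(target, steps=50)
    print("error before denoising:", mean_abs_error(noise_only, target))
    print("error after denoising: ", mean_abs_error(finished, target))
```

Each pass through the loop plays the role of the sculptor's chisel: a little more noise comes off, and the picture gets a little closer to what was described.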

For a much more technical and detailed description of DALL-E 2 under the hood, we heartily recommend the DALL-E 2 explainer on the AssemblyAI deep learning blog.

Why DALL-E 2 Is So Disruptive

DALL-E 2 is far from the first machine learning software that can generate images. There have been many prior systems, and DALL-E 2 builds on the lessons learned by those other projects. So why does this time feel like a disruptive turning point?

One significant reason is that the images DALL-E and DALL-E 2 make are aesthetically pleasing. Other AI image generation systems often create images that people describe as disturbing or like something from a dream. It's a little like the Uncanny Valley, but for the visual arts. DALL-E 2 creates images that clearly have an artistic eye or some sense of aesthetics behind them.

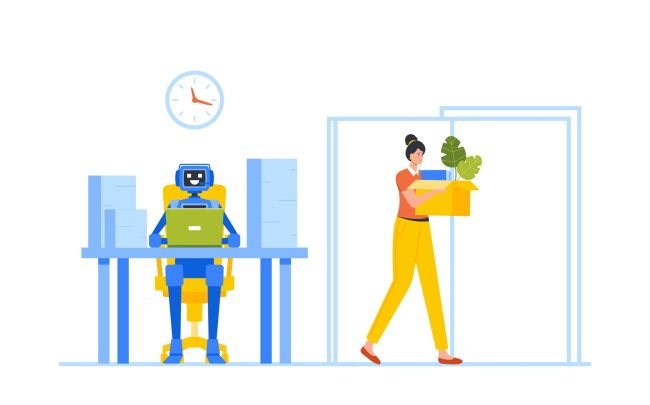

So the images that DALL-E 2 creates are comparable to those made by talented artists or photographers who have spent a lifetime developing their sense of aesthetics. It's not hard to imagine someone like that looking at the images DALL-E 2 can spit out in seconds and feeling like they're about to become irrelevant.

Not only can the system make beautiful high-resolution images in seconds from natural language prompts, but it can also tweak and edit those images, or provide multiple variations of an existing image---even one that the user provides. So does this mean that artists should pack up their easels and drawing tablets and "learn to code" instead?

DALL-E 2 Means Artists Will Change, Not Disappear

OpenAI has been very careful not to simply release its technology to the world. This is sensible, since there's clearly much scope for abuse. Yet, now that OpenAI has shown it can be done, it will be no time at all before commercial or independent AI researchers replicate what DALL-E does and make it available to everyone. Big players in the machine learning space have their own high-performance AI artists waiting in the wings, too, such as Google's Imagen.

Since Pandora's box can't be closed, we'll have to accept that the world of visual arts is going to irrevocably change, but that doesn't mean artists are a thing of the past.

One way to look at it is that technology like this puts the power to generate art in the hands of anyone. The emphasis now moves from the technical ability to create images to the ability to accurately describe and iterate on your vision until what you see on screen matches what you had in mind. In other words, more people will now have the ability to express themselves visually, just as more people can now do accurate calculations thanks to the existence of calculators.

Certain types of artists may no longer have viable business models. If you're making a living doing commissions for a fee, it's hard to compete with a program that can make hundreds of images an hour based on a client's description and can change those images almost instantly. Instead, you may want to use these tools to realize your own vision, and then sell those unique images based on your sensibilities.

The Customer Is Always Right

It's also important to remember that ultimately these images are created for human consumption. We humans have our own set of values that go beyond convenience and technical superiority. In a world where generated art is abundant and therefore relatively cheap and disposable, there will always be an audience willing to appreciate (and buy) human-made art, simply because it may be a relative rarity.

In other words, software like DALL-E 2 might spell the end for artists who make a living churning out assembly-line artwork, but it's unlikely to dampen the prospects for artists who have something to say and a unique visual identity through which to say it.