The set command and its pipefail option dictate what happens when a failure occurs in a Bash script. There's more to think about than whether it should stop or carry on.

Bash Scripts and Error Conditions

Bash shell scripts are great. They're quick to write and they don't need compiling. Any repetitive or multi-stage action that you need to perform can be wrapped in a convenient script. And because scripts can call any of the standard Linux utilities, you're not limited to the capabilities of the shell language itself.

But problems can arise when you call an external utility or program. If it fails, the external utility will close down and send a return code to the shell, and it might even print an error message to the terminal. But your script will carry on processing. Perhaps that's not what you wanted. If an error occurs early in the execution of the script it might lead to worse issues if the rest of the script is allowed to run.

You could check the return code from each external process as it completes, but that becomes difficult when processes are piped into other processes. The return code will be from the process at the end of the pipe, not the one in the middle that failed. Of course, errors can occur inside your script too, such as trying to access an uninitialized variable.
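As a minimal sketch of that manual approach, the snippet below tests a single command directly in an if statement. The variable names target and result are made up for this illustration, and the exact non-zero code that ls reports differs between systems.

```shell
#!/bin/bash

# Hypothetical file name, used only for illustration.
target="imaginary-filename"

# Test the command directly in the if statement instead of inspecting $? later.
if ls "$target" > /dev/null 2>&1; then
    result="found"
else
    # In the else branch, $? still holds the return code of ls.
    result="ls failed with return code $?"
fi

echo "$result"
```

This works for one command, but repeating it after every line of a long script soon becomes tedious.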

The set command and its pipefail option let you decide what happens when errors like these occur. They also let you detect errors even when they happen in the middle of a pipe chain.

Here's how to use them.

Demonstrating the Problem

Here's a trivial Bash script. It echoes two lines of text to the terminal. You can run this script if you copy the text into an editor and save it as "script-1.sh."

#!/bin/bash

echo This will happen first

echo This will happen second

To make it executable you'll need to use chmod:

chmod +x script-1.sh

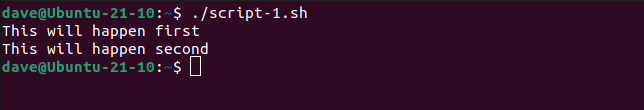

You'll need to run that command on each script if you want to run them on your computer. Let's run the script:

./script-1.sh

The two lines of text are sent to the terminal window as expected.

Let's modify the script slightly. We'll ask ls to list the details of a file that doesn't exist. This will fail. We saved this as "script-2.sh" and made it executable.

#!/bin/bash

echo This will happen first

ls imaginary-filename

echo This will happen second

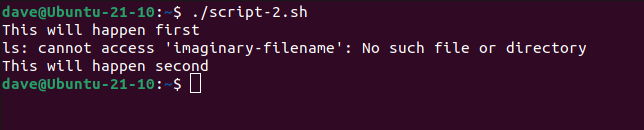

When we run this script we see the error message from ls.

./script-2.sh

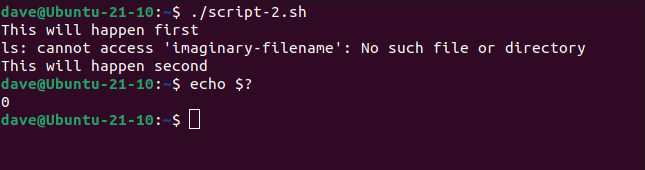

Although the ls command failed, the script continued to run. And even though there was an error during the script's execution, the return code from the script to the shell is zero, which indicates success. We can check this using echo and the $? variable which holds the last return code sent to the shell.

echo $?

The zero that gets reported is the return code from the second echo in the script. So there are two issues with this scenario. The first is that the script had an error but carried on running. That can lead to other problems if the rest of the script depends on the failed action having succeeded. The second is that if another script or process needs to check the success or failure of this script, it'll get a false reading.

The set -e Option

The set -e (exit) option causes a script to exit if any of the processes it calls generates a non-zero return code. Anything non-zero is taken to be a failure.

By adding the set -e option to the start of the script, we can change its behavior. This is "script-3.sh."

#!/bin/bash

set -e

echo This will happen first

ls imaginary-filename

echo This will happen second

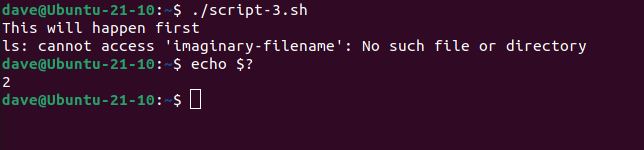

If we run this script we'll see the effect of set -e.

./script-3.sh

echo $?

The script is halted and the return code sent to the shell is a non-zero value.
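One detail worth knowing is that set -e only reacts to failures that aren't already being tested. The sketch below is an illustration rather than one of the numbered scripts: it runs a small script in a child shell with bash -c, and the variables output and status are names chosen for this example.

```shell
#!/bin/bash

# Run a small script in a child shell so its exit doesn't end this one.
output=$(bash -c '
set -e
# A failure that is tested by if does not trigger set -e.
if ! ls imaginary-filename 2> /dev/null; then
    echo "handled by if"
fi
# A failure on the left of || is also treated as handled.
ls imaginary-filename 2> /dev/null || echo "handled by ||"
# An unguarded failure stops the script here.
ls imaginary-filename 2> /dev/null
echo "never printed"
') || status=$?

echo "$output"
echo "return code: ${status:-0}"
```

The first two failures are dealt with by the script itself, so only the third, unguarded ls ends the run and passes a non-zero return code back to the caller.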

Dealing With Failures in Pipes

Piping adds more complexity to the problem. The return code that comes out of a piped sequence of commands is the return code from the last command in the chain. If there's a failure with a command in the middle of the chain we're back to square one. That return code is lost, and the script will carry on processing.

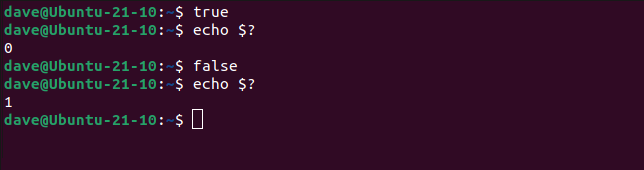

We can see the effects of piping commands with different return codes using the true and false shell built-ins. These two commands do no more than generate a return code of zero or one, respectively.

true

echo $?

false

echo $?

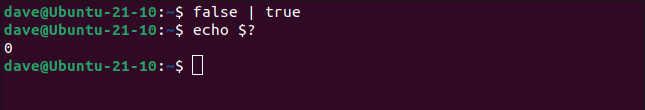

If we pipe false into true, with false representing a failing process, we get true's return code of zero.

false | true

echo $?

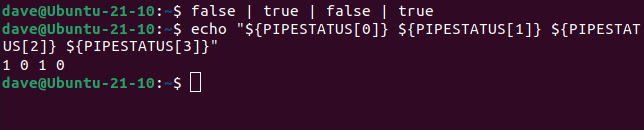

Bash does have an array variable called PIPESTATUS, which captures the return code from each program in the pipe chain.

false | true | false | true

echo "${PIPESTATUS[0]} ${PIPESTATUS[1]} ${PIPESTATUS[2]} ${PIPESTATUS[3]}"

PIPESTATUS only holds the return codes until the next command runs, and trying to determine which return code goes with which program can get very messy very quickly.

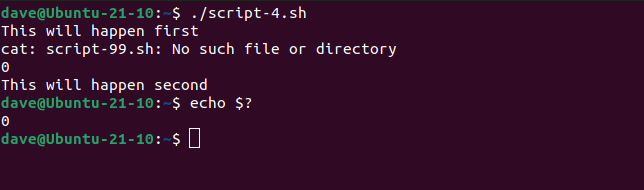
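If you do want to work with PIPESTATUS, one common approach is to copy the whole array on the very next line, before anything else overwrites it. This is a rough sketch, and the names codes and failed are just illustrative.

```shell
#!/bin/bash

false | true | false | true
# Copy the whole array at once; any later command overwrites PIPESTATUS.
codes=("${PIPESTATUS[@]}")

echo "All return codes: ${codes[*]}"

# Record the position of every stage that failed.
failed=""
for i in "${!codes[@]}"; do
    if [ "${codes[$i]}" -ne 0 ]; then
        failed+="$i "
    fi
done
echo "Failed stages: ${failed}"
```

The positions are counted from zero, so this reports stages 0 and 2, the two false commands.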

This is where set -o (options) and pipefail come in. To see why they're needed, look at "script-4.sh", which uses set -e on its own. It tries to pipe the contents of a file that doesn't exist into wc.

#!/bin/bash

set -e

echo This will happen first

cat script-99.sh | wc -l

echo This will happen second

The cat command fails, as we'd expect, but the script doesn't stop.

./script-4.sh

echo $?

The first zero is the output from wc, telling us it didn't read any lines for the missing file. The second zero is the script's return code, which comes from the second echo command.

We'll add the -o pipefail option, save it as "script-5.sh", and make it executable.

#!/bin/bash

set -eo pipefail

echo This will happen first

cat script-99.sh | wc -l

echo This will happen second

Let's run that and check the return code.

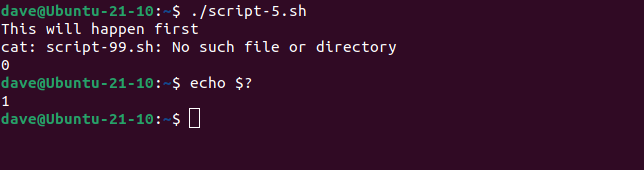

./script-5.sh

echo $?

The script halts and the second echo command isn't executed. The return code sent to the shell is one, correctly indicating a failure.
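With pipefail set, the return code of a pipeline is that of the last (rightmost) command that failed, or zero if every command succeeded. The sketch below compares the two behaviors side by side. It uses subshells so the setting doesn't leak into the rest of the session, and the names status_without and status_with are made up for this illustration.

```shell
#!/bin/bash

# Without pipefail, only the last command in the pipe counts.
status_without=0
( (exit 3) | (exit 5) | true ) || status_without=$?

# With pipefail, the rightmost failing command supplies the return code.
status_with=0
( set -o pipefail; (exit 3) | (exit 5) | true ) || status_with=$?

echo "without pipefail: $status_without"
echo "with pipefail: $status_with"
```

The first pipeline reports zero, and the second reports five, the code from the rightmost failing stage.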

Catching Uninitialized Variables

Uninitialized variables can be difficult to spot in a real-world script. If we try to echo the value of an uninitialized variable, echo simply prints a blank line. It doesn't raise an error message. The rest of the script will continue to execute.

This is "script-6.sh."

#!/bin/bash

set -eo pipefail

echo "$notset"

echo "Another echo command"

We'll run it and observe its behavior.

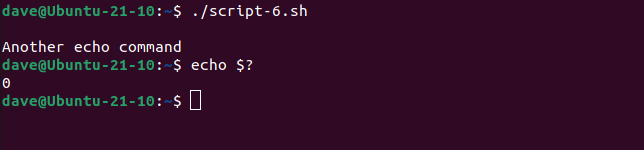

./script-6.sh

echo $?

The script steps over the uninitialized variable, and continues to execute. The return code is zero. Trying to find an error like this in a very long and complicated script can be very difficult.

We can trap this type of error using the set -u (unset) option. We'll add that to our growing collection of set options at the top of the script, save it as "script-7.sh", and make it executable.

#!/bin/bash

set -eou pipefail

echo "$notset"

echo "Another echo command"

Let's run the script:

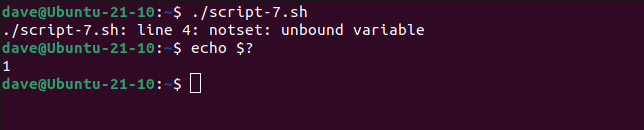

./script-7.sh

echo $?

The uninitialized variable is detected, the script halts, and the return code is set to one.

The -u (unset) option is intelligent enough not to be triggered by situations where you can legitimately interact with an uninitialized variable.

In "script-8.sh", the script checks whether the variable New_Var is initialized or not. You don't want the script to stop here. In a real-world script you'll perform further processing and deal with the situation yourself.

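Note that we've moved the -u option so that it's the second option in the set statement. Bash accepts either ordering, but putting -o last, directly in front of its pipefail argument, makes it obvious which option pipefail belongs to.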

#!/bin/bash

set -euo pipefail

if [ -z "${New_Var:-}" ]; then

echo "New_Var has no value assigned to it."

fi

In "script-9.sh", the uninitialized variable is tested and if it is uninitialized, a default value is provided instead.

#!/bin/bash

set -euo pipefail

default_value=484

Value=${New_Var:-$default_value}

echo "New_Var=$Value"

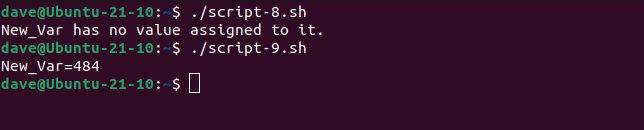

The scripts are allowed to run through to their completion.

./script-8.sh

./script-9.sh
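The :- form used above is one of several related parameter expansions that stay safe under set -u. The sketch below shows three others. It is an illustration rather than one of the numbered scripts: Other_Var is a hypothetical variable, output and status are names chosen for this example, and the script runs in a child shell so the final failure doesn't end your session.

```shell
#!/bin/bash

output=$(bash -c '
set -u
# ${name+x} expands to x only if name is set, so it is safe under set -u.
if [ -z "${New_Var+x}" ]; then
    echo "New_Var is unset"
fi
# := assigns the default to the variable as well as expanding to it.
: "${New_Var:=484}"
echo "New_Var=$New_Var"
# :? stops the script with the given message if the variable is unset or empty.
: "${Other_Var:?Other_Var must be set}"
echo "never printed"
' 2>&1) || status=$?

echo "$output"
echo "return code: ${status:-0}"
```

The :? form is handy for variables the script can't sensibly run without, because it halts with a message of your choosing instead of the generic unbound variable error.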

Sealed With a x

Another handy option to use is the set -x (execute and print) option. When you're writing scripts, this can be a lifesaver. It prints the commands and their parameters as they are executed.

It gives you a quick "rough and ready" form of execution trace. Isolating logic flaws and spotting bugs becomes much, much easier.

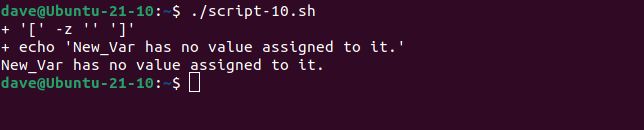

We'll add the set -x option to "script-8.sh", save it as "script-10.sh", and make it executable.

#!/bin/bash

set -euxo pipefail

if [ -z "${New_Var:-}" ]; then

echo "New_Var has no value assigned to it."

fi

Run it to see the trace lines.

./script-10.sh
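Tracing a whole script can produce a lot of output. As a rough sketch, you can switch tracing on with set -x and off again with set +x around just the part you're interested in. Bash writes the trace lines to standard error, so this example redirects that stream in order to capture them. The variable name trace is just illustrative.

```shell
#!/bin/bash

# Trace lines go to standard error, so redirect that stream to capture them.
trace=$(bash -c '
echo "before" > /dev/null
set -x   # start tracing
echo "traced" > /dev/null
set +x   # stop tracing
echo "after" > /dev/null
' 2>&1)

echo "$trace"
```

Only the middle echo and the set +x command itself should appear in the trace, each prefixed with a plus sign.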

Spotting bugs in these trivial example scripts is easy. When you start to write more involved scripts, these options will prove their worth.