In our age of digital media, we often take for granted the humble pixel. But what exactly is a pixel, and how did it come to be such an important part of our lives? We'll explain.

A Pixel Is a Picture Element

If you've used a computer, smartphone, or tablet, you've seen a pixel---or millions of them, in fact. Odds are very high that you're reading this sentence thanks to pixels right now. They form the words and images on your device's screen.

The word "pixel" originated as an abbreviation of the term "picture element," coined by computer researchers in the 1960s. A pixel is the smallest possible component of any electronic or digital image, regardless of resolution. In modern computers, they're usually square---but not always, depending on the aspect ratio of the display device.

Credit for the invention of the pixel usually goes to Russell Kirsch, who invented digital scanning techniques in 1957. In developing his scanner, Kirsch chose to translate areas of light and dark in a photograph into a grid of black and white squares. Technically, Kirsch's pixels could have been any shape, but square dots in a two-dimensional grid represented the cheapest and easiest technical solution at the time. Subsequent computer graphics pioneers built on Kirsch's work, and the convention stuck.

Since then, some graphics pioneers, such as Alvy Ray Smith, have made a point of arguing that a pixel is not actually a square: conceptually and mathematically, it's a point sample of an image, which is more abstract and fluid than a little colored tile. And he's correct. But for most people in most modern applications, a pixel is essentially a colored digital square used to build a larger image, much like a tile in a mosaic or a stitch in needlepoint.

In the decades since the 1960s, pixels have become the linchpins of the digital domain, rendering the visual elements of word processors, websites, video games, high-definition television, social media, VR, and much more. With our current dependency on computerized tech, it's hard to imagine life without them. Pixels are as fundamental to computer graphics as atoms are to matter.

Raster vs. Vector Graphics

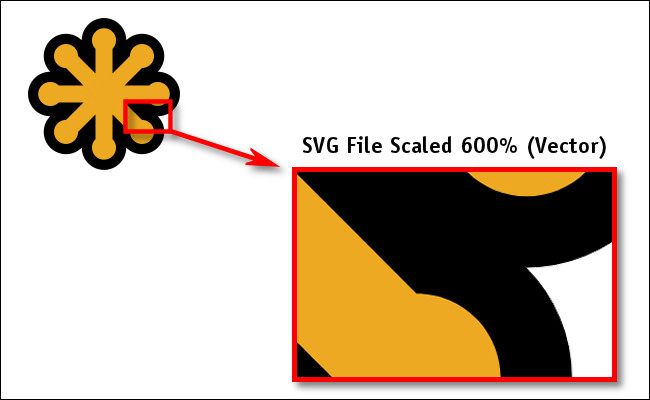

Pixels weren't always the only way to do digital art. Some 1960s computer graphics pioneers like Ivan Sutherland worked largely with calligraphic displays (often called "vector displays" today), which represented computer graphics as mathematical lines on an analog screen instead of discrete dots in a grid like a bitmap. To get him on record, we asked Sutherland about the meaning of the pixel.

"A pixel is a picture element," says Sutherland, who is now 84 and was one of the inventors of digital art and VR. "You can make it mean anything you like. In a raster display driven from a digital memory, it is the content of one memory cell. In a calligraphic display, it usually means the resolution of the D to A converters used."

Today, almost everyone uses bitmapped graphics with pixels on a grid, but vector art like the kind Sutherland pioneered lives on in file formats such as SVG, which store digital artwork as mathematical lines and curves that can scale to any size. To display vector art on a bitmapped screen, the mathematical formulas need to be converted to discrete pixels at some point. The higher the display's resolution and pixel density, the smoother the lines look when you display them as pixels on a grid.
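
To make that conversion step concrete, here's a minimal Python sketch of turning a mathematical line into pixels. It's only an illustration, not how any particular SVG renderer works. The function name `rasterize_line` and the convention that coordinates run from 0.0 to 1.0 are our own assumptions for the example.

```python
def rasterize_line(x0, y0, x1, y1, width, height):
    """Sample a line segment onto a width x height grid of characters.

    Assumption for this sketch: endpoints are given in resolution-independent
    coordinates between 0.0 and 1.0, so the same line fits any grid size.
    """
    grid = [["." for _ in range(width)] for _ in range(height)]
    # Walk along the line in small steps so no pixel on the path is skipped.
    steps = 2 * max(width, height)
    for i in range(steps + 1):
        t = i / steps
        x = x0 + (x1 - x0) * t
        y = y0 + (y1 - y0) * t
        # Convert the continuous position to a discrete column and row,
        # clamping so that a coordinate of exactly 1.0 stays on the grid.
        col = min(int(x * width), width - 1)
        row = min(int(y * height), height - 1)
        grid[row][col] = "#"
    return grid


if __name__ == "__main__":
    # The same diagonal line, converted to pixels at two resolutions.
    for size in (4, 16):
        for row in rasterize_line(0.0, 0.0, 1.0, 1.0, size, size):
            print("".join(row))
        print()
```

The line itself never changes. Only the grid it gets sampled onto does, which is why the 16-by-16 version traces the same path with finer steps than the 4-by-4 one.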

How to Measure Pixels

Pixels are fluid things. They can be any size on a page or on a screen, but it's important to remember that alone, pixels are almost meaningless. Instead, they gain their strength in numbers. Imagine a single square pixel sitting alone, and you'll realize you can't draw much of an image with it.

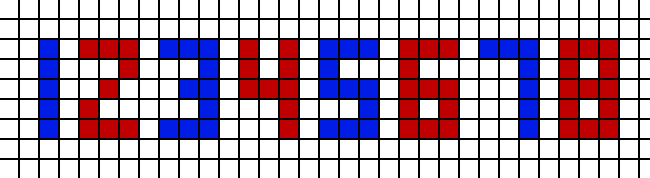

So one of the most important measurements of pixels is how many of them there are in an image, which is called "resolution." The higher the resolution of a grid of pixels, the more details of an image you can depict or "resolve" when a person looks at it.

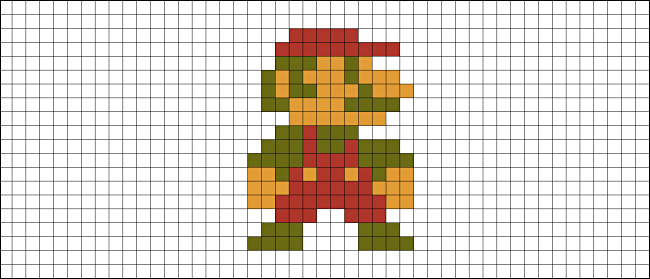

When a digital image's resolution isn't high enough to resolve the details of the scene you're trying to capture, the image can look "pixelated" or "jaggy." This is called aliasing, a signal processing term for the distortion and loss of information that result from sampling at too low a rate (each pixel is a "sample" of an image, in this case). Look at the image of Mario above. At this low resolution (sampling rate), there aren't enough pixels to depict the fabric texture of Mario's clothing or the strands of Mario's hair. If you tried to depict those features, the detail would be lost. That's aliasing.
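
A toy example makes the sampling idea easier to see. The Python sketch below is our own illustration (the `sample_every` helper is hypothetical, not part of any graphics library). It treats a row of fine black-and-white stripes as the "scene" and keeps only every second or third value, the way a low-resolution grid would.

```python
def sample_every(signal, step):
    """Keep one value out of every `step`, simulating a lower sampling rate."""
    return signal[::step]


# A row of fine stripes: 0 is black, 1 is white, alternating every position.
stripes = [0, 1] * 8

if __name__ == "__main__":
    print(sample_every(stripes, 1))  # full detail: 0, 1, 0, 1, ...
    print(sample_every(stripes, 2))  # [0, 0, 0, 0, 0, 0, 0, 0]
    print(sample_every(stripes, 3))  # [0, 1, 0, 1, 0, 1]
```

Sampling every second value makes the stripes vanish into solid black. Sampling every third value produces stripes again, but they are three times wider than the real ones. In both cases, the low-resolution version misrepresents the original detail.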

To counter aliasing, computer scientists invented anti-aliasing techniques, which can soften the effect in some cases by blending the colors of nearby pixels to create the illusion of smooth curves, transitions, and lines.
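
There are many anti-aliasing methods. As one hedged illustration, the Python sketch below uses supersampling: it tests several points inside each pixel and shades the pixel by the fraction that land inside a shape. The shape (a triangle defined by `x + y < 1.0`) and the names `inside_shape` and `render` are assumptions made up for this example.

```python
def inside_shape(x, y):
    """Hypothetical shape for this sketch: a triangle with a diagonal edge."""
    return x + y < 1.0


def render(width, height, samples_per_axis):
    """Return a grid of coverage values between 0.0 (empty) and 1.0 (full).

    With samples_per_axis=1, each pixel is tested once at its center, so
    every value is 0.0 or 1.0 and the diagonal edge comes out as a staircase.
    With more samples, edge pixels get in-between shades.
    """
    image = []
    for row in range(height):
        line = []
        for col in range(width):
            hits = 0
            for sy in range(samples_per_axis):
                for sx in range(samples_per_axis):
                    # Position of this sub-sample in 0.0-1.0 coordinates.
                    x = (col + (sx + 0.5) / samples_per_axis) / width
                    y = (row + (sy + 0.5) / samples_per_axis) / height
                    if inside_shape(x, y):
                        hits += 1
            line.append(hits / samples_per_axis ** 2)
        image.append(line)
    return image


if __name__ == "__main__":
    hard = render(4, 4, 1)    # one sample per pixel: no anti-aliasing
    smooth = render(4, 4, 4)  # 16 samples per pixel: blended edge
    print(hard[0])    # [1.0, 1.0, 1.0, 0.0]
    print(smooth[0])  # [1.0, 1.0, 1.0, 0.375]
```

In the top row, the pixel that the edge passes through goes from fully off (0.0) to a partial shade (0.375). Drawn as a gray level, that in-between value is what makes a diagonal edge look smoother to the eye.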

Storing each pixel takes up memory, and in the early days of video games, when computer memory was expensive, game consoles couldn't store very many pixels at once. That's what makes older games look more pixelated than games do today. The same principle applies to digital images and video on computers, where image resolution has increased steadily over time as the prices of memory and video processing chips have dropped dramatically.
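
Some quick arithmetic shows how fast the memory adds up. The Python sketch below estimates the size of one uncompressed bitmap. The `framebuffer_bytes` helper is hypothetical, and the 256-by-240 example at 8 bits per pixel is an illustrative figure, not the specification of any particular console.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed to store one uncompressed image, rounded up."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8


if __name__ == "__main__":
    # Illustrative low-resolution image: 256 x 240 at 8 bits per pixel.
    print(framebuffer_bytes(256, 240, 8))     # 61440
    # 4K (3840 x 2160) at 24 bits per pixel.
    print(framebuffer_bytes(3840, 2160, 24))  # 24883200
    # 8K (7680 x 4320) at 24 bits per pixel.
    print(framebuffer_bytes(7680, 4320, 24))  # 99532800
```

By this estimate, a single 8K frame in 24-bit color needs roughly 1,600 times as much memory as the small 8-bit image.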

Today, we live in a digitally driven world saturated with pixels. With bitmap resolutions constantly on the rise in monitors and TV sets (8K, anyone?), it's looking like we'll be using pixels for many decades to come. They are essential building blocks of our digital age.