
On September 1st, 2020, NVIDIA revealed its new lineup of gaming GPUs: the RTX 3000 series, based on its Ampere architecture. We'll discuss what's new, the AI-powered software that comes with it, and all the details that make this generation really awesome.

Meet the RTX 3000 Series GPUs

NVIDIA's main announcement was its shiny new GPUs, all built on a custom 8 nm manufacturing process, and all bringing in major speedups in both rasterization and ray-tracing performance.

On the low end of the lineup, there's the RTX 3070, which comes in at $499. That's a bit expensive for the cheapest card NVIDIA unveiled at the initial announcement, but it's an absolute steal once you learn that it beats out the existing RTX 2080 Ti, a top-of-the-line card that regularly retailed for over $1,400. After NVIDIA's announcement, resale prices for the 2080 Ti dropped, with a large number of cards being panic-sold on eBay for under $600.

There are no solid benchmarks out as of the announcement, so it's unclear if the card is really objectively "better" than a 2080 Ti, or if NVIDIA is twisting the marketing a bit. The benchmarks NVIDIA showed were run at 4K and likely had RTX on, which may make the gap look larger than it will be in purely rasterized games, since the Ampere-based 3000 series should perform over twice as well at ray tracing as Turing. But with ray tracing now costing much less performance, and being supported in the latest generation of consoles, having it run as fast as last gen's flagship for almost a third of the price is a major selling point.

It's also unclear if the price will stay that way. Third-party designs regularly add at least $50 to the price tag, and with how high demand will likely be, it won't be surprising to see it selling for $600 come October 2020.

Just above that is the RTX 3080 at $699, which should be twice as fast as the RTX 2080 and come in around 25-30% faster than the 3070.

Then, at the top end, the new flagship is the RTX 3090, which is comically huge. NVIDIA is well aware, and referred to it as a "BFGPU," which the company says stands for "Big Ferocious GPU."

NVIDIA didn't show off any direct performance metrics, but the company showed it running 8K games at 60 FPS, which is seriously impressive. Granted, NVIDIA is almost certainly using DLSS to hit that mark, but 8K gaming is 8K gaming.

Of course, there will eventually be a 3060, and other variations of more budget-oriented cards, but those usually come in later.

To actually cool these cards, NVIDIA needed a revamped cooler design. The 3080 is rated for 320 watts, which is quite high, so NVIDIA has opted for a dual-fan design, but instead of placing both fans on the bottom, NVIDIA has put one fan on the top end, where the back plate usually goes. That fan directs air upward toward the CPU cooler and the top of the case.

Judging by how much performance can be affected by bad airflow in a case, this makes perfect sense. However, the circuit board is very cramped because of this, which will likely affect third-party sale prices.

DLSS: A Software Advantage

Ray tracing isn't the only benefit of these new cards. Really, it's all a bit of a hack: the RTX 2000 and 3000 series aren't that much better at doing actual ray tracing than older generations of cards. Ray tracing a full scene in 3D software like Blender usually takes a few seconds or even minutes per frame, so brute-forcing it in under 10 milliseconds is out of the question.

Of course, there is dedicated hardware for running ray calculations, called RT cores, but largely, NVIDIA opted for a different approach. NVIDIA improved its denoising algorithms, which allow the GPU to render a very cheap single pass that looks terrible and, somehow, through AI magic, turn it into something a gamer wants to look at. Combined with traditional rasterization-based techniques, the result is a pleasant experience enhanced by ray-tracing effects.

However, to do this fast, NVIDIA has added AI-specific processing cores called Tensor cores. These process all the math required to run machine learning models, and do it very quickly. They're a total game-changer for AI in the cloud server space, as AI is used extensively by many companies.

Beyond denoising, the main use of the Tensor cores for gamers is DLSS, or deep learning super sampling. It takes in a low-resolution frame and upscales it to native-resolution quality. This essentially means you can game at 1080p-level framerates while looking at a 4K picture.
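A quick pixel count shows why rendering low and upscaling pays off. The sketch below assumes the game renders internally at 1080p and DLSS reconstructs a 4K output, which is the comparison drawn above; in practice DLSS offers several quality modes with different internal resolutions, and shading cost doesn't scale perfectly with pixel count. The helper names are illustrative.

```python
# Common 16:9 resolutions as (width, height). Illustrative helper names.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Pixels the GPU must shade for one frame at the given resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def shading_ratio(output: str, internal: str) -> float:
    """How many times more pixels the output frame has than the internal render."""
    return pixel_count(output) / pixel_count(internal)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels")
    print(f"4K vs 1080p: {shading_ratio('4K', '1080p'):.1f}x the pixels")
```

A 4K frame has exactly four times the pixels of a 1080p frame (8,294,400 versus 2,073,600), so rendering at 1080p cuts the per-pixel shading work to roughly a quarter, before accounting for the cost of running the upscaling network itself.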

This also helps ray-tracing performance quite a bit. Benchmarks from PCMag show an RTX 2080 Super running Control at ultra quality, with all ray-tracing settings cranked to the max. At 4K, it struggles along at only 19 FPS, but with DLSS on, it gets a much better 54 FPS. DLSS is essentially free performance on NVIDIA's cards, made possible by the Tensor cores on Turing and Ampere. Any game that supports it and is GPU-limited can see serious speedups from software alone.
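Converting those frame rates into time per frame makes the gain concrete. This is plain arithmetic on the two PCMag figures quoted above; the function names are illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds available for each frame at a given frame rate."""
    return 1000.0 / fps


def speedup(before_fps: float, after_fps: float) -> float:
    """How many times faster the second frame rate is than the first."""
    return after_fps / before_fps


if __name__ == "__main__":
    native_fps, dlss_fps = 19, 54  # PCMag's Control results: RTX 2080 Super at 4K
    print(f"native: {frame_time_ms(native_fps):.1f} ms per frame")
    print(f"DLSS:   {frame_time_ms(dlss_fps):.1f} ms per frame")
    print(f"speedup: {speedup(native_fps, dlss_fps):.2f}x")
    print(f"60 FPS budget: {frame_time_ms(60):.1f} ms per frame")
```

That works out to roughly 52.6 ms per frame at native 4K versus about 18.5 ms with DLSS, close to a 2.8x speedup. For comparison, a steady 60 FPS requires each frame to finish in about 16.7 ms.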

DLSS isn't new, and was announced as a feature when the RTX 2000 series launched two years ago. At the time, it was supported by very few games, as it required NVIDIA to train and tune a machine-learning model for each individual game.

However, in that time, NVIDIA has completely rewritten it, calling the new version DLSS 2.0. It's a general-purpose API, which means any developer can implement it, and it's already being picked up by most major releases. Rather than working on one frame in isolation, it also takes in motion vector data from the previous frame, similarly to TAA (temporal anti-aliasing). The result is much sharper than DLSS 1.0, and in some cases actually looks better and sharper than native resolution, so there's not much reason not to turn it on.
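To make the "previous frame plus motion vectors" idea concrete, here is a toy one-dimensional version of the temporal accumulation that TAA-style techniques use. This is not DLSS: DLSS 2.0 replaces a fixed blending rule like the one below with a trained neural network, which isn't modeled here. The blend weight `alpha`, the whole-pixel motion vectors, and the function names are all assumptions for illustration.

```python
from typing import List, Optional


def reproject(history: List[float], motion: List[int]) -> List[Optional[float]]:
    """Look up where each pixel was last frame.

    motion[i] is how far (in whole pixels) the content now at pixel i moved
    since the previous frame, so its old value sits at i - motion[i].
    Pixels whose source is off-screen get None (no usable history).
    """
    previous: List[Optional[float]] = []
    for i, m in enumerate(motion):
        src = i - m
        previous.append(history[src] if 0 <= src < len(history) else None)
    return previous


def accumulate(current: List[float], history: Optional[List[float]],
               motion: List[int], alpha: float = 0.1) -> List[float]:
    """Blend the new frame with reprojected history (TAA-style, not DLSS).

    alpha is the weight given to the new frame. With no history at all,
    as on the first frame after a scene cut, the new frame passes through.
    """
    if history is None:
        return list(current)
    previous = reproject(history, motion)
    return [c if p is None else alpha * c + (1 - alpha) * p
            for c, p in zip(current, previous)]


if __name__ == "__main__":
    # A bright object at pixel 1 moves one pixel to the right.
    frame0 = [0.0, 1.0, 0.0, 0.0]
    frame1 = [0.0, 0.0, 1.0, 0.0]
    history = accumulate(frame0, None, [0, 0, 0, 0])        # scene cut: no history
    history = accumulate(frame1, history, [1, 1, 1, 1], alpha=0.5)
    print(history)
```

With correct motion vectors, the object stays sharp at pixel 2. Passing all-zero motion vectors instead would smear it across pixels 1 and 2 (`[0.0, 0.5, 0.5, 0.0]`), which is the ghosting that motion data helps prevent. The no-history branch also mirrors the scene-cut catch in DLSS 2.0: the first frame after a cut has nothing to accumulate from.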

There is one catch---when switching scenes entirely, like in cutscenes, DLSS 2.0 must render the very first frame at 50% quality while waiting on the motion vector data. This can result in a tiny drop in quality for a few milliseconds. But, 99% of everything you look at will be rendered properly, and most people don't notice it in practice.

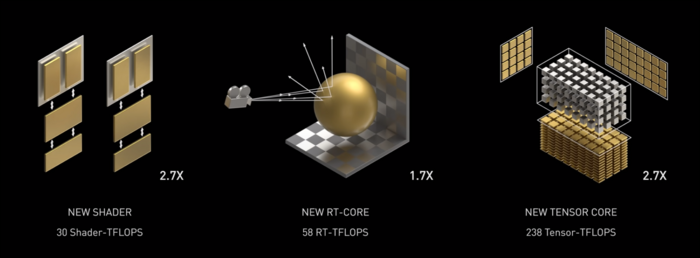

Ampere Architecture: Built For AI

Ampere is fast. Seriously fast, especially at AI calculations. Ampere's RT cores are 1.7x faster than Turing's, and its new Tensor cores are 2.7x faster than Turing's. The combination of the two is a true generational leap in ray-tracing performance.

In May 2020, NVIDIA released the Ampere A100, a data center GPU designed for running AI. With it, the company detailed a lot of what makes Ampere so much faster. For data center and high-performance computing workloads, Ampere is in general around 1.7x faster than Turing. For AI training, it's up to 6 times faster.

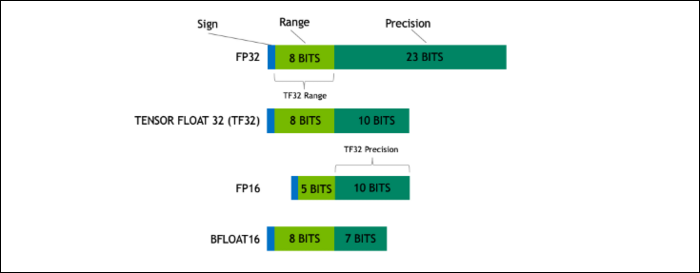

With Ampere, NVIDIA is using a new number format designed to replace the industry-standard "Floating-Point 32," or FP32, in some workloads. Under the hood, every number your computer processes takes up a predefined number of bits in memory, whether that's 8 bits, 16 bits, 32, 64, or even larger. Numbers that are larger are harder to process, so if you can use a smaller size, you'll have less to crunch.

FP32 stores a 32-bit floating-point number: 1 bit for the sign, 8 bits for the exponent (the range, or how big or small the number can be), and 23 bits for the mantissa (the precision). NVIDIA's claim is that these 23 precision bits aren't entirely necessary for many AI workloads, and you can get similar results and much better performance out of just 10 of them. Keeping the sign bit and all 8 range bits but only 10 precision bits reduces the size to just 19 bits (1 + 8 + 10) instead of 32, which makes a big difference across many calculations.

This new format is called TensorFloat-32 (TF32), and the Tensor cores in the A100 are optimized to handle its unusual size. Along with die shrinks and core count increases, this is how NVIDIA gets the massive 6x speedup in AI training.
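The FP32 field layout can be inspected directly with Python's `struct` module, which exposes a float's IEEE 754 single-precision bits. The `to_tf32` helper below is a simplified emulation under one stated assumption: it truncates the 13 dropped mantissa bits, whereas real conversions may round instead. Function names are illustrative.

```python
import struct


def fp32_bits(x: float) -> int:
    """Raw 32-bit IEEE 754 single-precision encoding of x."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def fp32_fields(x: float) -> tuple:
    """Split x's FP32 encoding into (sign, exponent, mantissa) fields."""
    bits = fp32_bits(x)
    sign = bits >> 31                 # 1 bit
    exponent = (bits >> 23) & 0xFF    # 8 bits: the range
    mantissa = bits & 0x7FFFFF        # 23 bits: the precision
    return sign, exponent, mantissa


def to_tf32(x: float) -> float:
    """Emulate TF32 by keeping only the top 10 of FP32's 23 mantissa bits.

    Simplification: the 13 dropped bits are truncated, not rounded.
    """
    bits = fp32_bits(x) & 0xFFFFE000  # clear the low 13 mantissa bits
    return struct.unpack(">f", struct.pack(">I", bits))[0]


TF32_BITS = 1 + 8 + 10  # sign + exponent + mantissa = 19


if __name__ == "__main__":
    print(fp32_fields(1.0))
    print(to_tf32(3.14159265))
    print(TF32_BITS)
```

Under this sketch, `to_tf32(3.14159265)` comes out as `3.140625`: the range survives intact, but only about three decimal digits of precision remain, which is the trade NVIDIA argues is acceptable for many AI workloads.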

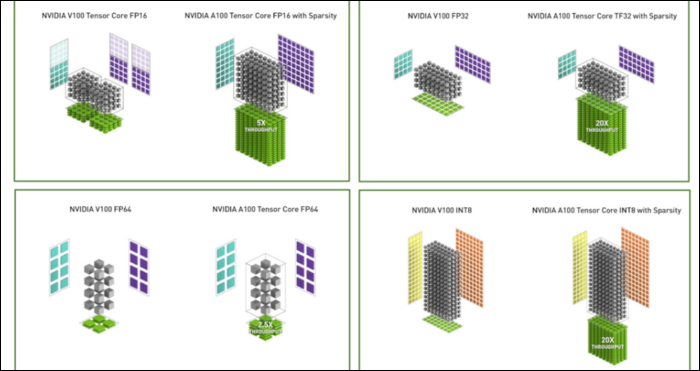

On top of the new number format, Ampere is seeing major performance speedups in specific calculations, like FP32 and FP64. These don't directly translate to more FPS for the layman, but they're part of what makes it nearly three times faster overall at Tensor operations.

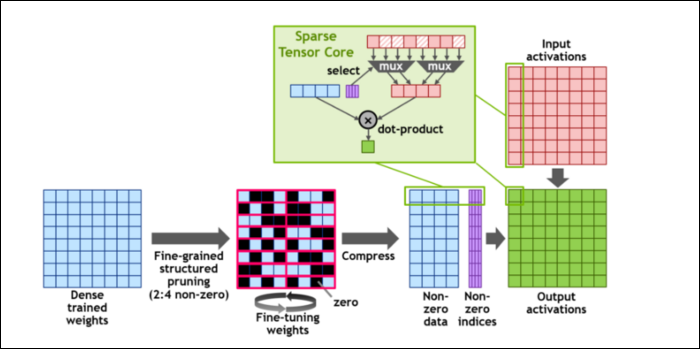

Then, to speed up calculations even more, NVIDIA has introduced fine-grained structured sparsity, which is a very fancy term for a pretty simple concept. Neural networks work with large lists of numbers, called weights, which affect the final output. The more numbers to crunch, the slower it will be.

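However, not all of these numbers are actually useful. Some of them are literally just zero, or close to it, and can basically be thrown out, and skipping them means the hardware has fewer numbers to crunch. Ampere's sparsity is structured: in every group of four weights, two must be zero, so only the two remaining values need to be stored, along with small indices recording where they belong. This essentially compresses the numbers, which takes less effort to do calculations with. The new "Sparse Tensor Core" is built to operate on this compressed data.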
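Here is a sketch of the 2:4 pattern: prune each group of four weights down to its two largest-magnitude values, store only those values plus their positions, and compute a dot product that touches half as many weights. Magnitude-based pruning is an assumption chosen for simplicity, not necessarily how NVIDIA's tools decide which weights to drop, and the function names are illustrative.

```python
from typing import List, Tuple


def prune_2_4(weights: List[float]) -> Tuple[List[float], List[int]]:
    """Keep the 2 largest-magnitude weights in each group of 4.

    Returns the kept values and each one's position (0-3) inside its group,
    which is the compressed form: half the values plus tiny 2-bit indices.
    """
    if len(weights) % 4:
        raise ValueError("number of weights must be a multiple of 4")
    values: List[float] = []
    positions: List[int] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        largest = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        for i in sorted(largest):     # keep original order within the group
            values.append(group[i])
            positions.append(i)
    return values, positions


def sparse_dot(values: List[float], positions: List[int], x: List[float]) -> float:
    """Dot product using only the kept weights: 2 multiplies per group of 4."""
    total = 0.0
    for k, (v, p) in enumerate(zip(values, positions)):
        group_start = (k // 2) * 4    # two kept values per group of four inputs
        total += v * x[group_start + p]
    return total


if __name__ == "__main__":
    w = [4.0, -1.0, 0.0, -3.0, 0.5, 1.0, 2.0, -2.5]
    vals, pos = prune_2_4(w)
    x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    print(vals, pos)
    print(sparse_dot(vals, pos, x))
```

The compressed form needs only two multiplications per group of four instead of four, which is the intuition behind NVIDIA's claim of up to 2x Tensor core throughput with sparsity.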

Despite the changes, NVIDIA says that this shouldn't noticeably affect accuracy of trained models at all.

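For sparse INT8 calculations (INT8 being one of the smallest number formats), a single A100 GPU peaks at roughly 1.25 peta-operations per second, a staggeringly high number. Because INT8 is an integer format, NVIDIA rates this in TOPS (trillions of operations per second) rather than FLOPS. Of course, that's only when crunching one specific kind of number, but it's impressive nonetheless.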