Use Linux pipes to choreograph how command-line utilities collaborate. Simplify complex processes and boost your productivity by harnessing a collection of standalone commands and turning them into a single-minded team. We show you how.

Pipes Are Everywhere

Pipes are one of the most useful command-line features that Linux and Unix-like operating systems have. Pipes are used in countless ways. Look at any Linux command line article---on any web site, not just ours---and you'll see that pipes make an appearance more often than not. I reviewed some of How-To Geek's Linux articles, and pipes are used in all of them, one way or another.

Linux pipes allow you to perform actions that are not supported out-of-the-box by the shell. The Linux design philosophy is to have many small utilities that each perform a dedicated function very well, without needless functionality (the "do one thing and do it well" mantra). Because of that, you can plumb strings of commands together with pipes so that the output of one command becomes the input of another. Each command you pipe in brings its unique talent to the team, and soon you find you've assembled a winning squad.

A Simple Example

Suppose we have a directory full of many different types of file. We want to know how many files of a certain type are in that directory. There are other ways to do this, but the object of this exercise is to introduce pipes, so we're going to do it with pipes.

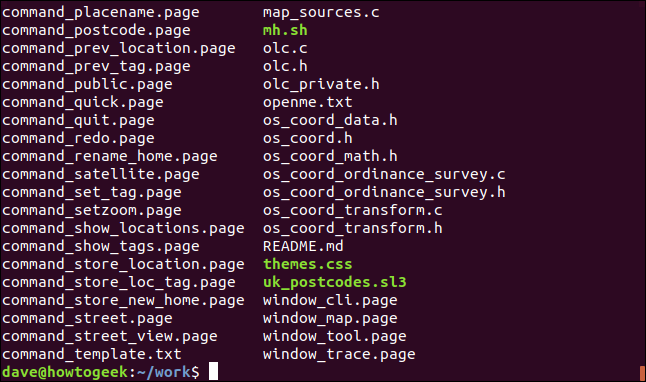

We can get a listing of the files easily using ls:

ls

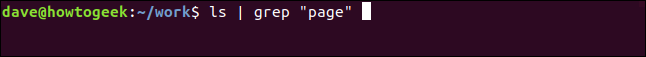

To separate out the file type of interest, we'll use grep. We want to find files that have the word "page" in their filename or file extension.

We will use the shell special character "|" to pipe the output from ls into grep.

ls | grep "page"

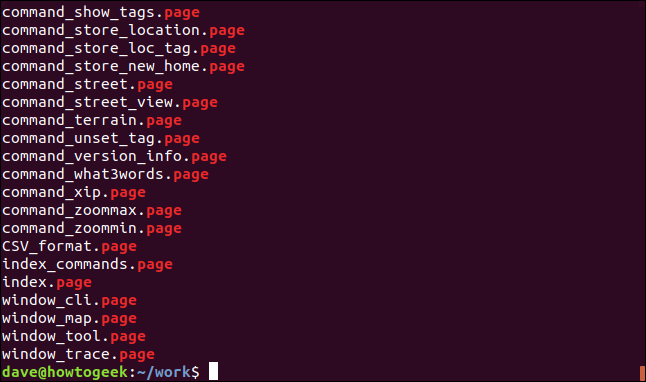

grep prints lines that match its search pattern. In this directory, the only names containing "page" are the ".page" files, so this gives us a listing containing only ".page" files.

Even this trivial example displays the functionality of pipes. The output from ls was not sent to the terminal window. It was sent to grep as data for the grep command to work with. The output we see comes from grep, which is the last command in this chain.
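To try this without touching real files, here is a minimal sketch that builds a scratch directory and runs the same pipe over it. The file names and the mktemp scratch directory are assumptions made up for the illustration. It also shows an anchored pattern, which is one way to match only names that end in ".page".

```shell
# Build a throwaway directory with a few made-up files.
demo_dir=$(mktemp -d)
touch "$demo_dir/notes.page" "$demo_dir/todo.page" \
      "$demo_dir/readme.txt" "$demo_dir/pages.txt"

# ls writes one name per line when its output goes to a pipe;
# grep keeps only the lines that contain "page" anywhere.
loose=$(ls "$demo_dir" | grep "page")

# Anchoring the pattern keeps only names that end in ".page".
strict=$(ls "$demo_dir" | grep '\.page$')

echo "Names containing \"page\":"
echo "$loose"
echo "Names ending in \".page\":"
echo "$strict"

rm -r "$demo_dir"
```

In this made-up directory the loose pattern also picks up pages.txt, which is why the anchored form can be worth the extra typing.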

Extending Our Chain

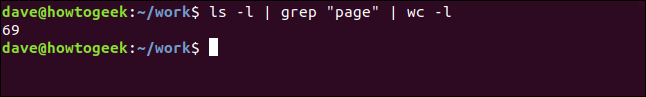

Let's start extending our chain of piped commands. We can count the ".page" files by adding the wc command. We will use the -l (line count) option with wc. Note we've also added the -l (long format) option to ls. We'll be using this shortly.

ls -l | grep "page" | wc -l

grep is no longer the last command in the chain, so we don't see its output. The output from grep is fed into the wc command. The output we see in the terminal window is from wc. wc reports that there are 69 ".page" files in the directory.
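As a small sketch of the counting step, the lines below run the pipeline against a scratch directory holding three made-up ".page" files and one other file. The directory and file names are assumptions for the illustration. The second command uses grep's own -c (count) option, which gives the same number without the wc stage.

```shell
demo_dir=$(mktemp -d)
touch "$demo_dir/a.page" "$demo_dir/b.page" "$demo_dir/c.page" "$demo_dir/d.txt"

# wc -l counts the lines that grep lets through, one per matching file.
count=$(ls -l "$demo_dir" | grep "page" | wc -l)

# grep -c counts matching lines itself, so the wc stage is optional.
count_c=$(ls -l "$demo_dir" | grep -c "page")

echo "wc -l: $count, grep -c: $count_c"

rm -r "$demo_dir"
```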

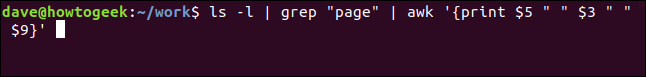

Let's extend things again. We'll take the wc command off the command line and replace it with awk. There are nine columns in the output from ls with the -l (long format) option. We'll use awk to print columns five, three, and nine. These are the size, owner, and name of the file.

ls -l | grep "page" | awk '{print $5 " " $3 " " $9}'

We get a listing of those columns, for each of the matching files.
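Here is a hedged sketch of the awk step on two made-up files of known size. It assumes the usual nine-field layout of ls -l (some locales or time-style settings change the number of date fields) and file names without spaces, since awk splits on whitespace.

```shell
demo_dir=$(mktemp -d)
printf 'hello' > "$demo_dir/small.page"          # 5 bytes
printf 'hello, world' > "$demo_dir/large.page"   # 12 bytes

# awk splits each line on runs of whitespace; $5, $3 and $9 are the
# size, owner and name fields of a typical `ls -l` line.
columns=$(ls -l "$demo_dir" | grep "page" | awk '{print $5 " " $3 " " $9}')
echo "$columns"

# The same fields again, joined with commas instead of spaces.
ls -l "$demo_dir" | grep "page" | awk '{print $5 "," $3 "," $9}'

rm -r "$demo_dir"
```

The strings between the field references are printed literally, so changing " " to "," is all it takes to change the separator.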

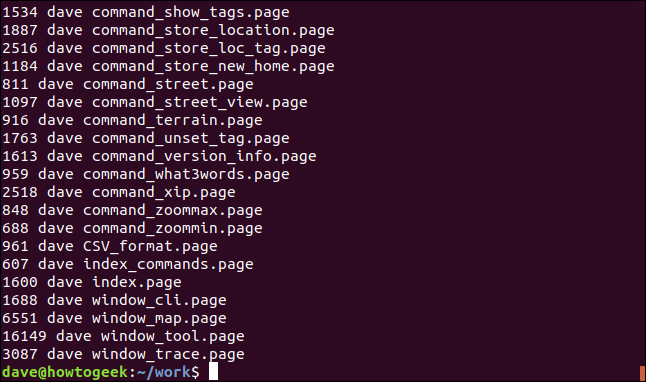

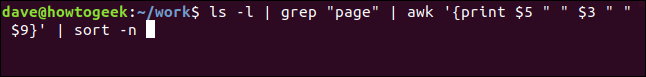

We'll now pass that output through the sort command. We'll use the -n (numeric) option to let sort know the first column should be treated as numbers.

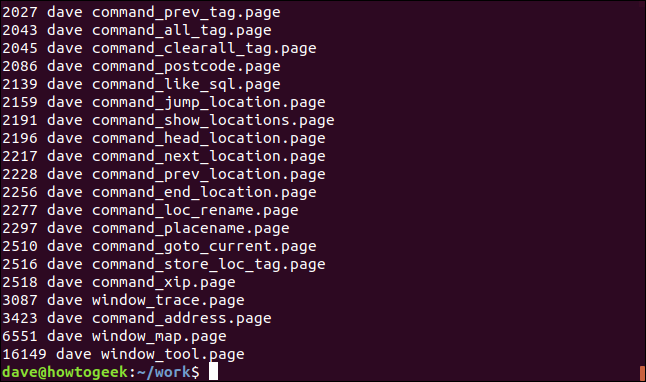

ls -l | grep "page" | awk '{print $5 " " $3 " " $9}' | sort -n

The output is now sorted in file size order, with our customized selection of three columns.
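To see why the -n option matters, this short sketch sorts the same three lines with and without it. The sizes, owner name, and file names are invented for the example.

```shell
sample='9 dave a.page
100 dave c.page
25 dave b.page'

# Without -n, sort compares the lines as text, character by character,
# so the line starting with "100" comes before the one starting with "9".
as_text=$(printf '%s\n' "$sample" | sort)

# With -n, the number at the start of each line is compared by value.
as_numbers=$(printf '%s\n' "$sample" | sort -n)

echo "Plain sort:"
echo "$as_text"
echo "Numeric sort:"
echo "$as_numbers"
```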

Adding Another Command

We'll finish off by adding in the tail command. We'll tell it to list the last five lines of output only.

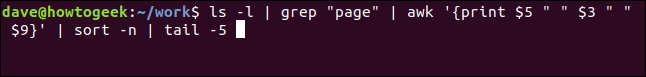

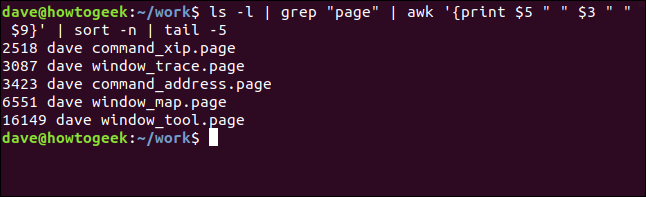

ls -l | grep "page" | awk '{print $5 " " $3 " " $9}' | sort -n | tail -5

This means our command translates to something like "show me the five largest ".page" files in this directory, ordered by size." Of course, there is no command to accomplish that, but by using pipes, we've created our own. We could add this---or any other long command---as an alias or shell function to save all the typing.
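As a sketch of the shell function idea, the pipeline could be wrapped like this. The function name biggest_pages and its optional directory argument are hypothetical choices for the illustration. It uses tail -n 5, which is the more portable spelling of tail -5.

```shell
# Hypothetical helper: print the five largest files whose listing line
# contains "page", smallest of the five first.
# Usage: biggest_pages [directory]   (defaults to the current directory)
biggest_pages() {
    ls -l "${1:-.}" \
        | grep "page" \
        | awk '{print $5 " " $3 " " $9}' \
        | sort -n \
        | tail -n 5
}

# Run it against the current directory.
biggest_pages .
```

Placing the function in a shell startup file such as ~/.bashrc would make it available in every new terminal window.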

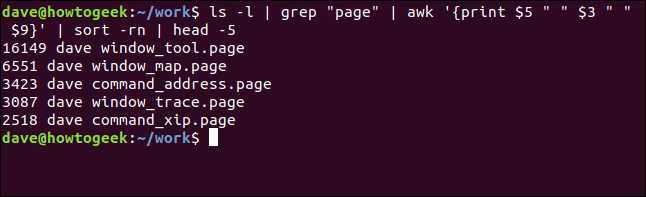

Here is the output:

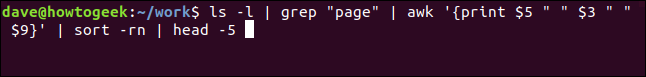

We could reverse the size order by adding the -r (reverse) option to the sort command, and using head instead of tail to pick the lines from the top of the output.

This time the five largest ".page" files are listed from largest to smallest:
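The command line for this variation is not shown here. Going by the description, it would presumably be the earlier pipeline with sort -nr and head swapped in. The sketch below runs that assumed form in a scratch directory of seven made-up files, sized 10 to 70 bytes.

```shell
demo_dir=$(mktemp -d)
content=""
for n in 1 2 3 4 5 6 7; do
    content="${content}xxxxxxxxxx"                 # grows by 10 bytes each pass
    printf '%s' "$content" > "$demo_dir/file$n.page"
done

cd "$demo_dir" || exit 1

# -n sorts numerically, -r reverses the order, and head keeps the top five.
top_five=$(ls -l | grep "page" | awk '{print $5 " " $3 " " $9}' | sort -nr | head -n 5)
echo "$top_five"

cd - > /dev/null
rm -r "$demo_dir"
```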

Some Recent Examples

Here are two interesting examples from recent How-To Geek articles.

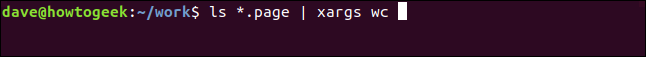

Some commands, such as the xargs command, are designed to have input piped to them. Here's a way we can have wc count the lines, words, and characters in multiple files, by piping ls into xargs, which then feeds the list of filenames to wc as though they had been passed to wc as command line parameters.

ls *.page | xargs wc

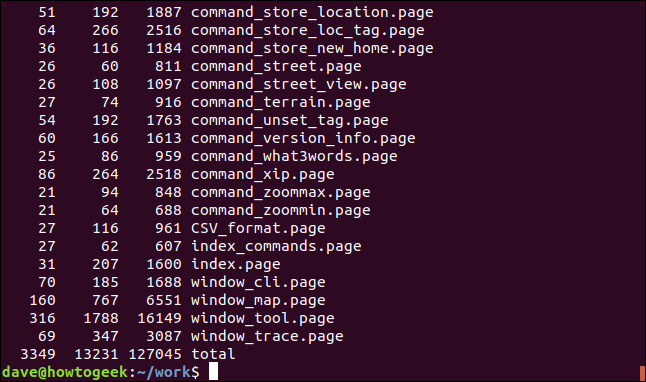

The total numbers of words, characters, and lines are listed at the bottom of the terminal window.
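The following sketch runs the same idea on two small made-up files so the totals are easy to check by hand. The file names and contents are assumptions. The last command is a variant for file names that contain spaces: it relies on the -0 option, which GNU, BSD, and BusyBox xargs provide, so check your man page before depending on it.

```shell
demo_dir=$(mktemp -d)
printf 'one two\nthree\n' > "$demo_dir/first.page"     # 2 lines, 3 words, 14 bytes
printf 'four five six\n'  > "$demo_dir/second.page"    # 1 line,  3 words, 14 bytes
cd "$demo_dir" || exit 1

# xargs turns the names arriving on its standard input into arguments,
# so this runs the equivalent of: wc first.page second.page
totals=$(ls *.page | xargs wc)
echo "$totals"

# Variant for names with spaces: separate the names with NUL bytes
# and tell xargs to split on NUL instead of whitespace.
printf '%s\0' *.page | xargs -0 wc

cd - > /dev/null
rm -r "$demo_dir"
```

wc prints its columns in the order lines, words, bytes, followed by the file name, with a "total" row when it is given more than one file.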

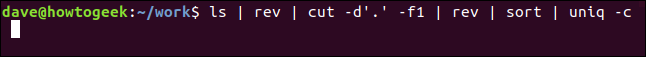

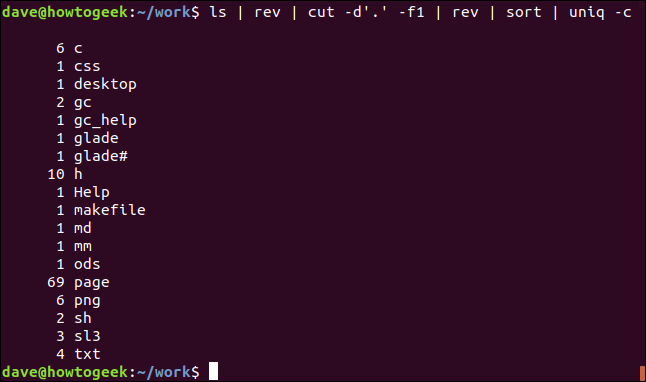

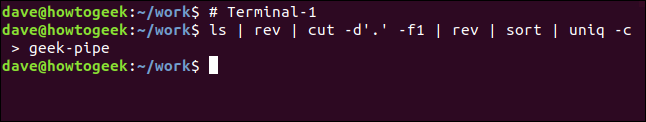

Here's a way to get a sorted list of the unique file extensions in the current directory, with a count of each type.

ls | rev | cut -d'.' -f1 | rev | sort | uniq -c

There's a lot going on here.

- ls: Lists the files in the directory.

- rev: Reverses the text in the filenames.

- cut: Cuts the string at the first occurrence of the specified delimiter ".". Text after this is discarded.

- rev: Reverses the remaining text, which is the filename extension.

- sort: Sorts the list alphabetically.

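- uniq: With the -c (count) option, collapses each run of identical adjacent lines into one and prefixes it with the number of times it occurred. This is why the list is sorted first.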

The output shows the list of file extensions, sorted alphabetically with a count of each unique type.
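The stages above can be sketched on a scratch directory with four made-up files, followed by the same stages applied to a single name so each step is visible. The file names are assumptions. Note that cut passes a line through unchanged when it contains no delimiter, so a file with no "." in its name would be counted under its whole name.

```shell
demo_dir=$(mktemp -d)
touch "$demo_dir/a.txt" "$demo_dir/b.txt" "$demo_dir/c.md" "$demo_dir/archive.tar.gz"

# The full pipeline: one line per extension, each prefixed with its count.
ext_counts=$(ls "$demo_dir" | rev | cut -d'.' -f1 | rev | sort | uniq -c)
echo "$ext_counts"

# One stage at a time for a single name:
step1=$(echo "archive.tar.gz" | rev)                        # zg.rat.evihcra
step2=$(echo "archive.tar.gz" | rev | cut -d'.' -f1)        # zg
step3=$(echo "archive.tar.gz" | rev | cut -d'.' -f1 | rev)  # gz
echo "$step1 -> $step2 -> $step3"

rm -r "$demo_dir"
```

Reversing the name first is what lets cut pick the last dot-separated field with a simple -f1, whatever the number of dots in the name.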

Named Pipes

There's another type of pipe available to us, called named pipes. The pipes in the previous examples are created on-the-fly by the shell when it processes the command line. The pipes are created, used, and then discarded. They are transient and leave no trace of themselves. They exist only for as long as the command using them is running.

Named pipes appear as persistent objects in the filesystem, so you can see them using ls. They're persistent because they will survive a reboot of the computer---although any unread data in them at that time will be discarded.

Named pipes were used a lot at one time to allow different processes to send and receive data, but I haven't seen them used that way for a long time. No doubt there are people out there still using them to great effect, but I've not encountered any recently. But for completeness' sake, or just to satisfy your curiosity, here's how you can use them.

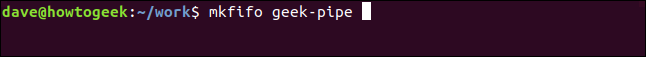

Named pipes are created with the mkfifo command. This command will create a named pipe called "geek-pipe" in the current directory.

mkfifo geek-pipe

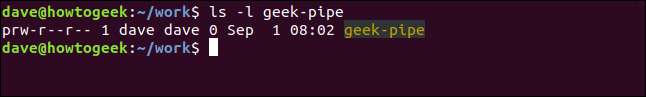

We can see the details of the named pipe if we use the ls command with the -l (long format) option:

ls -l geek-pipe

The first character of the listing is a "p", meaning it is a pipe. If it were a "d", it would mean the file system object is a directory, and a dash "-" would mean it is a regular file.
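A script can make the same check. This minimal sketch creates a named pipe in a scratch directory (the directory is an assumption, so nothing is left behind), reads the type character from the long listing, and also uses the shell's -p test, which is true only for named pipes.

```shell
demo_dir=$(mktemp -d)
mkfifo "$demo_dir/geek-pipe"

# The first character of the long listing is the file type.
type_char=$(ls -l "$demo_dir/geek-pipe" | cut -c1)
echo "type character: $type_char"

# test -p (written here as [ -p ... ]) succeeds only for a named pipe.
if [ -p "$demo_dir/geek-pipe" ]; then
    is_fifo=yes
else
    is_fifo=no
fi
echo "is a named pipe: $is_fifo"

# A named pipe is removed like any other file.
rm "$demo_dir/geek-pipe"
rmdir "$demo_dir"
```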

Using the Named Pipe

Let's use our pipe. The unnamed pipes we used in our previous examples passed the data immediately from the sending command to the receiving command. Data sent through a named pipe will stay in the pipe until it is read. The data is actually held in memory, so the size of the named pipe will not vary in ls listings whether there is data in it or not.

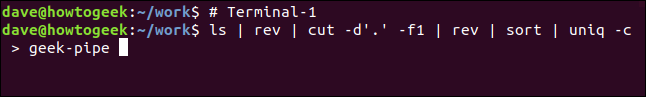

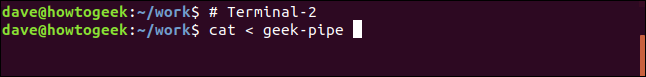

We're going to use two terminal windows for this example. I'll use the label:

# Terminal-1

in one terminal window and

# Terminal-2

in the other, so you can differentiate between them. The hash "#" tells the shell that what follows is a comment, and to ignore it.

Let's take the entirety of our previous example and redirect that into the named pipe. So we're using both unnamed and named pipes in one command:

ls | rev | cut -d'.' -f1 | rev | sort | uniq -c > geek-pipe

Nothing much will appear to happen. You may notice that you don't get returned to the command prompt though, so something is going on.

In the other terminal window, issue this command:

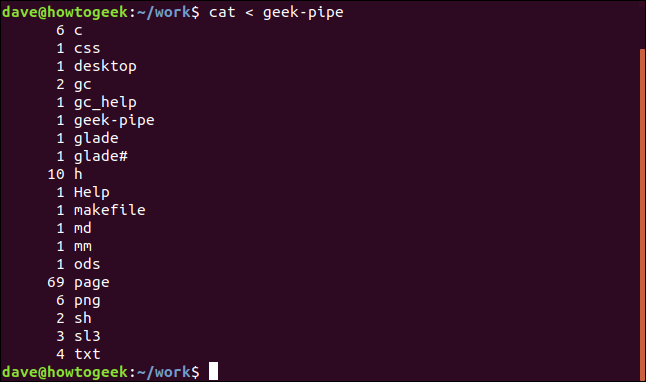

cat < geek-pipe

We're redirecting the contents of the named pipe into cat, so that cat will display that content in the second terminal window. Here's the output:

And you'll see that you have been returned to the command prompt in the first terminal window.

So, what just happened?

- We redirected some output into the named pipe.

- The first terminal window did not return to the command prompt.

- The data remained in the pipe until it was read from the pipe in the second terminal.

- We were returned to the command prompt in the first terminal window.

You may be thinking that you could run the command in the first terminal window as a background task by adding an & to the end of the command. And you'd be right. In that case, we would have been returned to the command prompt immediately.

The point of not using background processing was to highlight that writing to a named pipe is a blocking operation. Redirecting output into a named pipe opens only one end of the pipe. The kernel suspends the process in the first terminal window until another process opens the other end of the pipe for reading. Once the reader has connected, the data passes through and the writing command can finish.
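The two-terminal experiment can also be sketched in a single script by putting the writer in the background. The scratch directory and the message text are assumptions for the illustration.

```shell
demo_dir=$(mktemp -d)
fifo="$demo_dir/geek-pipe"
mkfifo "$fifo"

# Writer: runs in the background, because opening the pipe for writing
# blocks until some process opens it for reading.
printf 'hello through the pipe\n' > "$fifo" &
writer_pid=$!

# Reader: opening the other end lets the writer proceed.
received=$(cat < "$fifo")

# Collect the background writer now that it has finished.
wait "$writer_pid"
echo "$received"

rm "$fifo"
rmdir "$demo_dir"
```

Without the & on the writer line, the script would stop there and never reach the cat command, which is the same stall seen in the first terminal window.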

The Power of Pipes

Nowadays, named pipes are something of a novelty act.

Plain old Linux pipes, on the other hand, are one of the most useful tools you can have in your terminal window toolkit. The Linux command line starts to come alive for you, and you get a whole new power-up when you can orchestrate a collection of commands to produce one cohesive performance.

Parting hint: It is best to write your piped commands by adding one command at a time and getting that portion to work, then piping in the next command.