
Building supercomputers was a massive race in the '90s, as the US, China, and others all competed to have the fastest computer. While the race has died down a bit, these monster computers are still used to solve many of the world's problems.

As Moore's Law (an old observation that the number of transistors on a chip doubles roughly every two years) pushes our computing hardware further, the complexity of the problems being solved grows as well. While supercomputers used to be relatively small, nowadays they can fill entire warehouses with racks of interconnected computers.
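To see how quickly a two-year doubling compounds, here is a minimal Python sketch. It treats the doubling period as an exact constant, which is an idealization; the real-world trend has always been approximate.

```python
def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years` if a quantity doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)

# Twenty years at a two-year doubling period is ten doublings.
print(moores_law_factor(20))  # prints 1024.0
```

Ten doublings works out to roughly a thousandfold increase, which is why hardware from two decades ago looks so modest by today's standards.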

What Makes a Computer "Super"?

The term "supercomputer" implies one gigantic computer many times more powerful than your simple laptop, but that couldn't be further from the truth. Supercomputers are made up of thousands of smaller computers, all hooked up together to work on one task. Each individual CPU core in a supercomputer is probably no faster than the ones in your desktop computer; it's the combination of all of them that makes the machine so powerful. There's a lot of networking and specialized hardware involved at this scale, and it isn't as simple as just plugging each rack into the network, but if you picture it that way, you won't be far off the mark.
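To make the "many small computers, one task" idea concrete, here is a minimal Python sketch of the split, compute, and combine pattern. The workload (summing squares), the function names, and the use of a thread pool are all illustrative assumptions: Python threads won't actually speed up math like this, and a real supercomputer would hand each chunk to a separate node over a message-passing layer such as MPI rather than to a thread.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(start: int, stop: int) -> int:
    """Work done by one hypothetical 'node': sum of i*i over its slice of the range."""
    return sum(i * i for i in range(start, stop))


def split_range(n: int, workers: int) -> list[tuple[int, int]]:
    """Divide range(n) into `workers` contiguous chunks of nearly equal size."""
    chunk, extra = divmod(n, workers)
    bounds = []
    start = 0
    for w in range(workers):
        # The first `extra` chunks get one additional element each.
        stop = start + chunk + (1 if w < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Hand each chunk to a worker, then combine the partial results."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda b: partial_sum_of_squares(*b), chunks)
    return sum(partials)


print(parallel_sum_of_squares(1_000, workers=8))  # prints 332833500
```

The key design point is that no chunk depends on any other, so every worker can run at the same time and only the final sum needs to bring the results together.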

Not every task can be split up this easily, so you won't be using a supercomputer to run your games at a million frames per second. Parallel computing is best at speeding up calculation-heavy workloads that can be divided into many independent pieces.
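A standard way to quantify this limit is Amdahl's law: if only a fraction of a job can run in parallel, the serial remainder caps the overall speedup no matter how many processors you add. The minimal sketch below assumes an idealized machine with no communication overhead, so real speedups would be lower still.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Amdahl's law: overall speedup when only `parallel_fraction`
    of the work can be spread across `processors`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)


# Even with 10,000 processors, the serial part dominates.
for p in (0.5, 0.95, 0.999):
    print(p, round(amdahl_speedup(p, 10_000), 1))
```

A job that is only half parallelizable can never run more than about twice as fast, while a job that is 99.9% parallel can benefit from hundreds of processors. Games tend to fall toward the first case; large scientific simulations toward the second.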

Supercomputer performance is measured in FLOPS (floating-point operations per second), which is essentially a measure of how quickly a machine can do math. At the time of writing, the fastest was IBM's Summit, which can reach over 200 PetaFLOPS at peak. "Peta" is a million times the "Giga" most people are used to.
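The prefixes are just powers of ten, so the "million times" comparison is easy to check. This is a minimal sketch; the dictionary and helper name are illustrative, not part of any standard library.

```python
# SI prefixes used for FLOPS ratings, as powers of ten (hypothetical helper table).
PREFIXES = {
    "giga": 1e9,
    "tera": 1e12,
    "peta": 1e15,
    "exa": 1e18,
    "zetta": 1e21,
}


def to_flops(value: float, prefix: str) -> float:
    """Convert a rating such as (200, 'peta') into plain FLOPS."""
    return value * PREFIXES[prefix.lower()]


print(to_flops(1, "peta") / to_flops(1, "giga"))  # prints 1000000.0
print(to_flops(200, "peta"))                      # prints 2e+17
```

Each step up the table (giga, tera, peta, exa, zetta) is a factor of 1,000, so going from Giga to Peta is two steps, or a factor of one million.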

So What Are They Used For? Mostly Science

Supercomputers are the backbone of computational science. They're used in the medical field to run protein-folding simulations for cancer research, in physics to run simulations for large engineering projects and theoretical computation, and even in the financial field for tracking the stock market to gain an edge on other investors.

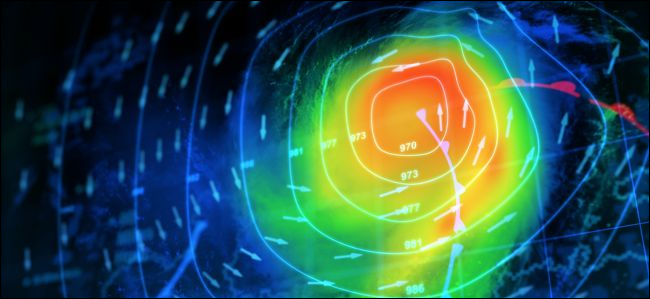

Perhaps the job that most benefits the average person is weather modeling. Accurately predicting whether you'll need a coat and an umbrella next Wednesday is a surprisingly hard task, one that even today's gigantic supercomputers can't do with great accuracy. It's theorized that running full weather models will require a computer that measures its speed in ZettaFLOPS, two tiers up from PetaFLOPS and around 5,000 times faster than IBM's Summit. We likely won't reach that point until around 2030, and the main obstacle isn't the hardware but the cost.
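The "two tiers" and "5,000 times" figures follow directly from the prefixes, taking Summit's rating as roughly 200 PetaFLOPS:

```latex
\text{Peta} = 10^{15}, \qquad \text{Exa} = 10^{18}, \qquad \text{Zetta} = 10^{21}

\frac{1\ \text{ZettaFLOPS}}{200\ \text{PetaFLOPS}}
  = \frac{10^{21}}{200 \times 10^{15}}
  = \frac{10^{21}}{2 \times 10^{17}}
  = 5 \times 10^{3} = 5000
```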

The upfront cost of buying or building all that hardware is high enough, but the real kicker is the power bill. Many supercomputers use millions of dollars' worth of electricity every year just to stay running. So while there's theoretically no limit to how many buildings full of computers you could hook together, we only build supercomputers big enough to solve current problems.
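The power-bill claim is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below uses made-up round numbers (10 megawatts of continuous draw and $0.10 per kilowatt-hour) purely as assumptions; real machines and electricity rates vary widely.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def annual_power_cost(megawatts: float, dollars_per_kwh: float) -> float:
    """Yearly electricity bill for a machine drawing a constant `megawatts` of power."""
    kilowatts = megawatts * 1_000
    kwh_per_year = kilowatts * HOURS_PER_YEAR
    return kwh_per_year * dollars_per_kwh


# Hypothetical inputs: a 10 MW machine at $0.10 per kWh.
print(f"${annual_power_cost(10, 0.10):,.0f}")  # prints $8,760,000
```

Because the machine runs around the clock, every extra megawatt of draw adds 8,760 megawatt-hours to the yearly bill, which is why power, not just hardware, limits how big these systems get.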

So Will I Have a Supercomputer at Home in the Future?

In a sense, you already do. Most desktops nowadays rival the power of older supercomputers, and even the average smartphone outperforms the famous Cray-1. So it's tempting to extrapolate from the past to the future. But that progress came largely from individual CPUs getting much faster over the years, which isn't happening as quickly anymore.

Lately, Moore's Law has been slowing down as we approach the limits of how small transistors can get, so individual CPU cores aren't getting much faster. Chips are still getting denser and more power efficient, though, which pushes desktops toward more cores per chip and lets mobile devices become more powerful overall.

But it's hard to imagine the average user's needs outgrowing the computing power they already have. After all, you don't need a supercomputer to browse the internet, and most people aren't running protein-folding simulations in their basements. Today's high-end consumer hardware far exceeds typical use and is usually reserved for specific work that benefits from it, like 3D rendering and compiling code.

So no, you probably won't have one. The biggest advances will likely come in the mobile space, as phones and tablets approach desktop levels of power, which is a meaningful improvement in its own right.

Image Credits: Shutterstock, Shutterstock