
A recent demo from Epic, the makers of the Unreal game engine, raised eyebrows for its photo-realistic lighting effects. The technique is a big step forward for ray tracing. But what does that mean?

What Ray Tracing Does

Put simply, ray tracing is a method that a graphics engine uses to calculate how virtual light sources affect the items within their environment. The program literally traces the rays of light, using calculations developed by physicists who study the way real light behaves.

Graphics engines like Unreal or Unity use ray tracing to render realistic lighting effects---shadows, reflections, and occlusions---without needing to render them as their own individual objects. Though it's fairly intensive from a processing standpoint, using it to render only what the camera (i.e. the player) needs to see at any given moment means it can be more efficient than other, older methods of simulating realistic light in virtual environments. The specific lighting effects are rendered on a single two-dimensional plane at the viewer's perspective, not constantly all throughout the environment.
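To make the "one ray per pixel, from the camera's point of view" idea concrete, here is a toy sketch in Python. It is not how Unreal, Unity, or any real engine implements ray tracing; the scene (one sphere, one light), the function names, and the ASCII output are all assumptions made up for illustration.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c  # quadratic discriminant (a == 1 for a unit ray)
    if disc < 0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-6 else None

def render(width=24, height=12):
    """Trace one ray per 'pixel' and return the image as rows of text."""
    camera = (0.0, 0.0, 0.0)
    center, radius = (0.0, 0.0, -3.0), 1.0    # hypothetical scene: one sphere
    to_light = normalize((1.0, 1.0, 1.0))     # direction toward the light
    shades = ".:*#@"                          # dark to bright
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel to a point on a 2D image plane at z = -1.
            x = 2 * (i + 0.5) / width - 1
            y = 1 - 2 * (j + 0.5) / height
            direction = normalize((x, y, -1.0))
            t = hit_sphere(camera, direction, center, radius)
            if t is None:
                row += " "  # nothing hit: background
                continue
            point = tuple(c + t * d for c, d in zip(camera, direction))
            normal = normalize(tuple(p - c for p, c in zip(point, center)))
            # Brightness depends on how directly the surface faces the light.
            brightness = max(0.0, sum(n * l for n, l in zip(normal, to_light)))
            row += shades[min(int(brightness * len(shades)), len(shades) - 1)]
        rows.append(row)
    return rows

if __name__ == "__main__":
    print("\n".join(render()))
```

Running it prints a small shaded ball made of text characters. The point is only that the work scales with the number of pixels on the viewer's image plane, not with the size of the whole environment.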

This is all achieved with some stupendously complex math, both for determining how the virtual light behaves and for working out how much of these effects is visible to the viewer or player at any given time. Developers can use less complex versions of the same techniques to accommodate less powerful hardware or to keep fast-paced gameplay smooth.
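A rough back-of-the-envelope calculation shows why developers scale these techniques down. The sketch below assumes a deliberately simplified cost model that is not from any engine: every pixel fires a fixed number of rays, and each bounce adds exactly one more ray. The function name and parameters are hypothetical.

```python
def rays_per_second(width, height, fps, samples_per_pixel=1, bounces=0):
    """Estimate rays traced per second under a simplified cost model.

    Assumption: each sample spawns one primary ray plus one extra ray
    per bounce. Real renderers vary widely from this.
    """
    primary = width * height * samples_per_pixel
    return primary * (1 + bounces) * fps

if __name__ == "__main__":
    # 1080p at 60 frames per second, primary rays only
    print(rays_per_second(1920, 1080, 60))             # 124416000
    # Same, but following each ray through two bounces
    print(rays_per_second(1920, 1080, 60, bounces=2))  # 373248000
    # Halving the resolution in each dimension cuts the work to a quarter
    print(rays_per_second(960, 540, 60, bounces=2))    # 93312000
```

Under this model, lowering the resolution, the samples per pixel, or the number of bounces each reduces the workload proportionally, which is the kind of trade-off a "less complex version" makes.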

Ray tracing is a general approach to graphics rather than any specific technique, though it's been constantly refined and improved. It can be used in pre-rendered graphics, like the special effects seen in Hollywood movies, or in real-time engines, like the graphics you see in the middle of gameplay during a PC game.

What's New In Ray Tracing?

The demo that has gotten ray tracing into the news recently is the one in the video below, a short Star Wars sketch involving some stormtroopers with really bad timing. It was shown off at the Game Developers Conference last week. It was created by Epic Games (makers of the ubiquitous Unreal Engine) in partnership with NVIDIA and Microsoft to show off new ray tracing techniques.

Out of context, it's just a goofy video. But the important bit is that it's being rendered in real time, like a video game, not beforehand like a Pixar movie. The video below shows the presenter zooming the camera through the scene with real-time controls, something that's not possible with pre-rendered graphics.

Theoretically, if your gaming PC is powerful enough, it can generate graphics like that in any game using the new ray tracing lighting effects in the upcoming version of the Unreal Engine.

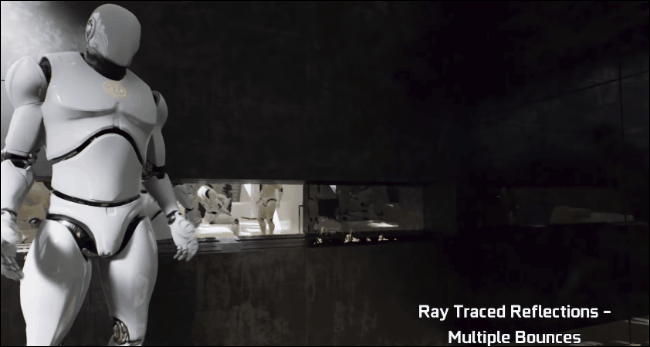

The technology really shines (get it?) because this specific demo includes a lot of reflective and mirrored surfaces with irregular geometry. Check out the way the environment is reflected in the curved panels of Captain Phasma's chrome-plated armor. Just as important, notice how it's reflected more dully and diffusely off of the white armor of the normal stormtroopers. This is a level of realistic lighting that isn't available in games today.
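The difference between the chrome armor and the white armor comes down to how a ray bounces and how much of the bounced light the surface passes along. The sketch below shows the standard mirror-reflection formula, r = d - 2(d·n)n, plus a crude blend between a surface's own color and what it reflects. The `shade` helper and the reflectivity values are made up for illustration; real engines model dull, diffuse reflections with far more sophisticated material models.

```python
def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))

def reflect(direction, normal):
    """Mirror an incoming direction about a unit surface normal."""
    k = 2.0 * dot(direction, normal)
    return tuple(d - k * n for d, n in zip(direction, normal))

def shade(base_color, reflected_color, reflectivity):
    """Blend a surface's own color with the color seen along the bounce.

    reflectivity = 1.0 is a perfect mirror; 0.0 shows no reflection.
    This linear blend is a stand-in, not a physically based model.
    """
    return tuple(
        (1.0 - reflectivity) * b + reflectivity * r
        for b, r in zip(base_color, reflected_color)
    )

if __name__ == "__main__":
    # A ray heading down and to the right hits a floor facing straight up.
    print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)

    white = (1.0, 1.0, 1.0)
    blue_surroundings = (0.0, 0.0, 1.0)
    # Hypothetical values: shiny chrome vs. matte white plastic.
    print(shade(white, blue_surroundings, 0.9))  # mostly the reflection
    print(shade(white, blue_surroundings, 0.2))  # mostly the armor's own color
```

The reflected direction is where the tracer sends the next ray; whatever that ray hits becomes the reflection the viewer sees on the surface.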

Will It Make My Games Look Awesome?

Well, yes---in very specific circumstances. This advanced level of ray tracing will make it easier for video games to render more impressive lighting effects, but it doesn't actually make the polygonal structure of the graphics more detailed. It doesn't boost the resolution of the textures, or enhance the fluidity of the animations. In short, it's going to make lighting look realistic, and that's about it.

The demo above is particularly dramatic because the developers chose characters and environments where almost every surface is either shining or reflecting light. If you use the same technology to render, say, the protagonist of The Witcher series riding his horse through the countryside, you won't see any highly reflective surfaces except his sword and perhaps some water. Crucially, the ray tracing techniques won't do much to enhance the rendering of his skin, the horse's fur, the leather of his clothes, and so on.

The headlines that came from this demonstration claiming it would result in "blockbuster movie graphics" were a bit of hyperbole---that might be true if you're playing a level set in a hall of mirrors, but that's about it.

When Will I See This Stuff In My Games?

The GDC demonstration was an example of a proprietary ray tracing technique called RTX, now being developed by NVIDIA. It's set to debut in the next series of high-end GeForce graphics cards, currently rumored to arrive later this year with 20XX model numbers. Like other proprietary graphics tech, such as NVIDIA's PhysX, it probably won't be available to players using graphics cards from other manufacturers.

That being said, RTX also uses a new feature of the DirectX API built specifically for ray tracing (called DirectX Raytracing, or DXR, by Microsoft). So while the specific demos above are a collaboration between Epic and NVIDIA, there's nothing stopping competing manufacturers like AMD and Intel from creating similar systems with similar results.

To put it simply, you'll see high-end PC games start to use these techniques towards the end of 2018 and the beginning of 2019. Gamers who invest in new graphics cards around that time will see the most benefit, but if you already have a high-end gaming system, you might be able to use some of these effects in DirectX-compatible games on your current hardware.

Due to long development times and static hardware targets, console players won't be seeing these advanced graphics until the next round of game consoles is released in several years.

Image credit: NVIDIA, Epic/YouTube, Guru3D/YouTube