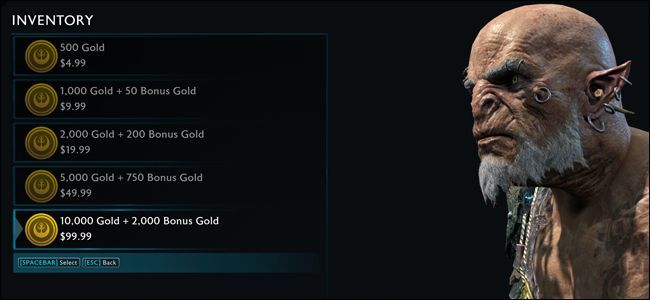

This weekend, while most of the technology and gaming press wasn't working on anything particularly important, Warner Bros. Interactive tried to slip a small news item past their attention. Middle-Earth: Shadow of War, the highly-anticipated sequel to the Tolkien-themed adventure game Middle-Earth: Shadow of Mordor, will include microtransactions. This $60 game---up to $100 USD if you spring for the special pre-order versions---will ask players to pay even more in bite-sized chunks to unlock some of its content faster.

It isn't the first time the small but infinitely extensible payments have jumped from free-to-play fare to the realm of full-priced PC and console releases. But for a variety of reasons, this one has been hit with instant and vocal backlash from gamers who were excited to take up Talion's fight against Sauron once again. For one, we're only two months out from release, and many gamers had already taken the bait of exclusive characters to pre-order the game (pre-order pushes and expensive bundles already being a casus belli for a lot of us) without being told about the microtransaction model the game would use. Another is that Warner Bros. Interactive has had a string of public relations failures with recent games, from the controversy surrounding YouTube reviews for the original Shadow of Mordor to the disastrous PC launch of Arkham Knight to the similar sequel-plus-loot box formula of Injustice 2.

But the bigger problem, for Warner Bros. and for gamers, is that there's a sense of fatigue that comes with every major new release that succumbs to this model. The nightmare scenario of paying extra to reload the bullets in your digital gun, famously proposed by an EA executive just a few years ago, seems to be upon us in many ways. The pay-to-win systems so indicative of some of the worst trends in mobile games are coming to the PC and consoles, in full-priced, major franchise releases, and there's nothing gamers can really do to stop it if we actually want to play those games.

The debate around the latest big release to lean on this model has been fierce. Some gamers are upset enough that they've cancelled their pre-orders and won't buy it at full (or any) price, others are disappointed in the game and the general trend but plan to buy it anyway, and a small but vocal minority are saying that it's not an important factor.

It is important, though. Pairing mobile, freemium-style microtransactions with a game at any price fundamentally alters both the way it's designed and the way it's played. Let's take a look at some of the justifications for microtransactions in full-priced games, and why they don't add up.

"Publishers and Developers Need the Extra Revenue"

No, they don't. This is especially untrue of the biggest and most flagrant users of microtransactions in full-priced games: EA, Activision-Blizzard, Ubisoft, and Warner Bros. Interactive. These companies bring in huge slices of the estimated $100 billion gaming industry, and would get big slices no matter what their revenue models were on specific games.

Since the discussion is about Shadow of War, let's take a look at the numbers for its predecessor. For a AAA title from a major publisher, Shadow of Mordor was actually something of a surprise hit, with combined console and PC sales of approximately 6 million units according to VGChartz. At $60 a copy that would mean a revenue of roughly $360 million, but a lot of those copies were probably bought on sale, so let's cut the estimated revenue in half to $180 million. Assuming that Shadow of Mordor had a production budget on par with similar games like The Witcher 3, it would be somewhere in the $50 million range to produce. With perhaps another $30-40 million in marketing and distribution costs, the game still would have made its money back for Warner Bros. at least twice over.
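For anyone who wants to check the napkin math, here's a minimal sketch of the estimate above. Every input comes straight from the paragraph (the VGChartz sales figure, the deliberate halving of revenue to account for sale prices, and the assumed budget and marketing ranges); the function and variable names are just illustrative, and none of these numbers are official figures from Warner Bros.

```python
# Back-of-the-envelope inputs, all taken from the estimate above.
UNITS_SOLD = 6_000_000          # approximate combined sales, per VGChartz
LIST_PRICE = 60                 # USD per copy at launch
SALE_HAIRCUT = 0.5              # the "cut it in half" allowance for discounted copies
PRODUCTION_BUDGET = 50_000_000  # assumed, on par with games like The Witcher 3
MARKETING_LOW = 30_000_000      # low end of assumed marketing/distribution costs
MARKETING_HIGH = 40_000_000     # high end of assumed marketing/distribution costs


def estimate_revenue(units, price, haircut):
    """Estimated revenue after discounting the full-price total."""
    return units * price * haircut


def return_multiple(revenue, production, marketing):
    """How many times over the game earned back its total costs."""
    return revenue / (production + marketing)


revenue = estimate_revenue(UNITS_SOLD, LIST_PRICE, SALE_HAIRCUT)
best_case = return_multiple(revenue, PRODUCTION_BUDGET, MARKETING_LOW)
worst_case = return_multiple(revenue, PRODUCTION_BUDGET, MARKETING_HIGH)

print(f"Estimated revenue: ${revenue:,.0f}")
print(f"Return on costs: {worst_case:.2f}x to {best_case:.2f}x")
```

Even with the pessimistic marketing figure, the multiple comes out to 2x, which is where "at least twice over" comes from.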

So, to imply that the sequel to Shadow of Mordor "needs" any extra revenue stream is disingenuous. And again, it's hardly at the top of the high-budget gaming heap: the yearly installment of Call of Duty can be depended upon to make somewhere between $500 million and a billion dollars on its own, The Division sold over 7 million units for Ubisoft last year, and the FIFA 2017 soccer game sold over 15 million copies, making money at Hollywood blockbuster levels from initial sales alone. These are the extreme examples, of course, and every developer and publisher is expected to have its ups and downs, but to say that microtransactions are somehow unavoidable at the highest level of game sales is simply not true.

Oh, and The Division, FIFA 2017, and Call of Duty: Infinite Warfare all included microtransactions, despite earning back their budgets multiple times over from conventional sales alone. EA's Ultimate Team modes for its sports games, which reward the biggest spenders on in-game digital currency, earn the company $800 million a year. The takeaway is this: standard video game sales can earn a mind-boggling amount of money at the highest level, enough to make any company profitable. Adding microtransactions on top of that is simply a way to squeeze every possible dollar out of development. That's a really great thing if you're an EA stockholder...but not so much if you're a player.

"You Can Still Earn Everything In The Game Without Paying Extra"

This kind of reasoning often adorns some of the more exploitative free-to-play mobile games, and it's no more appealing when it shows up on a game with a $60 price tag. It's often repeated for games like Overwatch, and it even showed up in the official press release announcing Shadow of War's loot crate system.

Please note: No content in the game is gated by Gold. All content can be acquired naturally through normal gameplay.

That sounds fine, right? The only thing gained by the players who spend extra money is a little time. And indeed, that would be a fairly reasonable way to explain microtransactions and other paid extras...but the logic breaks down pretty quickly once you start to think about it.

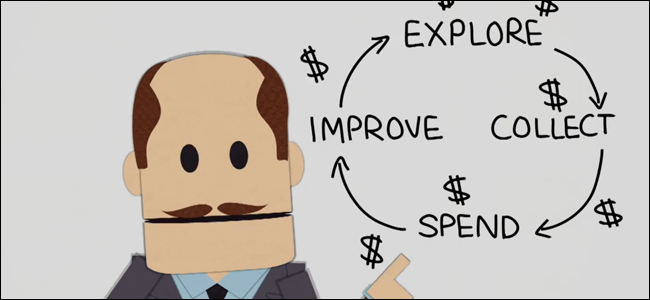

Video games take more than just technical skill when it comes to design, and more than conventional artistic prowess as well. There are practical aspects of game design that have evolved over the last few decades as the medium has grown. Things like skill balancing, a difficulty curve, or even a compulsion or "reward" loop, are relatively intangible concepts that nevertheless help determine a game's quality. And these elements are affected---in fact, can't help but be affected---when microtransactions are built in.

These ideas can incorporate the player's own skill, the danger of enemies, the frequency of rewards, and any number of other elements. But when you tie them in to a system that can be bypassed with real money, the progression is no longer exclusively reliant on time, or skill, or even blind luck. The developer and publisher now have a vested interest in changing the formula. And not so that the player doesn't get overwhelmed or bored by poorly-leveled enemies, and not so the player is motivated to continue with periodic rewards. The question now becomes, "how infrequently can we reward the player---enough that they'll continue to play the game, but not so frequently that they'll have no motivation to spend even more money to play through it faster?"

This is the core mechanic of pay-to-win mobile titles like Clash of Clans. The psychology behind these games is almost devious, giving early players frequent rewards to encourage them, tasking them with investing hours and hours in a free game to become competitive...and then hitting them with a skewed difficulty wall that's all but impossible to overcome without spending real money to hasten their progress and power up. Yes, technically everything in the game can be achieved by simply waiting long enough to earn it...but that wait quickly balloons to weeks or months as you grind away repetitively, unless you're willing to spend real money on upgrades.

Applying this logic to a multiplayer game, like Call of Duty or FIFA, has obvious flaws: whoever pays the most, the fastest, will get an advantage over other players with better gear or digital athletes. That's a disheartening prospect for anyone who's paid full price, especially if they had hoped to compete with online foes on some kind of level playing field.

But even in a single-player game, the mechanic itself is ripe for exploitation. A game with a finely-balanced progression system, doling out rewards that keep the player both challenged and engaged, now has to serve both the core experience of the game itself and the publisher's ambitions to make as much money as possible. For a single-player game like Shadow of War, it might break the balance of the title altogether in an attempt to force the player into free-to-play-style payments for more natural progression...even after a $60 purchase.

"It's All Cosmetic, It Doesn't Affect Gameplay"

The rallying cry of cosmetic-only items is a popular one, especially for online multiplayer games where any perceived gameplay advantage for a paid extra is nearly instantly labelled as a "pay-to-win" mechanic. Restricting all paid upgrades to visual flair for players can be an easy way for developers to ease the concerns of would-be customers.

But even this system has some built-in problems. The same tendency to alter the core rewards of gameplay can affect it, artificially increasing the slow, grinding progress of players who won't pay to skip the tedium. The most prominent current game to use this model seems to have essentially built itself around this wait-or-pay system.

Take Overwatch and its loot boxes: technically, everything in the game can be earned by simply playing multiplayer matches, gaining experience points, and opening randomized boxes. Since the loot is random---as it almost always is in these kinds of systems---that progression is slow, with many duplicates of items one already has offering a roadblock to this theoretical endgame. Duplicates earn coins that can be spent towards specific pieces of cosmetic gear that players want, but the value of the coins is only a fraction of the value of the duplicate item, which pushes that theoretical endgame farther and farther away. So the core progression mechanic in Overwatch, even if it's technically possible to earn everything without paying, is inexorably and intentionally designed to frustrate players just enough to make them spend real money on loot boxes. It doesn't help that the system is stuffed with literally thousands of low-value items like sprays, one- or two-word voice lines, and player icons, making it all the harder to hit a rare skin or emote in the quasi-gambling randomized loot system.
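To see why the duplicate refund matters so much, here's a rough toy model of that kind of system. It is not Overwatch's actual loot table: the pool size, the one-item-per-box rule, the flat item cost, and the refund amounts are all made-up assumptions, and `boxes_to_complete` is a hypothetical helper written for this illustration. The only thing it borrows from the description above is the shape of the mechanic: random drops, duplicates that pay out coins, and coins that buy specific missing items.

```python
import random


def boxes_to_complete(pool_size, item_cost, duplicate_refund, seed=0):
    """Count the boxes opened before every item in the pool is owned.

    Toy assumptions: each box holds one item drawn uniformly at random,
    a duplicate pays `duplicate_refund` coins, and the player buys a
    missing item as soon as the balance reaches `item_cost`.
    """
    rng = random.Random(seed)  # fixed seed so each run sees the same drops
    owned = set()
    coins = 0
    boxes = 0
    while len(owned) < pool_size:
        boxes += 1
        item = rng.randrange(pool_size)
        if item in owned:
            coins += duplicate_refund  # duplicate: pay out coins instead
        else:
            owned.add(item)
        # Spend coins on missing items whenever one is affordable.
        while coins >= item_cost and len(owned) < pool_size:
            missing = next(i for i in range(pool_size) if i not in owned)
            owned.add(missing)
            coins -= item_cost
    return boxes


POOL = 100  # hypothetical number of cosmetic items
no_refund = boxes_to_complete(POOL, item_cost=5, duplicate_refund=0)
fractional = boxes_to_complete(POOL, item_cost=5, duplicate_refund=1)
full_value = boxes_to_complete(POOL, item_cost=5, duplicate_refund=5)

print(f"Duplicates worth nothing:       {no_refund} boxes")
print(f"Duplicates worth 1/5 of an item: {fractional} boxes")
print(f"Duplicates worth a full item:    {full_value} boxes")
```

In this model, a refund equal to the full item price means every box moves you one item closer, so the collection takes exactly as many boxes as there are items. Shrinking the refund to a fraction can only stretch that out, and the publisher controls the fraction.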

Frequent in-game events, where even rarer and more expensive items are only available for a short time, all but force completionists to spend between three and a hundred dollars on randomized gear...in a game that they've already paid $40-60 to play. Because loot boxes are awarded at each player level, they are intrinsically tied to the game's progress---indeed, they are the progression system for everything except the competitive ranked mode---and that creates a meta-game that's all about spending time playing the most "profitable" game modes. Or, of course, paying to unlock purely cosmetic items even faster...but still being punished with the random loot-coin drop combo.

There's an even more flagrant abuser of this kind of system: Dead or Alive. The most risque of mainstream fighting series started way back on the PlayStation (the first one), tantalizing players with more than a dozen revealing costumes for its female polygonal fighters at a time when two or three would have been luxurious. The roster got longer and the skirts got shorter as the series progressed, with the character and costume unlocks basically functioning as the progression system in the otherwise balanced 3D fighter. But the fifth entry in the series, now having the full benefit of online play and years of DLC culture to draw upon, walled off a huge portion of these costumes behind in-game microtransactions (or, arguably, tiny portions of DLC). Hundreds of in-game costumes for the digital pin-ups are broken up into individual purchases or bundled packs, with the grand total of extras costing more than ten times the amount of the original game, a sequel to games that never required any extra money at all for the "full" experience.

Dead or Alive 5 and similar titles at least have the arguable virtue of giving their fans what they want for a set price, without the randomized, semi-gambling frustration of loot crates. But the point remains that once a developer decides to wall off portions of its game behind a paid system, even if the paid system doesn't technically affect gameplay, things soon get out of hand. There are examples of developers that respect their players and offer a more tempered balance between non-competitive paid extras and core gameplay, like Rocket League and Don't Starve, but they're becoming more and more infrequent, especially among the big names of modern gaming.

"If You Don't Like It, Don't Buy It"

The free market argument has been used by more than one developer to try to excuse its profiteering business model, and a fair number of gamers have echoed it in their defense. And yes, at the end of the day, no one is forcing you to buy a game with a monetization system that you don't agree with. But that's small comfort to millions of gamers who enjoyed the deep orc army system of Shadow of Mordor, and are now faced with the choice to either play a game they've spent three years waiting for or do without to make an ideological stand. A stand which, if current AAA monetization trends continue, won't actually accomplish much of anything.

The "don't like it, don't buy it" argument was used when games started offering ridiculous pre-order bonuses as an incentive to help publishers boast in quarterly reviews. It was used when games started padding out their content, locking bits and pieces of gameplay that used to be included at no extra charge behind deluxe editions that cost $100 instead of $60. Now it's being used to defend billion-dollar publishers as they bring schemes from freemium mobile titles into the world of full-priced games.

Even the games that launch with no in-app purchases often add them further down the line, bolting on the same problems to a previously unaffected game: see The Division and Payday 2 (whose developers promised the games would be free of microtransactions), and even older titles like the remastered Call of Duty 4 or the seven-year-old Two Worlds II. Often games that underperform will be remade into free-to-play titles, forcing the few players who are still active to abandon their original purchase or adapt to a system they didn't sign up for when they bought the game. This is especially true of multiplayer shooters (see Battleborn and Evolve) and online RPGs.

Video games didn't always have hidden payments for parts of the game that should ostensibly have been free. We used to have cheat codes to skip the grind, or secret areas or unknown techniques for special items, or just possibly, developers with enough self-awareness not to bite the hands that fed them. Granted, this sort of "golden age" thinking isn't altogether helpful: the plain truth of the matter is that if today's always-connected Internet with its instant payment systems had been available in 1985, someone would have tried to charge for a dysentery cure in Oregon Trail. (That might be less of a joke than you think, by the way.)

If you don't like it, you can indeed not buy it. But before long you'll be severely self-limiting the games you do allow yourself to buy...and even the ones that you enjoy might switch over when the sequel comes out.

So What Should We Do?

Unfortunately, there appears to be very little that gamers or even the loudspeaker of the gaming press can actually accomplish to battle this trend. Each time it happens, forums and comment sections fill up with irate gamers who refuse to support an increasingly manipulative system. And more often than not, those games go on to sell millions of copies and make quite a bit of money off their microtransaction systems, too.

You can limit your purchases to games that have conventional, value-adding DLC (sprawling RPGs from Bethesda and BioWare, most recent Nintendo games, quite a lot of independent titles). Or simply stick to cheaper games and free-to-play fare, which has all the problems of a microtransaction economy but doesn't have the gall to ask you to pay up front. But eventually you're probably going to run into a full-priced microtransaction game that you really want to play, forcing you to either pay up or miss out.

It's just faintly possible that governments could get involved. That's an avenue that's fraught with perils of its own, but in a few isolated cases it's at least provided consumers with some extra tools. China now requires developers to publish the odds of winning specific items in randomized, gambling-like systems such as Overwatch loot crates, and the European Commission has taken long, hard looks at the marketing for "free" games that try to make you pay at every turn. But it seems more or less impossible that any kind of laws will do anything except shed a little more light on some of the more deplorable practices of the modern gaming industry.

I'm sorry to end such an exhaustive evaluation of current trends on such a downer note. But if there's anything that the last ten years of gaming have taught us, it's that the biggest corporate players don't have anything approaching shame when it comes to inventing new ways to wring money out of their customers with the least amount of effort possible.

As the saying goes, you can't un-ring the bell---especially when it's the "DING" of a cash register. At the very least, be aware of the above methods of microtransactions, and why their justifications don't ring true. Being informed is the best way to keep from being ripped off...or at least being ripped off without knowing why.

Image credit: DualShockers, VG24/7