Apple is staking its reputation on ensuring that the data it collects from you remains private. How? By using something called "Differential Privacy."

What Is Differential Privacy?

Apple explains it as such:

Apple is using Differential Privacy technology to help discover the usage patterns of a large number of users without compromising individual privacy. To obscure an individual’s identity, Differential Privacy adds mathematical noise to a small sample of the individual’s usage pattern. As more people share the same pattern, general patterns begin to emerge, which can inform and enhance the user experience.

The philosophy behind Differential Privacy is this: when a user's device, whether it's an iPhone, iPad, or Mac, contributes data to a larger pool of aggregate data (a big picture formed from many smaller pictures), that user should not be identifiable as the source, let alone be linked to the specific data they contributed.

Apple isn't the only company doing this, either---both Google and Microsoft were using it even earlier. But Apple popularized it by talking about it in detail at its 2016 WWDC keynote.

So how is this different from other anonymized data, you ask? Well, anonymized data can still be used to deduce personal information if you know enough about a person.

Let's say a hacker can access an anonymized database that reveals a company's payroll. Let's say they also know that Employee X is relocating to another area. The hacker could then simply query the database for the payroll total before and after Employee X moves and easily deduce his income from the difference.

To protect Employee X's sensitive information, Differential Privacy alters the data with mathematical "noise" and other techniques, so that if you query the database, you will only receive an approximation of how much Employee X (or anyone else) was paid.

His privacy is therefore preserved by the "difference" between the data supplied and the noise added to it: the result is vague enough that it is virtually impossible to know whether the data you're looking at belongs to a particular individual.
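
To make the payroll example concrete, here is a minimal Python sketch of the noise idea. It is an illustration under stated assumptions, not Apple's implementation: the salary figures, the `epsilon` privacy parameter, the `max_salary` cap, and the function names are all made up for this example, and Laplace noise is simply one common choice of noise.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponential draws follows a Laplace distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def noisy_total(salaries, epsilon, max_salary, rng):
    # One person can change the total by at most max_salary (assumed cap),
    # so the noise scale is tied to that cap and to the privacy parameter.
    scale = max_salary / epsilon
    return sum(salaries) + laplace_noise(scale, rng)

rng = random.Random(42)
before = [52_000, 61_000, 75_000, 48_000]  # hypothetical payroll including Employee X
after = [52_000, 61_000, 48_000]           # the same payroll once Employee X has moved

# Querying exact totals before and after reveals Employee X's salary.
exact_difference = sum(before) - sum(after)

# Querying noisy totals only gives a rough, unreliable difference.
noisy_difference = (noisy_total(before, 0.5, 100_000, rng)
                    - noisy_total(after, 0.5, 100_000, rng))
```

With exact totals, subtracting the two results hands the hacker Employee X's salary. With noisy totals, the same subtraction is dominated by noise on the order of `max_salary / epsilon`, so it says very little about any one person's pay.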

How Does Apple's Differential Privacy Work?

Differential Privacy is a relatively new concept, but the idea is that it can give a company keen insights based on data from its users, without the company knowing exactly what that data says or from whom it originates.

Apple, for example, relies on three components to make its take on Differential Privacy work on your Mac or iOS device: hashing, subsampling, and noise injection.

Hashing takes a string of text and turns it into a fixed-length value, scrambling it into a seemingly random string of characters called a "hash" that cannot practically be reversed. This obscures your data so the device isn't storing any of it in its original form.
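
As a rough illustration of hashing, the Python sketch below uses SHA-256 from the standard library. This is an assumption made for the example: the article does not say which hash function Apple uses, and the `fingerprint` and `bucket` helpers and the 1,024-bucket size are hypothetical.

```python
import hashlib

def fingerprint(text):
    # SHA-256 always returns 32 bytes (64 hex characters), whatever the input length.
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def bucket(text, num_buckets=1024):
    # Reduce the digest to a small bucket index; many different inputs share each bucket.
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_buckets

short_hash = fingerprint("dog")
long_hash = fingerprint("I'm going to walk the dog " * 50)
dog_bucket = bucket("dog")
```

Both hashes come out the same length even though one input is a single word and the other is a long message, and neither can practically be turned back into the original text.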

Subsampling means that instead of collecting every word a person types, Apple will only use a smaller sample of them. For example, let's say you have a long text conversation with a friend liberally using emoji. Instead of collecting that entire conversation, subsampling might instead use only the parts Apple is interested in, such as the emoji.
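
Subsampling can be sketched in a few lines of Python. The set of tracked emoji, the `subsample` helper, and the choice to keep a single item per message are all assumptions made for illustration, not details of Apple's system.

```python
import random

TRACKED_EMOJI = {"🐶", "😂", "🍑"}  # hypothetical set of items of interest

def subsample(message, rng, keep=1):
    # Keep only the tokens of interest, then report at most `keep` of them at random.
    candidates = [token for token in message.split() if token in TRACKED_EMOJI]
    return rng.sample(candidates, min(keep, len(candidates)))

rng = random.Random(7)
message = "walking the 🐶 now 😂 then lunch 🍑 later 😂"
report = subsample(message, rng)
```

Here `report` holds just one emoji from the conversation, and the rest of the message never leaves the function.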

Finally, your device injects noise, adding random data into the original dataset in order to make it more vague. This means that Apple gets a result that has been masked ever so slightly and therefore isn't quite exact.
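
One classic way to inject noise on a device is to randomly flip some of the bits in a report, a technique known as randomized response. The Python sketch below assumes that approach for illustration only; the eight-slot report, the 25 percent flip probability, and the `randomize_bits` name are hypothetical.

```python
import random

def randomize_bits(bits, flip_probability, rng):
    # Flip each bit independently with the given probability.
    return [bit ^ 1 if rng.random() < flip_probability else bit for bit in bits]

rng = random.Random(1)
true_bits = [0, 0, 1, 0, 0, 0, 0, 0]  # the user really picked item number 2
noisy_bits = randomize_bits(true_bits, 0.25, rng)
```

Anyone who sees `noisy_bits` cannot be sure which entries are genuine, because any given bit may have been flipped before it was sent.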

All this happens on your device, so your data has already been shortened, mixed up, sampled, and blurred before it is even sent to the cloud for Apple to analyze.

Where Is Apple's Differential Privacy Used?

There are a variety of cases where Apple might want to collect data to improve its apps and services. Right now though, Apple is only using Differential Privacy in four specific areas.

- When enough people replace a word with a particular emoji, it will become a suggestion for everyone.

- When new words are added to enough local dictionaries to be considered commonplace, Apple will add them to everyone else's dictionary too.

- When you use a search term in Spotlight, it can suggest apps and then either open the result in the suggested app or let you install that app from the App Store. For example, say you search for "Star Trek", and Spotlight suggests the IMDb app. The more people open or install the IMDb app, the more often it will appear in everyone's search results.

- It will provide more accurate results for Lookup Hints in Notes. For example, say you have a note with the word "apple" in it. You do a lookup search and it gives you results not only for the dictionary definition, but also Apple's website, locations of Apple Stores, and so forth. Presumably, the more people tap on certain results, the higher and more often they'll appear in the Lookup for everyone else.

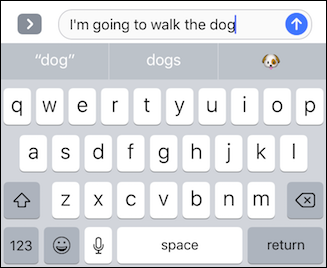

Let's use emoji as an example. In iOS 10, Apple introduced a new emoji replacement feature in iMessage. Type the word "love," and you can replace it with a heart emoji. Type the word "dog," and, you guessed it, you can replace it with a dog emoji.

Similarly, your iPhone can predict which emoji you want: if you type the message "I'm going to walk the dog," it will helpfully suggest the dog emoji.

So, Apple takes all those little pieces of iMessage data it collects, examines them as a whole, and can deduce patterns from what people are typing and in what context. This means your iPhone can give you smarter choices because it benefits from all those text conversations others are creating and thinks, "this is probably the emoji you want."

It Takes a Village (of Emoji)

The downside to Differential Privacy is that it doesn't provide accurate results in small samples. Its power lies in making specific data vague so it cannot be attributed to any one user. In order for it to work and work well, many users must participate.

It's kind of like looking at a bitmapped photo up extremely close. You're not going to be able to see what it is if you look at only a few pixels, but as you step back and look at the whole thing, the picture becomes clearer and more defined, even if it isn't super high resolution.

Thus, in order to improve emoji replacement and prediction (among other things), Apple needs to collect iPhone and Mac data from around the world to give it an increasingly clearer picture of what people are doing and thus improve its apps and services. It turns to all this randomized, noisy, crowdsourced data, and mines it for patterns---such as how many users are using the peach emoji in place of "butt."

So, the power of Differential Privacy relies on Apple being able to examine large amounts of aggregate data, all the while ensuring that it is none the wiser about who is sending it that data.
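
The need for many users can be sketched with a small Python simulation. It assumes each device reports a single yes/no answer (say, whether the peach emoji replaced a word) and flips that answer with a known probability before sending it. The 30 percent true rate, the 25 percent flip probability, and the `estimate_rate` name are hypothetical values chosen for illustration.

```python
import random

def estimate_rate(true_rate, num_users, flip_probability, rng):
    reported_yes = 0
    for _ in range(num_users):
        truth = rng.random() < true_rate            # this user's real answer
        flipped = rng.random() < flip_probability   # noise applied on the device
        reported_yes += truth != flipped            # only the noisy answer is collected
    observed = reported_yes / num_users
    # observed = rate * (1 - p) + (1 - rate) * p, so solve for rate.
    return (observed - flip_probability) / (1 - 2 * flip_probability)

rng = random.Random(2016)
small_estimate = estimate_rate(0.30, 50, 0.25, rng)
large_estimate = estimate_rate(0.30, 200_000, 0.25, rng)
```

With only 50 users the estimate can land well away from 30 percent, while with 200,000 users it settles very close to it, even though no single report can be trusted on its own.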

How to Opt Out of Differential Privacy in iOS and macOS

If you're still not convinced that Differential Privacy is right for you, though, you're in luck. You can opt out right from your device's settings.

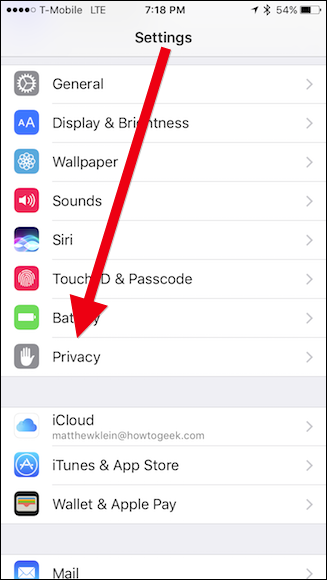

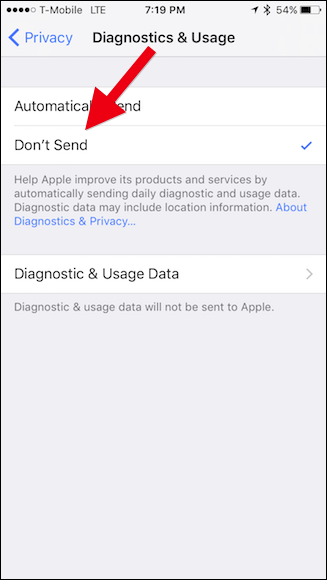

On your iOS device, open "Settings" and then tap "Privacy".

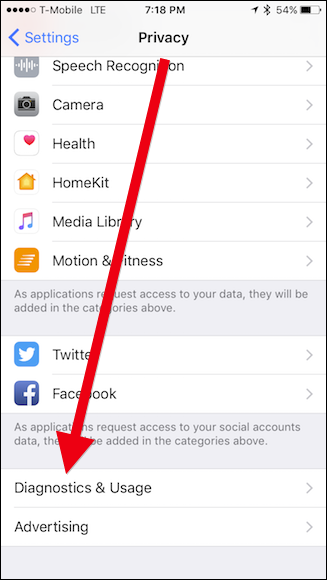

On the Privacy screen, tap "Diagnostics & Usage".

Finally, on the Diagnostics & Usage screen, tap "Don't Send".

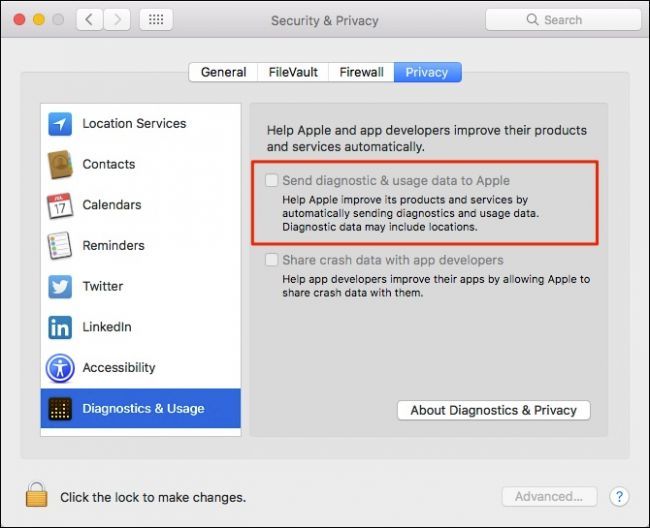

On macOS, open System Preferences and click "Security & Privacy".

In the Security & Privacy preferences, click the "Privacy" tab and then make sure "Send diagnostic & usage data to Apple" is unchecked. Note that you will need to click the lock icon in the lower-left corner and enter your system password before you can make this change.

Obviously, there's a lot more to Differential Privacy, both in theory and application, than this simplified explanation. The meat and potatoes of it rely heavily on some serious mathematics and as such, it can get pretty weighty and complicated.

Hopefully, however, this gives you an idea of how it works, and you feel more confident that companies can collect certain data without you having to fear being identified.