
PowerShell 3 has a lot of new features, including some powerful web-related ones that dramatically simplify automating the web. Today we are going to show you how to extract every single link from a webpage and, optionally, download the linked resource.

Scraping The Web With PowerShell

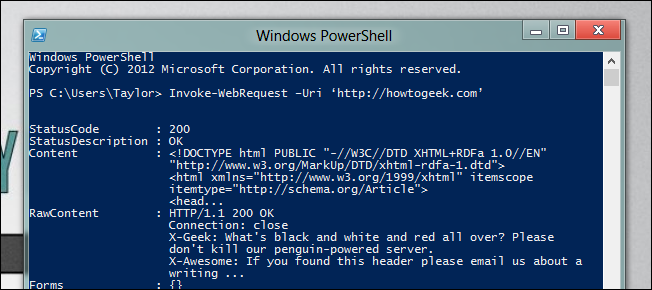

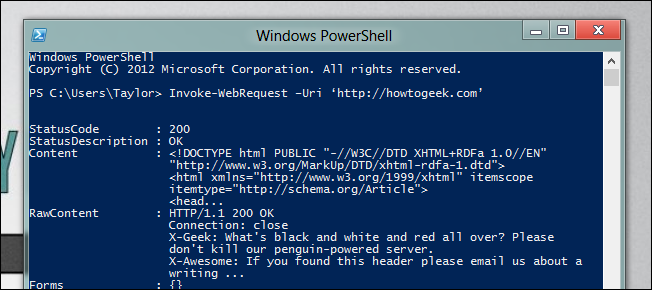

There are two new cmdlets that make automating the web easier: Invoke-WebRequest, which makes human-readable content such as HTML easier to parse, and Invoke-RestMethod, which makes machine-readable content such as JSON or XML easier to work with. Links are part of a page's HTML, so they fall on the human-readable side. To fetch a webpage, all you have to do is call Invoke-WebRequest and give it a URL.

```powershell
Invoke-WebRequest -Uri 'http://howtogeek.com'
```
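Invoke-RestMethod does not appear again in this article, so here is a minimal sketch of the difference it makes. It assumes a JSON response; the payload is a hypothetical hard-coded string so the snippet runs offline, and ConvertFrom-Json (also new in PowerShell 3) stands in for the conversion Invoke-RestMethod would perform for you. The commented-out URL is a placeholder, not a real API.

```powershell
# Hypothetical JSON payload, standing in for what a REST endpoint might return.
$json = '{"title": "Example article", "tags": ["powershell", "web"]}'

# With Invoke-WebRequest you get raw text back and convert it yourself:
$parsed = $json | ConvertFrom-Json

# Invoke-RestMethod does this conversion for JSON responses automatically,
# so against a live endpoint the equivalent would be (placeholder URL):
#   $parsed = Invoke-RestMethod -Uri 'https://example.com/api/article'

$parsed.title         # Example article
$parsed.tags.Count    # 2
```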

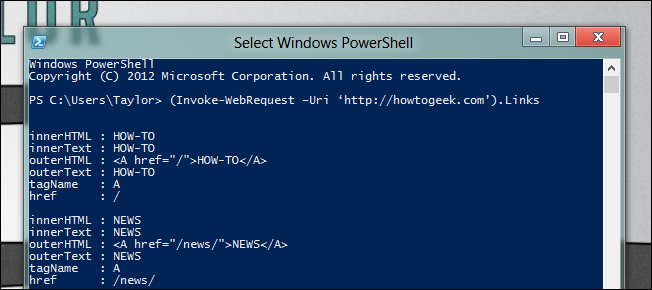

If you scroll down, you will see that the response has a Links property. Wrap the call in parentheses and you can read that property directly, so you get just the links back.

```powershell
(Invoke-WebRequest -Uri 'http://howtogeek.com').Links
```
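Each entry in Links is an object, not a plain string. PowerShell 3's member enumeration lets you read a property such as href straight off the whole collection, so against the live page you could write (Invoke-WebRequest -Uri 'http://howtogeek.com').Links.href to get only the URLs. The sketch below shows the idea offline, using hand-built stand-in objects with hypothetical data in place of a real response.

```powershell
# Hypothetical stand-ins for the objects in the Links collection; real entries
# carry more properties (for example outerHTML and tagName).
$links = @(
    [pscustomobject]@{ href = 'http://example.com/story-1' }
    [pscustomobject]@{ href = '/about' }
    [pscustomobject]@{ href = 'http://example.com/story-2' }
)

# Member enumeration: reading .href on the array returns the href of every element.
$hrefs = $links.href

# The explicit loop that member enumeration replaces:
$hrefsLoop = $links | ForEach-Object { $_.href }
```

Note that relative links such as /about come back as-is, which is one reason to filter on the href prefix when you only want full URLs.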

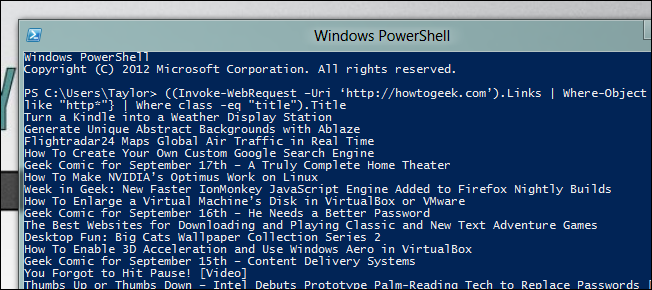

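As you can see, you get a lot of links back. This is where you need to use your imagination to find something unique about the links you are looking for. Let's suppose we want a list of all the articles on the front page. Here we keep only links whose href starts with "http" and whose class is "title", then use PowerShell 3's new member enumeration feature to read the Title of every match at once.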

```powershell
((Invoke-WebRequest -Uri 'http://howtogeek.com').Links |
    Where-Object { $_.href -like 'http*' } |
    Where class -eq 'title').Title
```
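The two Where filters above can also be folded into a single script block with -and. This is a minimal sketch of that variant, run against hypothetical stand-in link objects rather than the live page, so the property values (and the assumption that article links carry class "title") are illustrative only.

```powershell
# Hypothetical link objects standing in for (Invoke-WebRequest ...).Links.
$links = @(
    [pscustomobject]@{ href = 'http://example.com/story-1'; class = 'title'; title = 'Story one' }
    [pscustomobject]@{ href = 'http://example.com/story-2'; class = 'title'; title = 'Story two' }
    [pscustomobject]@{ href = '/about';                     class = 'title'; title = 'About us' }
    [pscustomobject]@{ href = 'http://example.com/tag/web'; class = 'tag';   title = 'Web' }
)

# One script block, both conditions: absolute URL and class "title".
$articles = $links | Where-Object { $_.href -like 'http*' -and $_.class -eq 'title' }

# Member enumeration reads the title off every remaining link.
$titles = $articles.title
$titles   # outputs "Story one" and "Story two"
```

The relative /about link and the "tag" link are both dropped, which is exactly what the two separate filters in the one-liner do.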

Another great thing you can do with the new cmdlets is automate everyday downloads. Let's look at automatically scraping the image of the day off the National Geographic website. To do this, we will combine the new web cmdlets with Start-BitsTransfer.

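```powershell
# Find the link whose inner HTML mentions "Download Wallpaper" and keep its URL
$IOTD = ((Invoke-WebRequest -Uri 'http://photography.nationalgeographic.com/photography/photo-of-the-day/').Links | Where innerHTML -like '*Download Wallpaper*').href

# Download the wallpaper into C:\IOTD\ with BITS
Start-BitsTransfer -Source $IOTD -Destination C:\IOTD\
```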
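The one-liner above relies on the filter finding exactly one absolute URL, and the transfer may fail if C:\IOTD\ does not exist yet. A slightly more defensive sketch follows. The helper names Resolve-Href, Get-FileNameFromUrl and Save-Resource are hypothetical, not built-in cmdlets; only the .NET System.Uri and System.IO.Path calls, Test-Path, New-Item, Join-Path and Start-BitsTransfer are real.

```powershell
# Hypothetical helper: turn a possibly relative href into an absolute URL,
# using the page it came from as the base.
function Resolve-Href {
    param(
        [Parameter(Mandatory)] [string] $BaseUrl,
        [Parameter(Mandatory)] [string] $Href
    )
    (New-Object System.Uri -ArgumentList ([uri]$BaseUrl), $Href).AbsoluteUri
}

# Hypothetical helper: use the last path segment of a URL as the local file name
# (AbsolutePath already excludes any query string).
function Get-FileNameFromUrl {
    param([Parameter(Mandatory)] [string] $Url)
    [System.IO.Path]::GetFileName(([uri]$Url).AbsolutePath)
}

# Hypothetical helper: create the folder if needed, then download with BITS.
function Save-Resource {
    param(
        [Parameter(Mandatory)] [string] $Url,
        [Parameter(Mandatory)] [string] $Folder
    )
    if (-not (Test-Path -Path $Folder)) {
        New-Item -ItemType Directory -Path $Folder | Out-Null
    }
    $target = Join-Path -Path $Folder -ChildPath (Get-FileNameFromUrl -Url $Url)
    Start-BitsTransfer -Source $Url -Destination $target
    $target
}
```

After checking that $IOTD is not empty, the last line could then become something like Save-Resource -Url (Resolve-Href -BaseUrl 'http://photography.nationalgeographic.com/photography/photo-of-the-day/' -Href ($IOTD | Select-Object -First 1)) -Folder 'C:\IOTD'.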

That’s all there is to it. Have any neat tricks of your own? Let us know in the comments.