"Resolution" is a term people often throw around---sometimes incorrectly---when talking about images. This concept is not as black and white as "the number of pixels in an image." Keep reading to find out what you don't know.

As with most things, when you dissect a popular term like "resolution" to an academic (or geeky) level, you find that it's not as simple as you might have been led to believe. Today we're going to see just how far the concept of "resolution" goes, briefly talk about the implications of the term, and cover a little bit about what higher resolution means in graphics, printing, and photography.

So, Duh, Images Are Made of Pixels, Right?

Here's the way you've probably had resolution explained to you: images are an array of pixels in rows and columns, every image has a predefined number of pixels, and bigger images with a bigger number of pixels have better resolution... right? That's why you're so tempted by that 16 megapixel digital camera, because lots of pixels is the same as high resolution, right? Well, not exactly, because resolution is a little bit murkier than that. When you talk about an image like it's only a bucket of pixels, you ignore all the other things that go into making an image better in the first place. But, without a doubt, one part of what makes an image "high resolution" is having a lot of pixels to create a recognizable image.

It can be convenient (but sometimes wrong) to call images with lots of megapixels "high resolution." Because resolution goes beyond the number of pixels in an image, it would be more accurate to say that such an image has high pixel resolution, or high pixel density. Pixel density is measured in pixels per inch (PPI), or sometimes dots per inch (DPI). Because pixel density is a measure of dots relative to an inch, one inch can have ten pixels in it or a million. And the images with higher pixel density will be able to resolve detail better, at least up to a point.
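To make the difference between pixel count and pixel density concrete, here is a minimal Python sketch. The 4928 x 3264 image size and the two print widths are hypothetical numbers picked for illustration, and the function names are made up for this example.

```python
def megapixels(width_px, height_px):
    """Total pixel count of an image, in millions of pixels."""
    return width_px * height_px / 1_000_000


def pixel_density(width_px, physical_width_in):
    """Pixels per inch (PPI) when the image is spread across a given width in inches."""
    return width_px / physical_width_in


# Hypothetical image: roughly what a 16 megapixel camera might produce.
width_px, height_px = 4928, 3264
print(f"{megapixels(width_px, height_px):.1f} megapixels")

# The same file, spread across two different (assumed) print widths.
for inches in (10, 40):
    print(f"{inches} in wide: {pixel_density(width_px, inches):.1f} PPI")
```

The megapixel count never changes, but the pixel density drops from roughly 493 PPI to roughly 123 PPI as the same pixels are stretched over four times the width. That is why "lots of pixels" and "high pixel density" are related but not interchangeable.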

The somewhat misguided idea of "high megapixel = high resolution" is a sort of carryover from the days when digital images simply couldn't display enough image detail because there weren't enough of the little building blocks to make up a decent image. So as digital displays started to have more picture elements (also known as pixels), these images were able to resolve more detail and give a clearer picture of what was going on. At a certain point, adding millions and millions more picture elements stops being helpful, because the image runs into the upper limits of the other ways that detail is resolved. Intrigued? Let's take a look.

Optics, Details, and Resolving Image Data

Another important part of the resolution of an image relates directly to the way it is captured. Some device has to parse and record image data from a source, and this is the way most kinds of images are created. That applies to most digital imaging devices (digital SLR cameras, scanners, webcams, etc.) as well as analog methods of imaging (like film-based cameras). Without getting into too much technical gobbledygook about how cameras work, we can talk about something called "optical resolution."

Simply said, resolution, in regard to any kind of imaging, means "ability to resolve detail." Here's a hypothetical situation: you buy a fancy-pants, super high-megapixel camera, but have trouble taking sharp pictures because the lens is terrible. You just can't focus it, and it takes blurry shots that lack detail. Can you call your image high resolution? You might be tempted to, but you can't. You can think of this as what optical resolution means. Lenses or other means of gathering optical data have upper limits to the amount of detail they can capture. They can only capture so much light based on form factor (a wide angle lens versus a telephoto lens), as the factor and style of lens allows in more or less light.

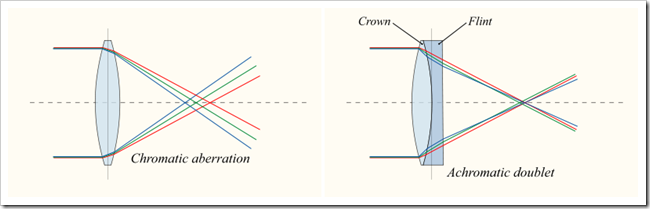

Light also has a tendency to diffract, and lenses can introduce distortions of light waves called aberrations. Both degrade image detail by keeping light from focusing accurately enough to create sharp pictures. The best lenses are designed to limit these distortions and therefore provide a higher upper limit of detail, whether the target image file has the pixel count to record that detail or not. Chromatic aberration, illustrated above, is when different wavelengths of light (colors) move at different speeds through a lens and converge on different points. This means that colors are distorted, detail is possibly lost, and images are recorded inaccurately because of these upper limits of optical resolution.

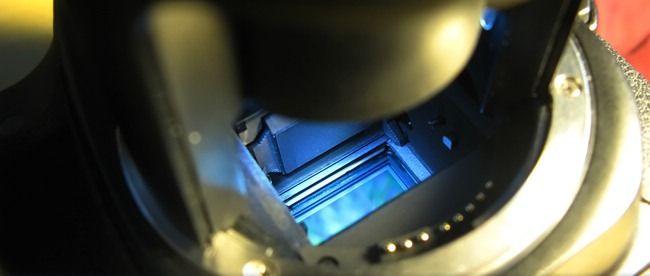

Digital photosensors also have upper limits of ability, although it's tempting to just assume that this only has to do with megapixels and pixel density. In reality, this is another murky topic, full of complex ideas worthy of an article of its own. It is important to keep in mind that there are weird trade-offs for resolving detail with higher megapixel sensors, so we'll go further in depth for a moment. Here's another hypothetical situation: you chuck out your older high-megapixel camera for a brand new one with twice as many megapixels. Unfortunately, you buy one at the same crop factor (that is, the same sensor size) as your last camera and run into trouble when shooting in low light environments. You lose lots of detail in that environment and have to shoot at very high ISO settings, making your images grainy and ugly. The trade-off is this: your sensor has photosites, little tiny receptors that capture light. When you pack more and more photosites onto a sensor of the same size to create a higher megapixel count, you lose the beefier, bigger photosites capable of capturing more photons, which would help to render more detail in those low light environments.
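The photosite trade-off is simple arithmetic, and a rough Python sketch shows it. The 23.6 x 15.6 mm sensor dimensions and the 16 and 32 megapixel counts below are assumptions chosen for illustration. The calculation also pretends the whole sensor surface is light-gathering area, which real sensors do not achieve, so treat the results as an upper bound rather than a spec.

```python
def photosite_area_um2(sensor_width_mm, sensor_height_mm, megapixels):
    """Approximate area available to each photosite, in square micrometers.

    Simplification: the full sensor area is divided evenly among photosites,
    ignoring wiring, gaps, and microlenses.
    """
    sensor_area_um2 = (sensor_width_mm * 1000) * (sensor_height_mm * 1000)
    return sensor_area_um2 / (megapixels * 1_000_000)


# Assumed sensor size; both hypothetical cameras share it (same crop factor).
SENSOR_W_MM, SENSOR_H_MM = 23.6, 15.6

old_camera = photosite_area_um2(SENSOR_W_MM, SENSOR_H_MM, megapixels=16)
new_camera = photosite_area_um2(SENSOR_W_MM, SENSOR_H_MM, megapixels=32)

print(f"16 MP: about {old_camera:.1f} square micrometers per photosite")
print(f"32 MP: about {new_camera:.1f} square micrometers per photosite")
print(f"Area ratio (old / new): {old_camera / new_camera:.1f}")
```

Under these assumptions, doubling the megapixel count on the same sensor halves the area each photosite has for collecting light, which is the low-light penalty described above.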

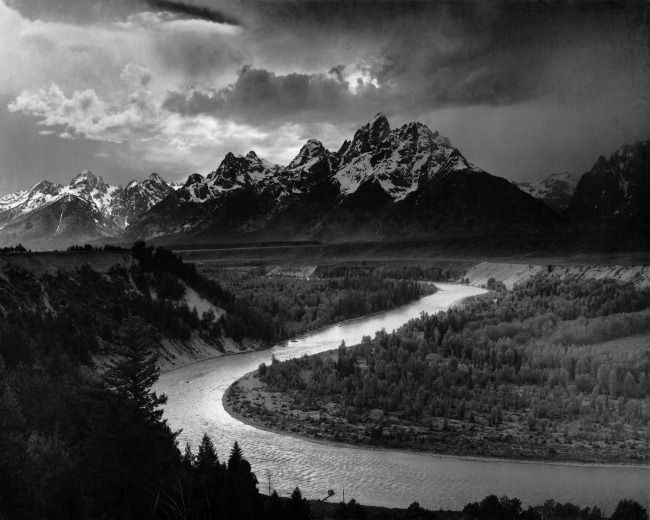

Because of this reliance on limited light-recording media and limited light-gathering optics, resolution of detail can also be achieved through other means. This photo is an image by Ansel Adams, renowned for his achievements in creating High Dynamic Range images using dodging and burning techniques and ordinary photo papers and films. Adams was a genius at taking limited media and using it to resolve the maximum amount of detail possible, effectively sidestepping many of the limitations we talked about above. This method, as well as tone-mapping, is a way to increase the resolution of an image by bringing out details that might otherwise not be seen.

Resolving Detail and Improving Imaging and Printing

Because "resolution" is such a broad-reaching term, it also has impacts in the printing industry. You're probably aware that advances in the past several years have made televisions and monitors higher definition (or at least made higher def monitors and televisions more commercially viable). Similar imaging technology revolutions have been improving the quality of images in print---and yes, this too is "resolution."

When we're not talking about your office inkjet printer, we're usually talking about processes that create halftones, linetones, and solid shapes in some kind of intermediary material used for transferring ink or toner to some kind of paper or substrate. Or, more simply put, "shapes on a thing that puts ink on another thing." The image printed above was most likely printed with some kind of offset lithography process, as were most of the color images in books and magazines in your home. Images are reduced to rows of dots and put onto a few different printing surfaces with a few different inks and are recombined to create printed images.

The printing surfaces are usually imaged with some kind of photosensitive material which has a resolution of its own. And one of the reasons that print quality has improved so drastically over the last decade or so is the increased resolution of improved techniques. Modern offset presses have increased resolution of detail because they utilize precise computer-controlled laser imaging systems, similar to the ones in your office-variety laser printer. (There are other methods as well, but laser arguably offers the best image quality.) Those lasers can create smaller, more accurate, more stable dots and shapes, which create better, richer, more seamless, higher-resolution prints based on printing surfaces capable of resolving more detail. Take a moment to look at prints done as recently as the early 90s and compare them to modern ones. The leap in resolution and print quality is quite staggering.

Don't Confuse Monitors and Images

It can be quite easy to lump the resolution of images in with the resolution of your monitor. Don't be tempted, just because you look at images on your monitor and both are associated with the word "pixel." It might be confusing, but pixels in images have variable pixel density (DPI or PPI, meaning the same image can be spread across more or fewer pixels per inch), while monitors have a fixed number of physically wired, computer-controlled points of color that are used to display the image data when your computer asks them to. Really, one kind of pixel is not related to the other. But they can both be called "picture elements," so they both get called "pixels." Said simply, the pixels in images are a way of recording image data, while the pixels in monitors are a way to display that data.

What does this mean? Generally speaking, when you're talking about the resolution of monitors, you're talking about a far more clear-cut scenario than with image resolution. While there are other technologies (none of which we'll discuss today) that can improve image quality, simply put, more pixels on a display add to the display's ability to resolve detail more accurately.

In the end, you can think of the images you create as having an ultimate goal: the medium you're going to use them on. Images with extremely high pixel density and pixel resolution (high megapixel images captured from fancy digital cameras, for instance) are appropriate for use with a very pixel dense (or "printing dot" dense) printing medium, like an inkjet or an offset press, because there's a lot of detail for the high resolution printer to resolve. But images intended for the web have much lower pixel density because monitors have roughly 72 ppi pixel density and almost all of them top out around 100 ppi. Ergo, only so much "resolution" can be viewed on screen, yet all of the detail that is resolved can be included in the actual image file.
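Matching an image to its medium comes down to multiplying the physical size by the density of the output device. Here is a small Python sketch of that idea. The 72 and 100 ppi figures come from the paragraph above; the 300 ppi print target and the 6 inch width are assumptions for illustration only, and the function name is hypothetical.

```python
def pixels_needed(physical_width_in, device_ppi):
    """Pixels required across the width to fill a given size at a given density."""
    return round(physical_width_in * device_ppi)


# Assumed target: an image shown 6 inches wide on each medium.
TARGET_WIDTH_IN = 6

media = {
    "monitor at 72 ppi": 72,
    "monitor at 100 ppi": 100,
    "print at 300 ppi (assumed)": 300,
}

for name, ppi in media.items():
    print(f"{name}: {pixels_needed(TARGET_WIDTH_IN, ppi)} px wide")
```

With these numbers, the screen versions need only 432 to 600 pixels across, while the assumed print target needs 1800. Extra pixels beyond what the medium can show are still in the file, but the viewer never sees them at that size.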

The simple bullet point to take away from this is that "resolution" is not as simple as using files with lots and lots of pixels, but is really a matter of how well image detail is resolved. Keeping that simple definition in mind, remember that there are many aspects to creating a high resolution image, with pixel resolution being only one of them. Thoughts or questions about today's article? Let us know about them in the comments, or simply send your questions to ericgoodnight@howtogeek.com.

Image Credits: Desert Girl by bhagathkumar Bhagavathi, Creative Commons. Lego Pixel art by Emmanuel Digiaro, Creative Commons. Lego Bricks by Benjamin Esham, Creative Commons. D7000/D5000 B&W by Cary and Kacey Jordan, Creative Commons. Chromatic Aberration diagrams by Bob Mellish and DrBob, GNU License via Wikipedia. Sensor Klear Loupe by Micheal Toyama, Creative Commons. Ansel Adams image in public domain. Offset by Thomas Roth, Creative Commons. RGB LED by Tyler Nienhouse, Creative Commons.